CPython's Garbage Collector and its Impact on Application Performance

Learn how the knowledge of CPython internals translate into performance insights for your code

A while back I published a detailed code walkthrough of CPython's GC implementation, but there was a need for a higher level explanation of the overall memory management mechanism of CPython without discussing the code. This article fills that gap. It provides a detailed overview of the overall memory management mechanism in CPython. The main focus is on the cyclic garbage collector (GC), the article covers details about how and when the GC runs and ties it up with its impact on the performance of an application.

This article was triggered due to a performance regression found in CPython’s incremental GC implementation in the 3.13 release candidate. As I posted an explainer about it on Twitter, I realized there is a need for a simpler article on the design of GC which makes it easy to deduce performance insights around memory management in Python applications.

⚡️Upcoming Live Session ⚡️

In the next live session we will learn about how bytecode compiled languages (like Python) work by live coding a compiler and virtual machine for a small subset of Python syntax. We will learn about the structure of Python’s AST, its bytecode instructions and how they execute. More details at the below link:

Reference Counting and Role of the GC in CPython

In CPython, the garbage collector isn’t the primary driver behind reclaiming the memory, it has a very limited role. CPython primarily depends on reference counting for reclaiming the memory of unused objects.

In reference counting, every object starts with a reference count of 1 on initialization. On assignment to other variables, or function parameters the reference count of the object gets incremented. Similarly, when a variable goes out of scope, or a function call returns, the reference count gets decremented. Finally, when the last variable referring to that object goes out of scope (i.e. the reference count reaches 0), the runtime immediately reclaims the memory for that object.

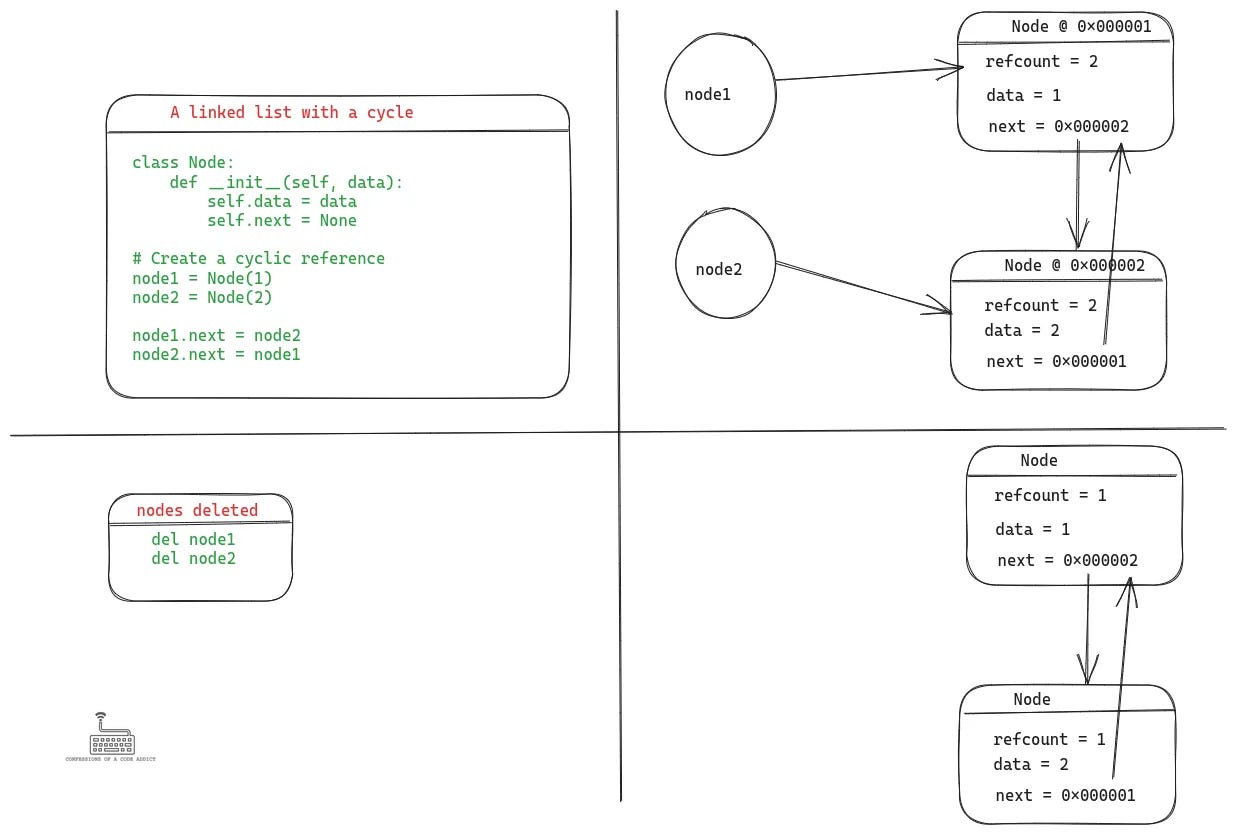

This scheme is simple to implement but has one shortcoming. If a set of object references build a cycle amongst themselves then their reference counts will never reach 0 even if no one else is referring to them. As a result, such objects are left behind in the heap which can quickly turn into a memory leak.

To reclaim the memory of such cyclic references, a garbage collector is needed which scans the objects on the heap, finds out the cyclic references and cleans them up if they are not reachable from anywhere else in the program.

Read my article on CPython’s reference counting internals to learn how it works and its implementation details.

CPython’s Object Allocator

Programming languages with managed memory need to make it really cheap to create new objects. If they always call malloc and free to create and destroy objects, it can quickly get inefficient. Apart from the overhead associated with increased system calls, it can also lead to fragmented memory which can result in poorer performance. Therefore, most language runtimes implement object pools and customized object allocators.

CPython also has an object allocator designed to minimize the cost associated with frequent allocation and deallocation of objects. The design of this allocator is similar to arena based allocators. Internally, it maintains object pools of various sizes. Depending on the size of memory requested, the allocator allocates memory from an appropriately sized pool. When the object’s use is done and it is deallocated, it is returned back to the allocator.

However, this allocator is used only for allocating objects of size less than 512 bytes. For larger objects, CPython relies on the system’s malloc API.

Now let’s get back to discussing the CPython GC.

Conditions for Triggering the GC

Programming languages which solely depend on the garbage collector for managing memory run the GC at a fixed frequency. However, in CPython most of the objects are freed up as soon as they are not required, thanks to reference counting. As a result, there is no need for running the GC at a fixed frequency.

Then how does the CPython runtime decide when to invoke the GC? It does so based on the number of objects currently alive in the young generation of the heap.

GC Generations: Conceptually, the heap is divided into a few segments called generations. Typically there is a young generation which is where all newly created objects are placed. And then there are one or more old generations where the objects which survived GC cycles while in the young generation are placed. The GC runs much less frequently for older generations with the hypothesis that if an object survives a GC cycle, it is very likely to stay around for a long time and should be checked less frequently for collection.

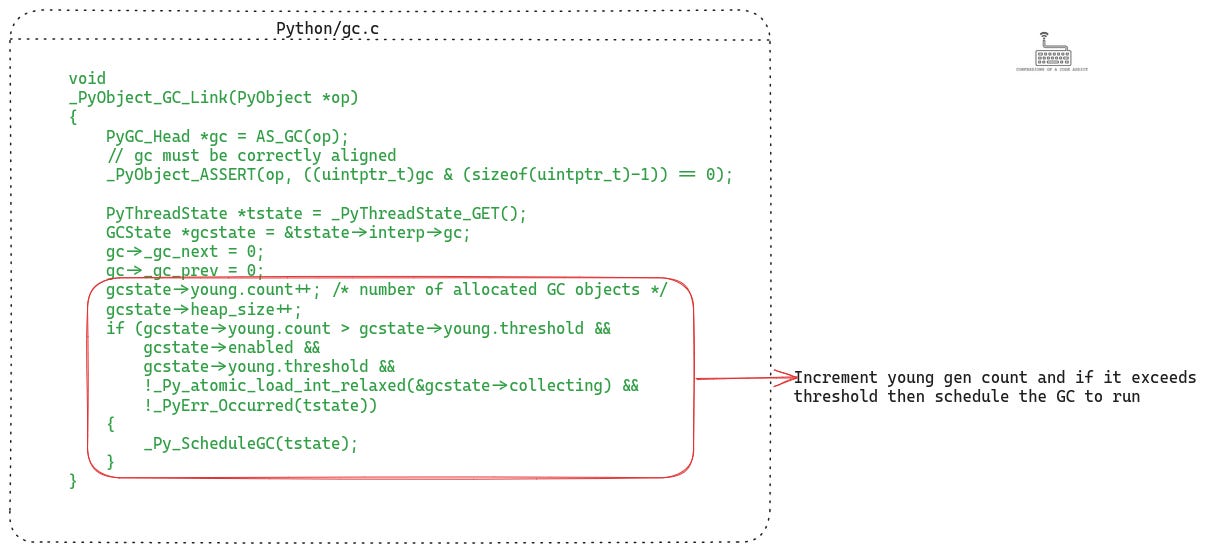

As soon as a new object is allocated, the runtime puts it into the list of objects tracked by the young generation. Similarly, when an object is deallocated (for instance when its reference count goes to 0) it is delinked from the young generation and the count of objects in young generation is decremented.

When the total number of alive objects in the young generation crosses a threshold (default value 2000 in Python 3.12, but it is configurable), the runtime sets a flag in the current thread’s thread state data structure. This flag is set in a field called eval_breaker where flags for a few other events are also set, such as signals.

This means that if most of your objects are short lived and do not have cycles, the population of objects within the young generation will remain within control and GC will not be triggered very frequently.

Entering the GC

So let’s say you create enough long lived objects that you eventually cross the young generation threshold and the GC flag is set. But that doesn’t invoke the GC automatically. The CPython virtual machine (VM) checks this flag at specific points during bytecode execution and invokes the GC if it finds the flag to be set.

The CPython VM checks the flag at the end of the execution of some very specific bytecode instructions. These instructions are essentially different variations of function calls and backward jump instructions (i.e. at the end of a loop iteration).

This means that if at the end of executing a function or a loop iteration, the GC threshold was crossed and the flag was set then the VM will stop executing further bytecode instructions of your program and switch to executing the GC to free up memory.

If you want to understand how the CPython works and its internals, read my article on the design & implementation of the CPython VM.

The job of the GC is to check the currently living objects in the young generation for cycles. If it finds any unreachable cyclic references, it cleans them up. Any objects which are not cleaned up are moved to the old generation.

The cycle detection algorithm requires the GC to analyze the incoming references for each object in the collection it is scanning. Even though the object graph is typically not very dense, it still is a lot of pointer chasing, which can be expensive if those objects are not in the CPU cache. Read my article on CPython GC implementation to understand the algorithm.

At the end of the GC cycle, the population of the young generation should reach 0 (or close to 0). Some objects which might have been created as part of the function or loop body would have been automatically destroyed by reference counting. The unreachable cyclic references would get cleaned up by the GC and the surviving objects would get promoted to the old generation.

Scanning the Older Generations and The Full Heap Scan

Whenever an older generation is being scanned, the GC scans all the generations younger to it as well. But when does the GC scan the older generations? It uses threshold values for the older generations as well. However, these thresholds are not based on the number of objects, but on how many times the previous generation has been scanned.

The default threshold values for the two old generations in CPython’s GC is 10. When the GC has been run for the young generation a 10 times, the GC will scan the first old generation + the young generation during the next cycle.

Similarly, when the first old generation has been scanned 10 times, the oldest generation becomes eligible to be scanned. When the oldest generation is being scanned it automatically involves scanning all the other generations, this is called a full heap scan because it is essentially scanning everything on the heap and it can be quite an expensive operation.

Scanning the entire heap every few cycles can be very expensive in certain applications which tend to create many long lived objects. And in general it is observed that objects which survive GC in the young generation tend to live for a long duration and therefore the full heap scan should be done more judiciously.

As a result, CPython puts an extra condition before deciding to do a full heap scan apart from the threshold. It tracks the total number of objects which have been involved in the previous full scans (called long_lived_total), and it also tracks the total number of new objects which are awaiting their full heap scan (called long_lived_pending). When the ratio of long_lived_pending to long_lived_total exceeds 25% then only CPython will actually perform a full heap scan.

The Incremental GC

Incremental GC was an optimization which was recently introduced in CPython after the 3.12 release with the goal of reducing the performance impact of a full heap scan.

As I mentioned at the beginning of the article, incremental GC has been reverted back (it might come back in the 3.14 release), its idea made sense and I will spend a moment to explain what it did.

Because the young generation is scanned only when the number of objects in it crosses a threshold, its performance cost is usually bounded and is largely uniform for a given application.

However, a full scan of the heap can make the overall GC cost unpredictable. The number of objects in the older generation can be unbounded if the application is creating too many long lived objects, and as a result the amount of time spent in GC can also be unpredictably high. This usually affects the tail latency of applications and makes the performance unreliable.

The idea of incremental GC is to amortize the cost of full heap scan by scanning a fraction of the old generation on each scan of the young generation. For instance, on every GC cycle the GC would scan the entire young generation and a small percentage of the oldest objects in the oldest generation. This way, the memory would still be reclaimed, but the overall cost of each GC cycle would become more predictable because now the GC would always scan a similar number of objects, thus improving the tail latency of applications.

This idea showed improvements in the benchmarks, but it seems to have hit an edge case in Sphinx, where it makes the performance much worse than the previous GC algorithm.

Although the real underlying cause behind the regressions is yet to be analyzed, it is not too hard to think of a few scenarios where incremental GC might result in more work per cycle. For instance, if the application is generating many long lived objects, then on each GC cycle those objects would be promoted to the old generation and accumulate there. As a result, over time the GC would be doing increasingly more work because the fraction of the old generation it needs to scan will steadily grow.

Understanding Full Heap Scan Costs with a Worst-Case Example

Now that we understand how GC works in CPython, I want to illustrate the cost of a full heap scan by the GC using a worst case scenario. This is a hypothetical example with many simplifying assumptions, in a real-world system things are neither going to be this extreme, nor such simplified assumptions will be true.

Assumptions:

GC Threshold: For CPython 3.12, the threshold for triggering a GC collection for the young generation (generation 0) is set to 2000 objects. The threshold for the two old generations is 10, which means that the first old generation is scanned after every 10 scans of the young generation and the 2nd old generation is scanned after every 10 scans of the first old generation.

Traffic: Let’s assume we are running a web service which is serving 100 requests per second.

Object Creation: Each request creates 20 new long-lived objects which survive the GC cycle.

Object Size: Each object is approximately 24 bytes in size. Normally, Python objects are much larger but let’s use this for simplicity.

Generational GC in Action:

Generation 0 (Young Generation) Triggers:

With 100 requests per second and each request creating 20 new long lived objects, we generate (

100 x 20 = 2000) objects within one second.As a result, the threshold for the young generation is reached, and a garbage collection cycle is triggered every one second.

Promotions and Collections:

Assuming all 2000 objects are long-lived and do not have any cyclic references, they get moved to the first old generation (generation 1) as a result of the GC cycle.

Performance Analysis:

Scalability:

If these 2000 objects do not reference each other, the GC’s task is simplified to a linked list traversal. (Internally all objects within a GC generation are tracked using a linked list of pointers)

Traversing a linked list requires at least two memory dereferences per node—one to dereference the current node pointer, and the other to get the next node’s address.

2000 objects with 24 bytes each amounts to 48,000 bytes which fit within the L1 cache. A single L1 cache lookup costs 4 cycles, so two lookups for traversing will take 8 CPU cycles.

As we are scanning 2000 objects, the full scan will take 16,000 cycles.

At 1 GHz, this translates to approximately 16 μ

sper GC cycle.

Subsequent Collections:

After 10 scans of the young generation (i.e. justin 10 seconds) the threshold for the first old generation will be reached.

At this point there will be a total of 22,000 objects combined in the two generations.

22,000 objects would consume about 528,000 bytes which will be spilled across L1 and L2 caches. To keep things simple, let’s assume the cost of each memory dereference is still 4 cycles.

Cost of this scan: (

22000 objects x 2 dereferences x 4 cycles per dereference) =176000CPU cycles, roughly (0.176 ms).

Escalating Costs:

After 10 scans of the first old generation (i.e. after 100 seconds), the threshold for the 2nd old generation will be reached.

At this point there will be a total of 220,000 objects to scan across all the three generations.

Total memory: (220000 x 24) bytes = 5.2 MB. This memory size exceeds the typical L1/L2 cache capacity.

If we assume that 20,000 objects fit within L1/L2 then traversing them would consume 160,000 cycles.

The remaining 200,000 objects would be in L3 cache. Assuming 30 cycles latency for L3 cache, traversing 200,000 objects would consume 200,000 objects x 2 dereferences x 30 cycles per dereferences = 12,000,000 cycles.

Overall cycles consumed would be 160,000 + 12,000,000 = 12,160,000 cycles.

On 1 1GHz machine, this translate into roughly 12ms spent inside GC per full heap scan.

Performance Impact:

If the web service normally takes 12 ms to serve a request, during the times when a full heap scan occurs, the latency can increase to 24 ms. This results in a 100% higher latency during a full heap scan by the GC which is quite a lot.

I’d emphasize again that this was a worst case example to put things into perspective about the costs of memory management. In real-world applications things are not this extreme.

Also, this does not mean that CPython’s GC is particularly bad. In most languages, the cost of GC tends to become a point of contention and a lot of developer time and effort is spent in trying to tune the GC, or optimize the application to reduce GC.

Applying GC Internals to Optimize Performance

Now that we understand how CPython manages memory, how can we use this knowledge to improve the performance of our applications? Let’s tie up our understanding of the GC internals of CPython with some actionable insights to reduce the cost of GC.

Note that, there are a ton of different kind of optimizations that you can do to reduce the memory usage of your program. The few insights that I am listing below are mostly to reduce the cost of GC or memory allocation, with the goal of illustrating how the knowledge of the internals help in analyzing and improving the performance of your applications. But you only want to look into these if your performance monitoring tools indicate that the GC is a bottleneck.

Consider Object Size in Allocations

CPython uses an efficient object allocator for objects smaller than 512 bytes. Larger objects are allocated using the system's malloc implementation, which can be more costly.

One potential optimization strategy is to design your data structures to favor smaller objects. This could involve splitting larger objects into smaller ones. While this may result in more individual allocations, these allocations will use the more efficient object allocator.

However, this approach comes with trade-offs:

It may increase overall memory usage due to additional object headers and pointers.

It could complicate your code and potentially slow down access patterns if related data is split across multiple objects.

It may result in more number of objects being created and if they are long lived then it may increase the GC frequency.

Always profile and measure before implementing such optimizations. Only consider this approach if you notice performance issues related to frequent malloc/free calls or memory fragmentation.

For applications that genuinely require many large objects, consider using a more efficient malloc implementation such as jemalloc, mimalloc, or tcmalloc.

Use Memory Efficient Data Structures

Use slot classes. For classes with many instances, using

__slots__can reduce the memory footprint and speed up attribute access. This also prevents the creation of a per-instance__dict__, which the GC would also need to consider.Instead of using a list, consider using the array module if you know that all of your data is numeric. The array module packs the data much more efficiently and reduces the pressure on memory.

Optimize Object Life Times

With the knowledge that every long living object has a potential CPU cost in terms of how frequently the GC will run and how much work it will have to do, it helps to rethink object lifetimes.

Avoiding unnecessary global objects is one obvious optimization. Global or module scope objects tend to stay around for longer durations, keeping them to a minimum is a good design choice, and keeps the GC happy as well.

Releasing resources eagerly is another optimization. For example, use context managers where possible to ensure underlying resources and objects are released as soon as possible.

Optimize the structure of long-lived objects. If such an object references many other objects, those references will persist in the heap. By restructuring these objects to contain only essential information, devoid of unnecessary references, you can release memory for the other objects and reduce GC frequency and cost.

Use weak references. When we weakly reference an object, the object’s reference count is not incremented. This means that if the object is not used anywhere else, it will be freed up despite of us holding a weak reference to it.

Minimize Cyclic References

By now you must know that the whole reason for the existence of the CPython GC is to free up memory associated with cyclic references; rest of the objects are freed up automatically using reference counting.

While sometimes the problem domain demands a design where the objects have cycles between them, but ensure that these do not exist unintentionally.

If the requirements of your application allow it then you can also consider using weak references in places where cycles might form.

Tune the GC Threshold

You may also consider tweaking the thresholds of the garbage collector using the gc module. You can increase the thresholds so that the GC is triggered less frequently at the cost of increased memory usage. Or you may decrease the thresholds so that the GC is run more frequently and memory is reclaimed more aggressively.

You should use telemetry tools to collect stats about the heap and GC usage, such as count of the number of objects in each generation, number of GC cycles triggered, and the amount of time spent in each GC cycle to make a more informed choice about tuning the GC parameters.

Profile and Monitor

Finally, profile and monitor the metrics of your application before jumping to doing these optimizations. Amdahl’s law tells us that the overall speedup we can gain by optimizing a part of the system is limited by the amount of time spent in that system. If the bottleneck of your application lies somewhere else, then optimizing memory is not going to bring much benefit.

Invest in memory profiling tools such as tracemalloc, scalene and other performance analysis tools such as perf, cProfile, py-spy. Check out my video on python profilers to learn more.

Conclusion

While people choose Python because of its syntax and productivity features, not because of its performance. But when you run into performance problems, then having an understanding of the internal mechanisms of the language is the only way to fix them. In this post we discussed how and under what conditions CPython’s garbage collector runs and what are its associated overheads. Based on this knowledge we also came up with a list of techniques to optimize memory usage of application so that the runtime performs GC less frequently.

I hope you find this post insightful. I’ve written a lot of articles covering the implementation details of CPython but I am realizing I also need to provide these insights which are useful for developers who are deploying Python in production. Stay tuned for more.

If you have read the full article and feeling adventurous, check out my article on the implementation of the CPython GC. It gives you a detailed walkthrough of the CPython code and explains the cycle detection algorithm and other juicy details.

CPython Garbage Collection: The Internal Mechanics and Algorithms

We’ve been talking about CPython internals and in the last article I went quite deep in CPython’s runtime. One of the crucial services that the runtime provides is that of managing a program’s memory during execution.

Support Confessions of a Code Addict

If you find my work interesting and valuable, you can support me by opting for a paid subscription (it’s $6 monthly/$60 annual). As a bonus you get access to monthly live sessions, and all the past recordings.

Many people report failed payments, or don’t want a recurring subscription. For that I also have a buymeacoffee page. Where you can buy me coffees or become a member. I will upgrade you to a paid subscription for the equivalent duration here.

I also have a GitHub Sponsor page. You will get a sponsorship badge, and also a complementary paid subscription here.