One Law to Rule Them All: The Iron Law of Software Performance

A systems-level reasoning model for understanding why optimizations succeed or fail.

“One ring to rule them all, one ring to find them, one ring to bring them all and in the darkness bind them.”

— J.R.R. Tolkien

Software optimizations are messy and often unpredictable. Whether you see a win is not guaranteed, and the reasons are usually unclear. Is there a way to reason about this?

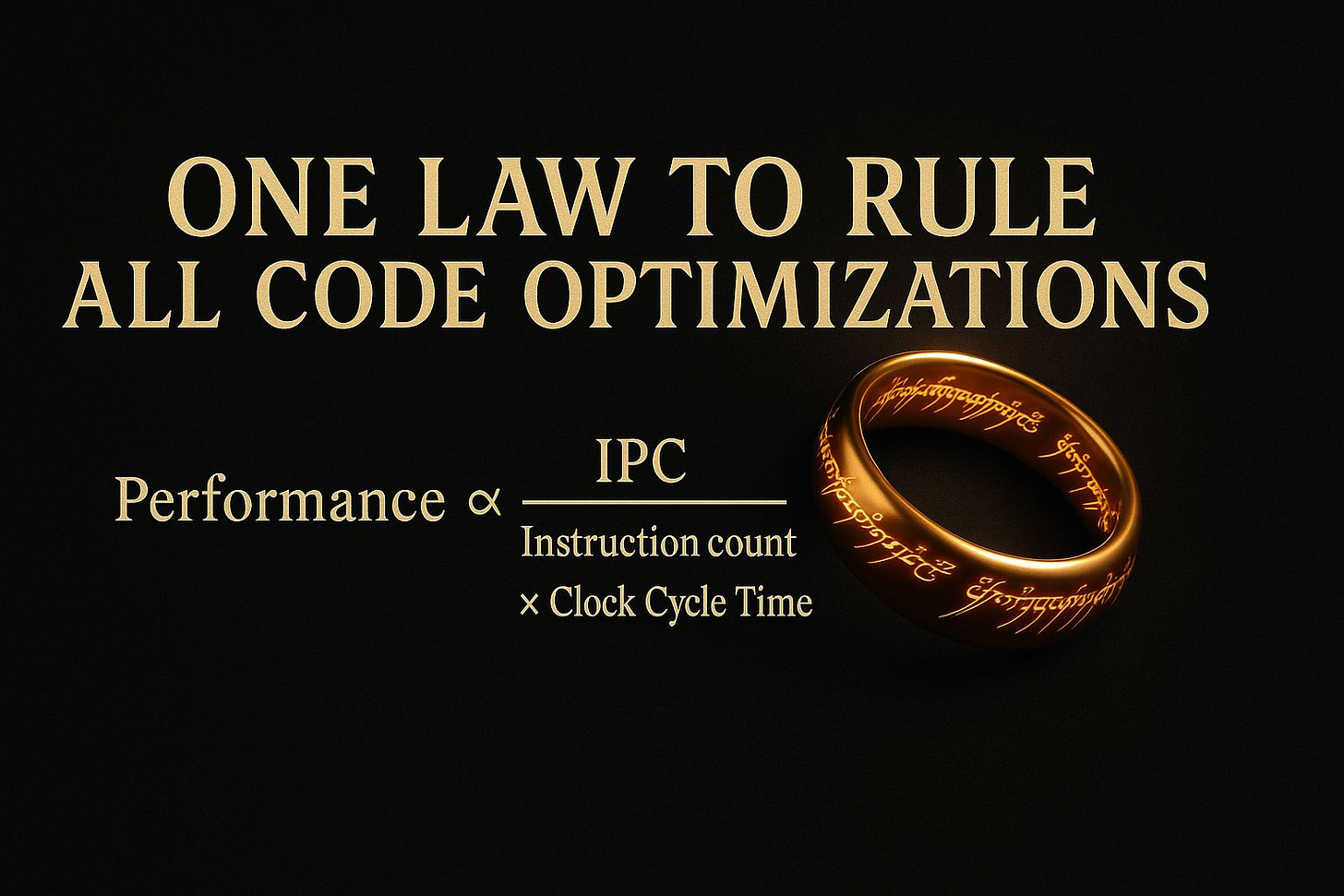

Maybe there is. In this article, I show you one law that explains all low-level code optimizations: when they work, and when they don’t. It’s based on the Iron Law of Performance, a model widely known in the hardware world but relatively obscure in software circles.

What we’ll see is that almost every low-level optimization, whether it's loop unrolling, SIMD vectorization, or branch elimination, ultimately affects just three metrics: the number of instructions executed, the number of cycles needed to execute them, and the duration of a single cycle. The Iron Law ties them together and gives us a unified reasoning model for software performance.

Of course, not all software optimizations fit into this model. Things like algorithmic improvements, contention removal, or language-level tuning (like garbage collection) lie outside its scope. I’m not claiming the Iron Law explains those.

What’s inside:

The Iron Law of Performance for software

Loop unrolling: reducing dynamic instructions

Function inlining: boosting IPC through linearization

SIMD vectorization: trading instruction count for complexity

Branch prediction: reducing pipeline flushes

Cache misses: backend stalls and instruction throughput

A reasoning framework to guide optimization decisions

Background Read:

This article assumes some knowledge of CPU microarchitecture, and optimization techniques such as branch elimination, loop unrolling etc. If you are unfamiliar with these, I recommend the following two articles:

The Iron Law of Performance (Hardware)

First, let’s start by understanding the Iron Law for hardware performance. It is a simple equation that models the performance of the hardware in the context of executing a program. This depends on three factors:

Number of instructions executed (also known as the dynamic instruction count)

Average number of cycles needed to execute those instructions (cycles per instruction or CPI)

Time taken to execute a single CPU cycle (clock cycle time)

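The following equation defines the law:

$$\text{Execution Time} = \text{Instruction Count} \times \text{CPI} \times \text{Clock Cycle Time}$$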

CPU architects use this to analyse how an architectural change impacts the performance of the processor. For example, should they increase the depth of the instruction pipeline?

In a pipelined processor, an instruction moves from one pipeline stage to another in one cycle. Naturally, the cycle time is dependent on the slowest pipeline stage. When you increase the pipeline depth, you break down some of the stages into more granular parts, thus reducing the work done in each stage and in turn reducing the cycle time. It means that the processor can now execute more cycles per second.

However, increasing pipeline depth also raises the penalty of cache and branch misses. For example, accessing main memory still takes about 100 ns, which translates to 100 cycles at 1 GHz but doubles to about 200 cycles at 2 GHz when cycle time is halved. Likewise, deepening the pipeline from 15 to 20 stages also increases the branch misprediction penalty from ~15 to ~20 cycles.

These increased latencies and penalties make the average CPI go up as well. So, whether the pipeline depth should be increased and by how much depends on the overall tradeoff. The iron law gives a very simple framework to make these decisions.
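To make the tradeoff concrete, here is a minimal sketch that evaluates the Iron Law for a design. The function names `cpu_time_ns` and `latency_in_cycles` are hypothetical helpers introduced for illustration, and any numbers passed to them are assumptions rather than measurements.

```c
#include <stdint.h>

/* Iron Law: time = instruction count x CPI x clock cycle time.
 * Hypothetical helper; cycle_time_ns is the clock period in nanoseconds. */
double cpu_time_ns(uint64_t instruction_count, double cpi, double cycle_time_ns)
{
    return (double)instruction_count * cpi * cycle_time_ns;
}

/* Number of cycles a fixed wall-clock latency costs at a given clock period. */
double latency_in_cycles(double latency_ns, double cycle_time_ns)
{
    return latency_ns / cycle_time_ns;
}
```

With these helpers, `latency_in_cycles(100.0, 1.0)` is 100 cycles at 1 GHz, while `latency_in_cycles(100.0, 0.5)` is 200 cycles at 2 GHz. Likewise, halving the cycle time from 1.0 ns to 0.5 ns only pays off if the CPI stays below twice its old value: `cpu_time_ns(1000, 1.5, 0.5)` is 750 ns against 1000 ns for `cpu_time_ns(1000, 1.0, 1.0)`.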

When you do low-level software performance optimizations, similar tradeoffs apply. Every optimization affects the program instruction count, cycles per instruction, and sometimes even the CPU clock frequency. So, it makes sense to apply the same model to analyse and reason about software-level optimizations as well. Let’s try to do that in the next few sections.

The Iron Law of Performance for Software

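In the context of software, we can slightly tweak the law to the following form:

$$\text{Performance} \propto \frac{\text{IPC} \times \text{Clock Frequency}}{\text{Instruction Count}}$$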

Here, we’ve replaced CPI (cycles per instruction) with IPC (instructions per cycle). Although they’re mathematical inverses, IPC is more intuitive for software engineers: for example, modern x86 processors can retire up to 4 instructions per cycle, so IPC gives a clearer sense of how close we are to the peak throughput.

We’ve also relaxed the equality to a proportionality. When optimizing software, we’re not looking for exact numbers; we’re reasoning about trade-offs.

So, what role do these three terms play in software performance?

Increasing IPC means the CPU can retire more instructions per cycle, reducing total execution time.

Lowering the dynamic instruction count means fewer instructions need to be executed overall. In general, this means the CPU needs to do less work and performance should go up.

Lowering the clock frequency, as sometimes happens with power-hungry instructions (e.g., AVX-512), increases cycle time and harms performance.

We’ll now apply this model to analyze several well-known optimizations: loop unrolling, function inlining, SIMD vectorization, branch elimination, and cache optimizations, and see how each one shifts the Iron Law variables.

Note: These factors themselves are not quite independent from each other. For example, when you reduce the dynamic instruction count of your program, you need to be careful about instruction selection.

As an example, integer additions have a very low latency, whereas integer divisions are expensive. So, if you reduce the instruction count in your program by replacing a high number of integer additions with a small number of integer divisions, your performance may not improve, or it could even degrade. It depends on how the decrease in instruction count trades off against the drop in IPC. Whichever factor dominates dictates the performance.
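The interplay between the three terms can be sketched in a few lines of C. This is only a reasoning aid: the `profile` struct, the function names, and every number in the usage note are hypothetical, chosen to illustrate the additions-versus-divisions tradeoff described above.

```c
/* Hypothetical summary of one version of a program (illustrative only). */
struct profile {
    double instruction_count; /* dynamic instructions executed */
    double ipc;               /* average instructions retired per cycle */
    double clock_ghz;         /* sustained clock frequency */
};

/* Performance is proportional to (IPC x clock frequency) / instruction count. */
double relative_performance(struct profile p)
{
    return p.ipc * p.clock_ghz / p.instruction_count;
}

/* A result above 1.0 means `after` is faster than `before`. */
double speedup(struct profile before, struct profile after)
{
    return relative_performance(after) / relative_performance(before);
}
```

Suppose a version with 1000 cheap instructions runs at an IPC of 2.0, and a rewrite needs only 100 instructions but, being dominated by slow divisions, manages an IPC of 0.1 at the same 3 GHz clock. Then `speedup` comes out at about 0.5: ten times fewer instructions, yet half the performance.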

Loop Unrolling

Loop unrolling is a classical optimization. Instead of executing one step of the loop body per iteration, you rewrite the loop to execute multiple steps per iteration. Consider the following loop that computes the sum of an integer array.

```c
int sum = 0;
for (int i = 0; i < n; i++) {
    sum += arr[i];
}
```

If we unroll this loop four times, it will look like the following:

```c
int sum0 = 0, sum1 = 0, sum2 = 0, sum3 = 0;
int i = 0;

// Process 4 elements at a time
for (; i + 3 < n; i += 4) {
    sum0 += arr[i];
    sum1 += arr[i + 1];
    sum2 += arr[i + 2];
    sum3 += arr[i + 3];
}

// Combine the partial sums, then handle the remainder
int sum = sum0 + sum1 + sum2 + sum3;
for (; i < n; i++) {
    sum += arr[i];
}
```

Now, let’s reason about how such an optimization can improve the performance of a program and what tradeoffs to consider, i.e., in which situations it may not deliver an improvement.

Note: Usually, you don't have to unroll a loop yourself. The compiler does it when it sees it will deliver better performance. But sometimes it may not do that because it cannot guarantee program correctness due to limited knowledge or constraints about the code. So it is useful to be aware of it.
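If you want to nudge the compiler rather than rewrite the loop by hand, GCC offers a loop pragma for this. The sketch below assumes GCC 8 or newer; the function name `sum_array` is hypothetical, and the pragma is a request that other compilers may ignore.

```c
#include <stddef.h>

/* Sums an int array while asking GCC to unroll the loop four times.
 * The pragma is a hint, not a guarantee of the generated code. */
int sum_array(const int *arr, size_t n)
{
    int sum = 0;
#pragma GCC unroll 4
    for (size_t i = 0; i < n; i++) {
        sum += arr[i];
    }
    return sum;
}
```

Note that the pragma only controls the unroll factor. Whether the compiler also splits the sum into independent accumulators, as in the hand-written version, depends on the optimization level and the data type, so it is worth checking the generated assembly.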

Impact on Instruction Count

For large n, unrolling reduces the dynamic instruction count. In the example shown above, each iteration of the loop does three things: it checks the loop condition, increments the loop counter, and updates the sum. Counting each as one instruction (and treating the comparison and its conditional jump as a single step), the normal loop executes about 3n instructions.

The loop unrolled four times executes one loop comparison, one loop index increment, and four additions per iteration: six instructions for every four elements, or 6n/4 = 1.5n instructions for an array of size n.

In Iron Law terms, we’ve driven down the Instruction Count by nearly 50 % (for large n), all else equal. This sounds like an obvious performance win, but we need to also look at how this impacts the IPC.
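The counting argument can be written down as a small model. This is a sketch of the simplified accounting used here (one compare, one increment, and one add per element), not a prediction of what a real compiler emits; the function names are hypothetical.

```c
#include <stdint.h>

/* Plain loop: compare + increment + add = 3 instructions per element. */
uint64_t instructions_simple(uint64_t n)
{
    return 3 * n;
}

/* 4x unrolled loop: compare + increment + 4 adds = 6 per group of four,
 * plus 3 per leftover element handled by the remainder loop.
 * The few adds that combine the partial sums are ignored. */
uint64_t instructions_unrolled4(uint64_t n)
{
    return 6 * (n / 4) + 3 * (n % 4);
}
```

For n = 1,000,000 the model gives 3,000,000 instructions for the plain loop and 1,500,000 for the unrolled one. For n = 3 both versions execute 9 instructions, which is why the saving only shows up for large n.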

Impact on IPC

Recall from our Iron Law that Performance ∝ IPC / Instruction Count. We’ve already reduced instruction count, so if we can raise IPC (or at least not lower it), net performance improves. Let’s see how unrolling affects IPC.

Increased Instruction Level Parallelism

The main advantage of loop unrolling is the potential increase in the instruction throughput of the program. The processor is capable of executing multiple instructions per cycle, e.g., the modern Intel processors can execute up to 4 instructions every cycle.

However, to achieve that kind of throughput, the processor needs to have enough independent instructions to execute, which is difficult. Usually, instructions have dependencies between them, i.e., the result produced by one instruction is consumed by the next. Such instructions cannot be executed in parallel.

For example, consider the assembly (generated by -O1 flag to GCC) for the body of the normal for loop shown previously (without loop unrolling):

Let me explain what is going on:

The register edx holds the current value of the sum and at every iteration the value of arr[i] gets added to it.

The register rax holds the address of the current array element, arr[i]. At the beginning of the loop iteration, the value of arr[i] gets added to the sum value in edx. Then in the 2nd instruction rax gets incremented by 4, which means now rax contains the address of the next array element arr[i + 1].

Finally, the last two instructions check if we have reached the end of the array, and if not then jump back to the beginning of the loop.

So, for a large array, the CPU is going to be executing these four instructions for a while. Can it execute some of these in parallel so that the loop finishes faster? Not quite. The instructions have dependencies between them that stop the CPU from doing that.

Notice that the first addl instruction that updates the sum in edx depends on the previous iteration's edx and rax values, so the CPU can't issue the next iteration's addl until the previous iteration's addl and addq instructions are finished. In other words, there simply aren't any independent instructions for the CPU to execute in parallel.

Loop unrolling fixes this problem. The following assembly code is the loop body for the unrolled code shown previously.

In the unrolled loop, the compiler assigns each partial sum to its own register (edx, edi, esi, ecx), so each addl instruction uses a different register and memory address. This makes these instructions independent and the CPU can issue and execute those in parallel, reducing the number of cycles needed to finish the loop, and improving the IPC.

In Iron Law terms, we’ve increased IPC from roughly 1.0 (due to dependencies) to perhaps ~3.0 or higher, depending on execution port availability. Combined with a 50 % drop in instruction count, that yields a significant net gain.

Note: How many of these add instructions will actually execute in parallel depends on how many functional units are there in the CPU to perform integer addition. So how much unrolling to do also depends on what kinds of instructions are there to execute.

However, it isn’t all rosy and shiny. Loop unrolling can also hamper the IPC in other ways. Let’s see how.

Register Spills Due to Increased Loop Body Size

Unrolling the loop creates many local variables and increases the demand for registers. When unrolled too many times, or when unrolling a large complicated loop, it can result in register spills. It means that for some variables the compiler will have to use the stack when it runs out of registers.

When register spills happen, the instructions that read data from the stack instead of registers take longer to finish. While a value can be read from a register within a single cycle, reading from stack can take 3-4 cycles (assuming an L1 cache hit).

So, the operations that could be done in a single cycle will now take several cycles due to memory access. In such situations, if the CPU doesn’t have other instructions to execute, it will sit idle and waste resources. This increases the average cycles per instruction and lowers the IPC.

In Iron Law terms, register spills reduce IPC, which can partially or completely negate the instruction count reduction. You must weigh these together.

Note: It is not guaranteed that a register spill will necessarily drop the IPC, because sometimes the compiler can schedule the instructions better to keep the CPU busy while other instructions are stalled on memory access. But it is a tradeoff that you risk introducing when being too aggressive with this optimization.

Instruction Cache Pressure Due to Increased Loop Body Size

Another potential impact of unrolling the loop is the increased code footprint that can cause pressure on instruction cache. These days the instruction caches are large enough that unrolling a loop will not result in cache misses for the loop itself, but in larger systems where there is existing pressure on the instruction cache, this may cause eviction of other instructions.

For example, most x86 cores have a 32-64 KB L1 I-cache. For a loop body consisting of four instructions of 4 bytes each (16 bytes), unrolling it 4 times grows the loop to roughly 64 bytes, which is negligible by comparison.

So, in general it is not a huge concern for high-end CPUs. But we still need to be aware of the tradeoff from Iron Law's perspective because increased instruction cache misses lower how fast the CPU frontend can send instructions to the backend for execution, thus lowering the IPC.

Loop Unrolling from the Lens of the Iron Law

Below is a summary of trade-offs when viewing loop unrolling through the Iron Law.

Function Inlining

Next, let’s see how function inlining shifts the balance between instruction count and IPC. Inlining is a simple optimization that compilers perform routinely: they replace a function call with the body of the called function, avoiding the overhead of the call. Let’s understand with an example.

Consider the following C function and its assembly (generated by GCC with -O1)

Function calls incur hidden costs beyond the core logic. When this function executes, several additional steps occur:

Stack Frame Setup: A stack frame needs to be set up for the function to manage the function local data on the stack. At the minimum, it requires saving the rbp register on the stack and then copying the current value of the rsp register (the stack pointer) into rbp. So, at least two instructions. These days the compilers optimize this away if they notice that the function doesn’t use the stack, but it is not guaranteed.

Saving and Restoring Callee Registers: As per the System V AMD64 calling convention, certain registers are required to be saved by the callee function if it needs to use them. This is needed because those registers might be in use by the caller and if the callee doesn’t preserve the previous values, then the caller’s state will be corrupted. So, sometimes you will notice code to save and restore these registers as well. In the case of the code shown above, the function is simple enough that it doesn’t need to do this. But it is also a potential cost of calling functions.

Destroying Stack Frame: As the function returns, it needs to destroy the stack frame and restore the stack to the state it was in before the call. This again incurs an extra set of instructions, as you can see in the assembly.

Function Return: Finally, the ret instruction is required to return the control from the function back to the caller.

Apart from the extra work inside the called function, calling the function also requires extra instructions. The following figure shows a main function calling compute, and on the right hand side you can see the assembly code.

So, what are the overheads while making the function call?

Saving/Restoring Caller Saved Registers: The Sys V AMD64 ABI defines certain registers as caller saved registers, meaning that the caller needs to save these registers on the stack before making the function call so that the callee function can use these registers freely. In the above code for main, you can see it saving the values of rax and rdx on the stack using the push instruction. In this case, the compiler does not care about restoring them back, but usually you also need to restore them back after the function call returns by a corresponding pop instruction. So, you have two extra instructions per saved register.

Setting up Function Arguments: Before invoking the function call the caller needs to set up the function call arguments. The Sys V AMD64 ABI designates certain registers in which these arguments can be passed. In the assembly code, I’ve highlighted the instructions which set up the registers.

Calling the Function: Calling the function requires an extra call instruction. Compared to everything else this looks like a small cost, but nevertheless, when executing a function very frequently, it adds up.

So, when you inline a function, you save all this extra work that the CPU needs to do each time the function is called. The following figure shows a version of the program where the compute function has been inlined in main.

As you can see, inlining has streamlined the entire flow. The extra instructions are gone and only the core logic of the inlined function remains.

Now, it may look like an obvious performance win but let’s analyse from the perspective of the iron law.

Impact on Program Instruction Count

Function inlining reduces the dynamic instruction count of the program (number of instructions executed) in two ways. One is directly due to avoiding the function call overhead, and second is by compiler optimizations that get unlocked after inlining.

Direct Reduction in Instruction Count

call/ret instruction elimination

stack frame setup/teardown elimination

function argument handling elimination

register save/restore elimination

Even if we conservatively assume a saving of 5 instructions per call, inlining a function that is called one million times saves the CPU from executing 5 million extra instructions. The cycles freed up this way can go to other, useful instructions, which helps the overall throughput.
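To compare the two situations on your own machine, GCC and Clang let you force or forbid inlining with function attributes. The following is a minimal sketch under that assumption; the helper names (`scale_call`, `scale_inline`, and the two `sum_*` functions) are hypothetical and unrelated to the compute function shown in the figures.

```c
/* Never inlined: every use pays for call, ret and argument setup. */
__attribute__((noinline))
int scale_call(int x)
{
    return 2 * x + 1;
}

/* Always inlined: the body is copied into each caller. */
static inline __attribute__((always_inline))
int scale_inline(int x)
{
    return 2 * x + 1;
}

/* One real function call per element. */
int sum_with_calls(const int *v, int n)
{
    int sum = 0;
    for (int i = 0; i < n; i++) {
        sum += scale_call(v[i]);
    }
    return sum;
}

/* Same logic, but the call overhead is gone from the loop body. */
int sum_inlined(const int *v, int n)
{
    int sum = 0;
    for (int i = 0; i < n; i++) {
        sum += scale_inline(v[i]);
    }
    return sum;
}
```

Both functions return the same result; the difference is in the number of instructions executed per element. Comparing the two with a profiler or by reading the generated assembly shows the call overhead directly.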

Context-Sensitive Optimizations

Apart from eliminating function call overhead, inlining gives the compiler opportunity to do further optimizations of the inlined code because it has more information about the context in which the function was being called.

For example consider the following code (source).

```c
#include <limits.h>  // INT_MAX, INT_MIN

int sat_div(int num, int denom) {
    if (denom == 0) {
        return (num > 0) ? INT_MAX : INT_MIN;
    }
    return num / denom;
}

int foo(int a) {
    int b = sat_div(a, 3);
    return b;
}
```

After inlining the sat_div function into foo, the compiler may simplify it to the following. It can do that because it knows that the second parameter is always 3 when called from foo.

```c
int foo(int a) {
    // The generated code for this looks confusing because
    // the compiler has turned a division into a multiplication.
    return a / 3;
}
```

These kinds of optimizations may not always be possible, but they are a potential positive outcome that can further reduce the number of instructions that the CPU needs to execute.

Impact on IPC

Again, the impact of function inlining on the program IPC is not direct, but via many indirect factors. Let’s see how.

Increased Register Pressure

Inlining large functions with many variables can increase the register pressure and cause a potential spill onto the stack. The compiler needs to make a complex decision considering things like register pressure, function size and other factors. So, usually it may not inline a function if there is going to be a spill. But, if you are manually inlining a function, or forcing the compiler to inline, then it is a potential factor that may impact the IPC of your program.

Increased Code Size

Inlining functions results in a larger code footprint because you are making copies of the function everywhere it is called. This increases instruction cache pressure and can cause more cache misses. Frequent instruction cache misses can starve the CPU backend of new instructions to execute, causing the IPC to drop.

Instruction Level Parallelism

Inlining functions can increase instruction level parallelism because it eliminates the branch introduced in the code due to the function call. It gives the CPU a larger window of instructions to analyze that may enable it to find more work to do in parallel, thus improving the IPC.

Function Inlining from the Lens of Iron Law

Again, we can see that whether or not performance improvements are seen from function inlining depends on several factors. But eventually, all of these factors result in two metrics: the dynamic instruction count, and overall IPC of the program. The following table summarizes these tradeoffs.

With loop unrolling and inlining covered, next we’ll see how SIMD vectorization affects instruction count, IPC, and even clock frequency.

SIMD Vectorization

Single instruction multiple data (SIMD) is a widely used optimization technique when the algorithm performs the same operation on multiple data elements. This is particularly applicable in numeric computing, image processing and similar domains. It improves the performance by significantly improving the IPC and lowering the dynamic instruction count, because the CPU is able to do more work in fewer instructions.

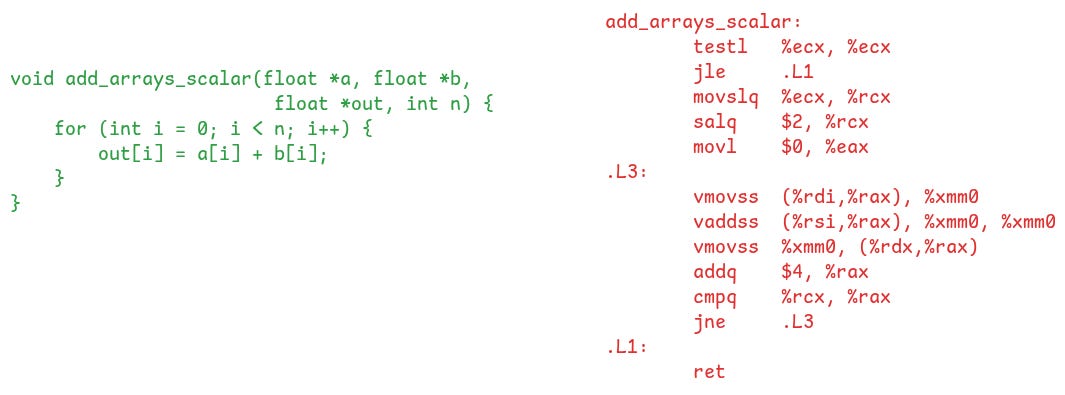

As an example, consider the following function and its assembly:

The function is performing vector addition. I’ve used the -O1 flag which prevents the compiler from vectorizing it. The label .L3 contains the loop body. It executes 4 instructions per element of the vectors. If we manually vectorize the code, or use the -O3 optimization flag, we get the following assembly output which uses SIMD instructions.

Here, the compiler has generated SIMD instructions like vmovups and vaddps which can process 8 float values (256 bits) in a single instruction. It means that the SIMD version of the loop can process 8 elements in just 3 instructions. Compare it to the non-vectorized version shown previously that was executing 4 instructions per array element.
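For readers who want to see the same idea at the source level, here is a minimal sketch of an eight-wide vector addition. It uses the GCC/Clang vector extension instead of the compiler's auto-vectorizer or AVX intrinsics, so it is a stand-in for the code in the figures rather than a reproduction of it; the type `v8f` and the function `vec_add` are hypothetical names.

```c
#include <stddef.h>
#include <string.h>

/* GCC/Clang vector extension: eight floats treated as one 256-bit value. */
typedef float v8f __attribute__((vector_size(32)));

/* c[i] = a[i] + b[i], eight elements per step, scalar loop for the tail. */
void vec_add(const float *a, const float *b, float *c, size_t n)
{
    size_t i = 0;
    for (; i + 8 <= n; i += 8) {
        v8f va, vb, vc;
        memcpy(&va, a + i, sizeof va);   /* load 8 floats, any alignment */
        memcpy(&vb, b + i, sizeof vb);
        vc = va + vb;                    /* one vector add covers 8 lanes */
        memcpy(c + i, &vc, sizeof vc);   /* store 8 results */
    }
    for (; i < n; i++) {
        c[i] = a[i] + b[i];              /* leftover elements */
    }
}
```

How this maps to machine instructions depends on the target flags. With AVX enabled, the compiler can typically use 256-bit loads, adds, and stores for the main loop; without it, the same source is lowered to narrower operations. The scalar tail loop is part of the extra code that vectorization brings along.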

Now, let’s analyze how SIMD vectorization impacts the program performance from the lens of the Iron Law.

Impact on Instruction Count

If you look at the vectorized assembly output above, it may appear as if the number of instructions has significantly increased. However, what matters is how many instructions the CPU eventually executes.

Typically, for large datasets, the number of instructions executed by the CPU drops significantly when SIMD is used, because each instruction operates on multiple data points simultaneously.

In the example above, the scalar version of the loop executed roughly four instructions per element: two loads, one addition, and one store. In contrast, the vectorized version performed the same work using just three SIMD instructions, each operating on eight elements at once. This reduces the instruction count per element from 4 to 0.375, yielding a theoretical reduction of about 10.67× in instruction count within the vectorized loop.

So, in general, vectorization results in a massive decrease in the instruction count executed by the CPU.

Impact on IPC

Let’s analyze how SIMD instructions impact the IPC of programs.

Increased Code Size and its Effects on Instruction Cache

Vectorization increases the overall code footprint. One reason is the complexity of the vectorized code itself, including the extra instructions for processing the leftover elements.

Apart from that, on x86, SIMD instructions tend to be longer than scalar ones (due to decades of backward-compatible extensions). When code is heavily vectorized, this increased instruction size leads to a larger static code footprint.

Also, some SIMD instructions have higher latency and can take a few cycles to produce their results. This means that the dependent instructions have to wait longer and the overall instruction throughput is lower. The trick to overcome this is to manually unroll the loop in code. Again, this means even larger code size.

In summary, vectorization inflates the static code footprint. This expanded footprint can evict hot code from the L1 instruction cache, causing front-end stalls and lowering IPC.

Note: While most tight vector loops will easily fit within a 32–64 KB I-cache, it is a tradeoff worth being aware of.

Impact on Instruction Fetch and Decode

On x86, many SIMD instructions, especially AVX and AVX-512, are wider and more complex to decode than typical scalar instructions. This reduces how many instructions the CPU frontend can fetch and decode per cycle, lowering IPC.

Modern x86 processors can fetch 16 bytes and decode up to 4 instructions per cycle. With 3-byte scalar instructions, that’s enough bandwidth for peak throughput. But with 7-byte AVX-512 instructions, only 2 can be fetched per cycle, and decode throughput can drop to 1–2 instructions per cycle.

To mitigate this, CPUs use a μop cache to store decoded instructions. During loop execution, this often hides the decode bottleneck. But for small vector sizes, where the loop runs only briefly, this overhead can dominate and the drop in IPC can negate any performance gains from vectorization.

Register Pressure

Vectorized code usually results in a much larger set of instructions. This results in more general-purpose registers being used as part of the loop being vectorized, increasing the register pressure.

Apart from that, sometimes the loop itself has to be unrolled to take into account the higher latency of some SIMD instructions. This requires using more SIMD registers, which puts pressure on the limited number of SIMD registers.

If vectorization results in register spills, frequent memory accesses can decrease the overall IPC due to the stalls while waiting for memory loads to return. If this becomes a dominant factor, it may eat up the gains provided by SIMD instructions.

Note: With AVX-512 having 32 registers, the chances of a spill are slim but it is worth mentioning this factor for a full coverage of all the tradeoffs.

Impact on Clock Cycle Time

Remember that the Iron Law has three terms: dynamic instruction count, IPC and the CPU clock cycle time. So far all the optimizations we discussed only affected the first two factors, but SIMD instructions impact the clock frequency as well.

On x86, certain SIMD instructions (AVX2 and AVX-512) cause the CPU clock frequency to dynamically scale down to keep the power consumption and temperature in control. In other words, it increases the clock cycle time (negatively impacting the performance).

From Iron Law's perspective, vectorization lowers the dynamic instruction count, but also increases the clock cycle time. It results in an interesting tradeoff where the wins are not obvious.

SIMD wins are clearest when most work is vectorized, but in mixed workloads the reduced clock speed can hurt performance. For example, Cloudflare saw a 10% drop in web-server throughput (requests served per second) when using vectorized hashing algorithms. They found that the CPU spent only 2.5% of the time in vectorized code, and the remaining time in scalar execution. In this case, the increased cycle times outweighed the SIMD gains—Iron Law in action.
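A back-of-the-envelope model shows why a small vectorized fraction cannot pay for a clock slowdown. The sketch below is an Amdahl-style estimate, not a description of Cloudflare's measurements: the function `relative_time` is hypothetical, it assumes the lower clock applies to the whole program, and the 8× vector speedup and 10% frequency drop in the usage note are made-up inputs.

```c
/* Relative run time after vectorizing part of a program (1.0 = unchanged).
 * vector_fraction: share of the original run time spent in the vectorized code
 * vector_speedup:  how much faster that share becomes, measured in cycles
 * freq_scale:      new clock / old clock, e.g. 0.9 for a 10% lower clock */
double relative_time(double vector_fraction, double vector_speedup,
                     double freq_scale)
{
    double cycles = (1.0 - vector_fraction) + vector_fraction / vector_speedup;
    return cycles / freq_scale;
}
```

With 2.5% of the time in vector code, `relative_time(0.025, 8.0, 0.9)` is about 1.087, so the program gets roughly 9% slower even though the vector code itself runs 8× faster. If 90% of the time were vectorized, `relative_time(0.9, 8.0, 0.9)` would be about 0.24, a clear win despite the lower clock.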

Analyzing SIMD from the Lens of Iron Law

In summary, SIMD vectorization can slash instruction count and boost IPC, but it also increases cache footprint, register pressure, and may slow the clock. Here’s how these factors map to the Iron Law metrics:

Optimizing Branch Mispredictions

Branch mispredictions are a common performance bottleneck. When the CPU predicts a branch incorrectly, it must discard the speculatively executed instructions and start fetching from the correct address. This flush costs around 15 to 20 cycles, and sometimes more depending on the pipeline depth. These stalls hurt the CPU’s instruction throughput and directly lower the IPC.

To understand why prediction is needed in the first place, consider how the CPU executes instructions. The backend, which performs the actual computation, is fast and parallel. But it depends entirely on the frontend to deliver a steady stream of decoded instructions. This pipeline works well when code is linear. But when there is a conditional branch, the next instruction depends on the result of a previous comparison instruction. Waiting for that result introduces bubbles in the pipeline and wastes cycles.

To avoid this delay, the CPU speculatively picks a direction using its branch predictor and fetches instructions along that path. If the guess is correct, the pipeline stays full and performance remains high. If the guess is wrong, the CPU has to roll back the speculative work, fetch the correct instructions, and refill the pipeline. This flushing not only delays execution but also wastes frontend bandwidth.

Even though modern branch predictors are highly accurate, often over 95 percent, some branches are unpredictable by nature. Others suffer because the predictor's limited buffer space gets overloaded in large, complex programs.

To see how costly this can be, imagine a loop with one million iterations and a branch that is mispredicted five percent of the time. At a penalty of 20 cycles per miss, this results in one million wasted cycles. That is enough to wipe out the benefit of most other optimizations.
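The arithmetic behind that estimate is simple enough to keep as a helper when reading profiler output. This is a sketch with a hypothetical function name; the miss rate is passed as an integer percentage to keep the calculation exact.

```c
#include <stdint.h>

/* Expected cycles lost to branch mispredictions:
 * branches x (miss rate in percent / 100) x penalty per miss. */
uint64_t wasted_cycles(uint64_t branches, uint64_t miss_rate_percent,
                       uint64_t penalty_cycles)
{
    return branches * miss_rate_percent * penalty_cycles / 100;
}
```

For the loop described above, `wasted_cycles(1000000, 5, 20)` returns 1,000,000: fifty thousand mispredictions at 20 cycles each.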

Let’s now analyze how optimizing branches affects performance using the Iron Law framework.

Impact on Instruction Count

The exact impact on the dynamic instruction count depends on the specific optimization and how it changes the code execution. For example, you can reorder the conditions in a loop so that the most predictable and frequently executed branch is at the beginning, thus resulting in reduced branch misses. In this case, the overall number of executed instructions may drop.

Sometimes you may replace a branch with branchless logic using bitwise operations or other techniques. This usually results in more instructions but removes the branch, and with it the risk of misprediction. So, you may end up with a higher number of instructions executed but save the misprediction penalties.

The point is that whether or not you see a significant improvement in performance largely depends on the IPC factor. Let’s analyze that.

Impact on IPC

Branch optimizations can impact the IPC in a variety of ways.

Reduced Pipeline Stalls and Flushes

When done well, fewer branch misses mean fewer pipeline stalls and flushes, which improves instruction delivery to the backend and raises the IPC.

Impact on ILP

Another aspect to consider with branch optimizations is their impact on instruction level parallelism.

Often, branchless implementations require more instructions than their branching counterparts and introduce serial dependencies. For example, consider the following function for conditionally swapping two values.

And the following figure shows a branchless way of implementing the same function along with its assembly output.

You can clearly see that the branchless version has more instructions.

The problematic part, however, is that the branchless version results in dependent instructions, which lowers the ILP. In the example above, the compiler has used cmov instructions, which conditionally copy a value depending on the result of a condition. These instructions sidestep the branch predictor, but they introduce data dependencies. Any subsequent instruction that depends on a result produced by a prior cmov instruction has to wait until the cmov instruction has finished. This lowers the potential ILP.

The following figure shows another example: a branchless function that computes the maximum of two integers. Again, most instructions read and write the eax register, creating a dependency chain among them. The CPU has to execute these sequentially, resulting in significantly lower ILP.
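A common way to write such a function uses a bitwise mask. The sketch below is one well-known formulation and may differ from the code in the figure; the function name is hypothetical.

```c
/* Branchless max using a mask.
   Each step consumes the result of the previous one:
   compare -> negate -> AND -> XOR. */
int max_branchless(int a, int b) {
    int mask = -(a < b);          /* all ones if a < b, otherwise zero */
    return a ^ ((a ^ b) & mask);  /* yields b when a < b, otherwise a  */
}
```

Only `a ^ b` can be computed independently of the comparison. Everything else forms a single chain, which is why a version like this leaves the CPU little room to overlap work.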

So, the moral of the story is that branchless code often comes at the cost of reduced ILP (and thus lower IPC). It is only worth doing when the branch predictor is missing frequently on that code. Making a highly predictable branch branchless can backfire: there are almost no misprediction penalties to recover, so the drop in IPC dominates everything else.

Analyzing Branch Optimization from the Lens of Iron Law

Branch optimization is tricky to get right, but its performance gains are relatively easy to reason about through the lens of the Iron Law. Here’s a concise overview:

Analyzing Cache Misses from the Lens of Iron Law

The final optimization we’ll discuss is minimizing cache misses. I’ll keep this section brief. The goal is to complete the picture and demonstrate that the Iron Law applies to almost every low-level optimization you might attempt.

Data cache misses can significantly reduce IPC. When a load misses in every level of the cache hierarchy, it may wait hundreds of cycles for the data to arrive from main memory. During this time, it occupies CPU resources such as a reservation station slot, a reorder buffer slot, and a physical register. Any instructions that depend on its result cannot proceed either. They remain in the reorder buffer until the data becomes available.

If terms like reorder buffer and reservation station are new to you, I suggest reading my article on the microarchitecture of SMT processors, which touches on these details.

When enough of these long-latency instructions accumulate, they create backpressure in the backend. Reservation stations fill up, the reorder buffer approaches capacity, and eventually the frontend is forced to stop issuing new instructions. This limits parallelism and reduces the number of instructions executed per cycle.

Optimizations like structure padding, blocking, data layout transformations, and software prefetching aim to reduce miss rates and improve IPC. However, these often increase instruction count (both static and dynamic) because of added pointer arithmetic, bounds checks, or loop restructuring. The tradeoff is clear: does the reduction in stalls outweigh the overhead of extra instructions?
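Loop blocking (tiling) illustrates this trade-off well. The sketch below transposes a square matrix two ways; the function names and the tile size of 32 are assumptions for illustration, and a good tile size depends on your cache sizes and element type.

```c
#include <stddef.h>

/* Naive transpose: reads from src are sequential, but writes to dst
   jump n elements at a time, so large matrices miss the cache often. */
void transpose_naive(const double *src, double *dst, size_t n) {
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < n; j++)
            dst[j * n + i] = src[i * n + j];
}

#define TILE 32  /* assumed tile size; tune for the target machine */

/* Blocked transpose: process one TILE x TILE block at a time so the
   lines touched in src and dst are reused while still cached.
   The price is two extra loop levels plus the tile-bound calculations. */
void transpose_blocked(const double *src, double *dst, size_t n) {
    for (size_t ii = 0; ii < n; ii += TILE) {
        for (size_t jj = 0; jj < n; jj += TILE) {
            size_t i_end = (ii + TILE < n) ? ii + TILE : n;
            size_t j_end = (jj + TILE < n) ? jj + TILE : n;
            for (size_t i = ii; i < i_end; i++)
                for (size_t j = jj; j < j_end; j++)
                    dst[j * n + i] = src[i * n + j];
        }
    }
}
```

Both functions produce the same result. The blocked one executes more instructions (extra loop control and bound checks) in exchange for fewer misses, so whether it wins depends on whether the matrix is large enough for the misses to matter.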

We will not go into further detail here, but the pattern should feel familiar by now.

Conclusion

We began the article with a bold claim: that one law can explain all low-level code optimizations. After walking through multiple examples, that claim feels much less dramatic.

Most low-level optimizations shift one or more of the Iron Law variables: instruction count, IPC, or clock-cycle time. We often focus on isolated effects, like reducing cache misses or branches, and get confused when performance doesn’t improve. But that confusion disappears once we look at the bigger picture.

The Iron Law gives us a way to step back and reason about trade-offs clearly, without relying on guesswork. When combined with tools like perf, which show instruction counts, IPC, and backend stalls directly, it becomes easier to understand not just whether something changed, but whether it changed in the right direction.
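If you want to plug counter readings into the law yourself, the arithmetic is small enough to fit in two helper functions. This is a sketch with hypothetical function names; the inputs are whatever instruction and cycle counts your profiler reports, plus the clock frequency you assume the core ran at.

```c
/* Iron Law: time = instructions * (cycles / instruction) * (seconds / cycle).
   Written here in terms of IPC and clock frequency, which are the
   reciprocals of CPI and cycle time. */

/* Instructions retired per cycle, from raw counter values. */
double ipc(double instructions, double cycles) {
    return instructions / cycles;
}

/* Estimated run time in seconds for a given instruction count,
   IPC, and clock frequency in Hz. */
double estimated_seconds(double instructions, double ipc_value, double clock_hz) {
    return instructions / ipc_value / clock_hz;
}
```

As a made-up illustration: a change that raises the instruction count from 1.0 billion to 1.2 billion while lifting IPC from 1.0 to 2.0 at the same clock speed cuts the estimated time by 40%, because the IPC gain outweighs the extra instructions. A change that adds the same instructions without moving IPC makes things 20% slower.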

This model doesn’t apply to every kind of optimization. It won’t help with algorithmic improvements or garbage collection tuning. But for the kind of low-level performance work that many developers struggle to reason about, it gives a clear lens.

So next time you’re tuning code, ask yourself which of the three metrics you’re moving. You might find that one law really does explain more than you expected.