Hardware-Aware Coding: CPU Architecture Concepts Every Developer Should Know

Write faster code by understanding how it flows through your CPU

Even the most elegant algorithms can run painfully slow when they fight against your computer's underlying hardware. The difference between mediocre and exceptional performance often comes down to whether your code works with, or against the CPU's architecture.

Modern processors employ sophisticated mechanisms for executing instructions efficiently. Writing code that takes advantage of these mechanisms for delivering maximum performance is what I call "hardware-aware coding".

In this article, I'll use a drive-through restaurant analogy to explain three CPU architecture concepts every developer should know:

instruction pipelining

memory caching

speculative execution

Along with exploration of these techniques, we will also look at multiple concrete code examples to understand how to apply this knowledge to optimize code performance. Let's learn how to write code that your CPU actually wants to run.

Stream - Scalable APIs for Chat, Feeds, Moderation, Video & Audio (Sponsored)

Stream helps developers build engaging apps that scale to millions with performant and flexible Chat, Feeds, Moderation, and Video and Audio APIs and SDKs powered by a global edge network and enterprise-grade infrastructure.

A Free Maker Plan - Available for teams of 5 or fewer with less than $100K funding and $10K monthly revenue

Full-Featured Stack - Includes Chat (2K MAU), Video/Audio APIs ($100 monthly credit), and Activity Feeds

Global Edge Network - Enterprise infrastructure with 9ms average response times and 99.999% uptime SLA

Risk-Free Development - Hard usage limits ensure you'll never face unexpected bills

Multi-Platform SDKs - Implement across React, iOS, Android, Flutter, and more with minimal code

The Drive-through Restaurant Analogy

Throughout the article we will use the analogy of a drive-through restaurant where people drive through in their vehicles, stop by at the order window, place their order, collect it and move on. There is usually a queue of cars waiting to place their orders and the restaurant staff needs to optimize their order taking, and preparation pipeline to deliver the orders as fast as possible and as many orders as possible.

This analogy applies closely to how a CPU executes instructions.

Just like the restaurant needs to process customer orders, a processor needs to process program instructions.

Similar to how several customers await in the queue to place their orders, many instructions await to be picked up for processing by the hardware.

After an order is taken, it is broken down into its individual items and each individual item is prepared one step at a time. Similarly, an instruction is also broken down into smaller instructions (called microinstructions) for execution by the hardware.

Just like it is imperative that the restaurant optimises various stages of order preparation to process as many orders as possible every hour (the order throughput), the processor must also process as many instructions as possible every CPU cycle (the instruction throughput) to deliver high performance.

Now, let’s use this analogy to understand how the hardware optimises processing and execution of instructions.

A Pipeline of Orders

In the beginning, the restaurant was running into losses. They had all the staff and equipment but everything was underutilised. The restaurant was not delivering enough orders to make profits. The owner hires an expert to figure out how to improve things.

The expert comes and observes what is happening. He notices that when a customer arrives in her car, until her order is not delivered, all other customers have to wait.

If it takes ten minutes to prepare one order, then the restaurant can only deliver one order in ten minutes, or six orders per hour. This long wait time was leading to very few orders being taken and delivered, while most of the staff and equipment was lying idle and unused.

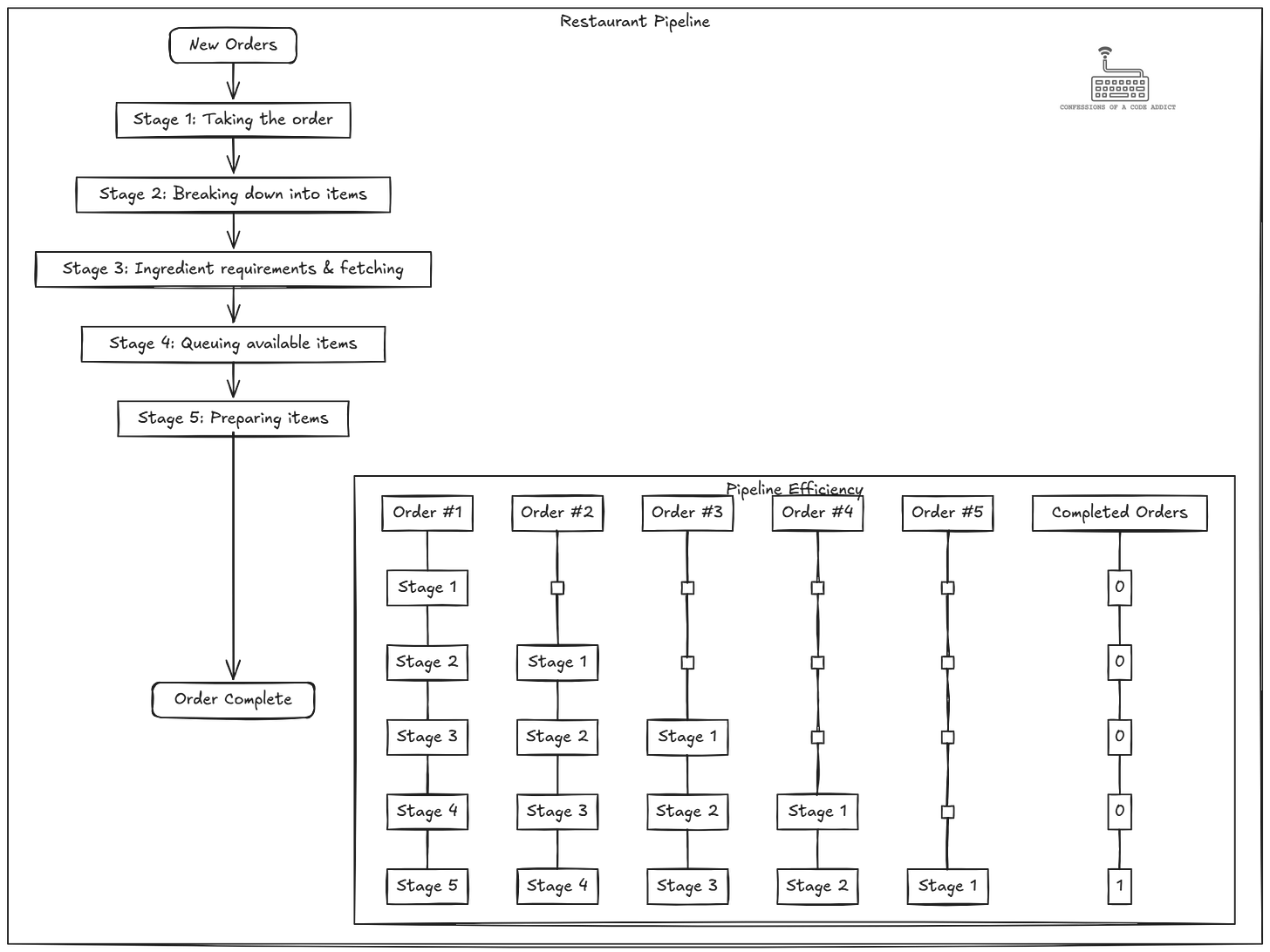

After realizing the source of the inefficiency, the expert comes up with the idea of building a pipeline for processing orders. The pipeline breaks down order processing into a set of stages. As an order moves from one stage to another, it makes space for another order to enter the pipeline. An example of such stages could be:

Stage-1: Taking the order

Stage-2: Breaking it down into individual items

Stage-3: Identifying the ingredient requirements and fetching the ingredients from warehouse that are not available for immediate access

Stage-4: Queuing the items for cooking (whose ingredients are available)

Stage-5: Cooking an item from the queue

There are five stages and assuming each stage takes two minutes in the ideal scenario, the restaurant can finish processing an order in 10 minutes.

It will take the restaurant 10 minutes to deliver the very first order but after that the pipeline will be full and the restaurant will start to finish one order every two minutes. We will refer to it as the steady state of the pipeline.

With this pipelined order processing, the restaurant can deliver 30 orders per hour as compared to the previous regime which was only able to process 6 orders per hour—a 5x higher order throughput, i.e. more revenue.

Even the cooking of an item can be broken down into individual stages. For instance, making a burger can consist of toasting the burger, adding the patty and vegetables, and finally adding cheese. As a burger moves from one stage to another, a new burger can be started to get cooked.

The following diagram visualizes the stages of the pipeline and how the first five orders proceed through it.

Instruction Pipelining

Similar to how processing one order at a time was the bottleneck for the restaurant’s efficiency, it used to be a bottleneck for processors as well. A single instruction during its execution doesn’t use all the processor resources.

For example, if the instruction is accessing memory then the execution units (adders, multipliers) remain unused. This is similar to how the restaurant was inefficiently using its resources when it was processing only one order at a time.

To improve this situation, Instruction processing is pipelined in the processors to enable them to process multiple instructions simultaneously. The pipeline consists of several stages and as one instruction moves from the first stage to the second, it makes space for a new instruction to be introduced into the pipeline.

The number of stages in a CPU pipeline varies. Some simpler architectures consist of a five stage pipeline while there are also more complex pipelines in high performance architectures (such as x64) that have very deep pipelines consisting of 15-20 stages. Let’s use a simple five stage pipeline as an example to understand instruction pipelining better. These five stages are:

Fetch: An instruction is fetched from memory. This is similar to taking the next order

Decode: The instruction is decoded to identify the operation (e.g. add, multiply, divide) and its operands. In CISC style processors (e.g. X86), the instruction is also broken down into one or more simpler instructions, called microinstructions (μops). This is similar to how an order is broken down into its individual items and the required ingredients

Execute: Decoded μops are picked up and executed (assuming all the required operands are available). This is similar to how an individual order item is picked up by a cook for preparation.

Memory access: Any memory reads or writes required by the instruction are done

Write back: The final instruction result is written back to the destination

This pipeline has five stages and in a steady state it can process five instructions at a time and retire (i.e. successfully finish) one instruction every CPU cycle.

This means that while an instruction is being executed, others might be getting fetched, and decoded, similar to how while an item is being cooked, a new order is being taken and another order’s ingredients are being prepared.

Parallel Order Processing

The restaurant owner liked how the restaurant was making profits. He asks the expert if he can scale the profits even more. The expert gets back to the job. He thinks the pipeline is doing so well, what if he hires more staff and creates more parallel pipelines so that the restaurant could take multiple orders parallelly.

He installs three more order windows for taking orders, so now four customers can place their orders simultaneously. He also hires more workers, cooks and equipment (ovens, stoves, friers) to independently work on the pipeline stages of those orders in parallel.

With a five-stage order pipeline and four such pipelines, the restaurant can now have 20 orders under process in a steady state, and it can deliver 4 completed orders every two minutes to the customers. This is 4x the previous throughput.

Superscalar Instruction Execution

Similar to how the restaurant scaled its order processing capacity by installing multiple pipelines, modern processors have also been equipped with multiple execution resources. For example, they have multiple ALUs for arithmetic computations, and multiple load/store units for memory operations. But, utilizing these requires that the CPU can issue multiple instructions in parallel, for instance, if there are two add instructions in the program, the CPU can process them in parallel instead of serial order.

Modern X64 processors can issue up to 4 new instructions each cycle and with their deep pipelines, they can have hundreds of instructions in different stages of completion. Under a steady state, such a processor can deliver a throughput of up to 4 instructions/cycle (peak instruction throughput).

Out-of-Order Execution

Taking advantage of this instruction level parallelism (ILP) requires that the processor is able to find enough independent units of work which can be executed in parallel in the same cycle.

Usually, a short sequence of instructions in a program are all dependent. For example, the immediate next instruction might be dependent on the current instruction’s result. So the processor looks at a larger window of instructions to identify independent instructions that can be executed in parallel.

This naturally means that the processor executes instructions out of their natural program order. It is called out-of-order execution and it enables processors to find and execute multiple instructions in parallel. Even though the processor executes instructions out-of-order, their results are committed to the process state in their original order, it ensures that the program logic remains correct.

So how does all this knowledge about pipelining superscalar and out-of-order instruction execution help us improve the performance of our code? We know that achieving peak instruction throughput requires that the processor finds enough independent instructions to execute in parallel. So are there ways to do this? Let’s see an example.

Applying Mechanical Sympathy: Loop Unrolling

Let’s say every order pipeline in the restaurant has one fryer. A customer comes and places an order for four fries. The order is placed in the pipeline but because there is only one fryer on that pipeline, until the first fry is not done, the next one cannot be prepared. This means that particular order window is blocked and no more orders can be taken in the meantime.

If those four fries were distributed on the different pipelines, they could all be prepared in parallel and independently. Neither the customer would have to wait extra long, nor the order window would have been blocked.

This is the idea of mechanical sympathy. By organizing our code in a way which works in synergy with the way the hardware works, we can get maximum performance possible.

In the case of software, we can have bottlenecks which don’t allow the processor to execute as many instructions in parallel as it can. In order to fully utilize the superscalar capabilities of the hardware, there have to be enough independent instructions in the code. But often, there are situations where the code is written such that we have a sequence of instructions where the next instruction depends on the previous one’s result. For example:

for (int i = 0; i < size; i++) {

// Each addition depends on previous result

sum += array[i];

}The optimization to fix such a bottleneck is called “loop unrolling”. It basically means executing multiple steps of the loop in a single iteration. For example, we can compute four parallel sums in each iteration. Each sum value can be updated independently of the other one, the CPU will notice that and execute those four add operations in parallel.

// Better superscalar utilization - independent operations

int sum1 = 0, sum2 = 0, sum3 = 0, sum4 = 0;

for (int i = 0; i < size; i += 4) {

sum1 += array[i];

sum2 += array[i+1];

sum3 += array[i+2];

sum4 += array[i+3];

}

int sum = sum1 + sum2 + sum3 + sum4;Usually, we don’t have to do such kind of optimization ourselves, the compilers these days can do this for us. However, when you notice below par instruction throughput, you may want to check the compiler’s output to see what is going on. Sometimes, the compiler may not be able to do the right optimization and you may have to roll up your sleeves to do it yourself.

The Ingredients Cache

While pipelining of orders was a great idea, it was still not as successful as it could be. Orders were taking a long time to be prepared, which was preventing the restaurant from accepting more orders and adding them to the pipeline.

On analysis, the expert finds that this was happening because the chefs would not start cooking an item until all of the required ingredients were brought to them. And instead of keeping them locally stocked, the ingredients were being delivered from the market on demand. It took 10 minutes to get something delivered from the market–the amount of time in which the restaurant could have delivered 5 orders if this stall was not there.

The expert optimizes this by installing local shelves and fridges to stock the ingredients which have been in-demand recently, he calls it the ingredients cache.

He designed this based on the observation that usually at certain hours of the day, certain items are in demand and in that case keeping their ingredients helps preparing these items faster. The technical name of this property is temporal locality, which means an item which is required now is very likely to be required in the near future as well. Of course, when people are ordering random things then this optimization is not of much use.

Another thing about these shelves and fridges is that they have limited space, so when they run out of space, the restaurant needs to throw away some of the ingredients to make space for the ones which are required right now but not present in the cache. This is called the cache eviction policy.

Apart from the local shelves and fridges, the restaurant also builds a bigger cache in the basement from where any ingredient can be fetched in 2 minutes. And, whatever is not present even in the basement, gets delivered from the market. This gives rise to a hierarchy of ingredient caches, each with larger capacity and larger access times.

Caches in Processors

Just like items of an order need ingredients immediately available for cooking, the CPU needs the operand values for an instruction to be available in the CPU to execute them.

For example, consider a line of code a = b + c, where a, b, c are integers. To execute this code, the values of the variables b and c need to be brought into the CPU registers from memory.

All the program data resides in the main memory, and it takes 100-200 cycles to read any data from there. In the above example, if both the values need to be fetched from main memory, the simple add operation will take 200-400 cycles which is extremely slow. For comparison, the CPU can do the addition within a single cycle if the operands are in its registers, so this is 200x slower at the very least.

Just like the restaurant built a hierarchy of storages to retrieve the ingredients faster, the processors come with a hierarchy of cache memories. These days we usually have three levels of caches in the processors, called L1, L2 and L3 caches–each larger and slower and farther from the CPU than the previous level. The access latency of the L1 cache is usually 3-4 cycles which is significantly faster than main memory but it is of very small capacity, 32-64 kB on modern X64 hardware.

The following diagram illustrate the sizes of all the memories in the hardware and their latencies:

Just like the restaurant keeps the currently in-demand ingredients in its cooking floor cache, processors also keep the most recently used data in the L1 cache, anticipating temporal locality.

As these caches are small, they also employ an eviction policy to make space for new data. Most of the processors implement some approximate variant of the least recently used eviction (LRU) policy, which means evict cache lines which have not been accessed for a while.

Spatial Locality of Ingredients

Apart from temporal locality, the caches exploit another property of data, called spatial locality. Let’s understand through the restaurant example.

While caching ingredients improved speed, another inefficiency remained. When preparing a dish whose ingredients weren't on the cooking floor, the restaurant faced a problem with how their warehouse was organized.

Initially, ingredients were stored randomly throughout the warehouse - flour might be in aisle 1, tomato sauce in aisle 7, and cheese in aisle 12. The delivery person could only visit one location per trip, bringing whatever was at that location. This meant multiple trips were needed to gather all ingredients for a single recipe, causing significant delays.

The restaurant reorganized their warehouse so that ingredients commonly used together were stored at the same location. Pizza ingredients (flour, sauce, cheese, toppings) were all placed on the same shelf, as were burger ingredients in another section.

Now, when the delivery person made a single trip to get flour for a pizza, they automatically brought back all the other pizza ingredients stored at that same location. This "spatial locality optimization" meant one trip could supply everything needed for a recipe, dramatically reducing preparation time.

Just as the restaurant groups ingredients that are used together, computers benefit from similar spatial organization of data in memory. Let's see how this applies to our processors.

Spatial Locality of Data

Similar to how the delivery person could only visit one location in the warehouse at a time, the memory bus can be requested for data of only a single location in main memory. This means that accessing values at different addresses requires multiple trips and costs hundreds of cycles.

As a result caches are optimised for spatial locality of data. They assume that the related data is stored contiguously or nearby in memory and instead of bringing just the value at the requested address into the cache, the cache brings an entire block of data. The block size depends on the memory bus capacity, which is usually 64 bytes on present day hardware.

The CPU caches are also organized to cache these entire blocks rather than caching the exact value that the application requested. Within the cache, these blocks are called cache lines and these are also 64 bytes in size (on X64 hardware at least).

Consider this example: When an application requests an 8-byte long value stored at memory address 130, the hardware doesn't retrieve only those specific bytes. Instead, it fetches the entire 64-byte block starting at address 128 (the nearest 64-byte aligned address) and loads this block into one of the available cache lines. Subsequently, if the program attempts to access any value within the address range of 128-191, that data will already reside in the cache, eliminating the need for additional time-consuming trips to main memory.

Taking advantage of spatial and temporal locality results in improved system performance and better utilization of the memory bandwidth. But doing this requires writing code with mechanical sympathy. Let’s understand with some examples.

Mechanical Sympathy with the Caches

These caches in the CPU improve the system performance only when they are used effectively. They are designed anticipating temporal and spatial locality, but if things are more random, they become ineffective.

Taking advantage of the caches requires careful consideration of the data structure layout and memory access patterns. Let’s take a look at a few examples.

Cache Friendly Data Layout

If the restaurant did not store the ingredients carefully to optimize for spatial locality, they would be bringing in random sets of ingredients onto the kitchen floor. This would hamper the performance in two ways:

The random and unrequired set of ingredients waste precious space in the kitchen

And actual required ingredients still need to be fetched which would add further delays in order preparation.

Similarly, in the case of data structures, sometimes we need to optimize the layout of our data structures to take advantage of the spatial locality. The fields which are usually accessed together should be kept together in the object definition so that when one of those fields is accessed, the remaining fields are also cached as part of the same cache line.

Consider the following example of data structure layout with poor spatial locality. We have a GameEntity object which contains metadata about the entity such as its name and description, and then data about its current position and orientation.

Usually the player coordinates and orientation are accessed together and more frequently, while the metadata is accessed very rarely. The definition shown below will result in a lot of cache misses because the position_x and position_y fields lie in two different cache lines but the code will usually access them together. It will result in at least one cache miss and an extra memory access on every frame update.

/* Poor layout - frequently accessed fields scattered across cache lines */

struct GameEntity {

int id; // 4 bytes

char name[64]; // 64 bytes

float position_x; // 4 bytes - accessed every frame

char description[256]; // 256 bytes

float position_y; // 4 bytes - accessed every frame

char model_path[128]; // 128 bytes

float position_z; // 4 bytes - accessed every frame

float rotation; // 4 bytes - accessed every frame

};When position_x, position_y, position_z, and rotation are accessed together during each physics update (which happens thousands of times per second), the CPU must load 4 different cache lines despite only needing 16 bytes of actual data. A more cache friendly layout for this object is shown below:

/* Cache-friendly layout - group fields by access frequency */

struct GameEntity {

/* Hot path data (fits in one cache line) */

float position_x; // 4 bytes

float position_y; // 4 bytes

float position_z; // 4 bytes

float rotation; // 4 bytes

int id; // 4 bytes

/* Cold data - rarely accessed during main game loop */

char name[64]; // 64 bytes

char description[256]; // 256 bytes

char model_path[128]; // 128 bytes

};Now all frequently accessed fields are in a single 64-byte cache line, reducing memory traffic by ~75% during physics updates. This simple reorganization can yield 20-30% performance improvements in game engines and similar performance-critical applications.

A related optimization about data structure layout is keeping the read-only and read-write fields in separate cache lines. Whenever a field is modified, the entire cache line containing other fields and values becomes dirty. If some of the other processor cores have also cached the same cache line to access the read-only fields, their cache line becomes invalid. The next time these cores try to access this cache line, they will have to sync the latest value using cache coherency protocols, which adds a delay to the cache access process.

Cache Friendly Memory Accesses

Another situation where you can apply your understanding of cache mechanics is in optimizing your data access patterns - the specific ways your code retrieves and manipulates data. Let's illustrate this concept using our restaurant analogy.

Consider a variety of orders coming in: pizzas, sandwiches, fries, and so on. If these orders are sent to the chef randomly without any strategic organization, efficiency suffers. The chef might prepare a pizza, then switch to a burger, followed by fries, and later return to making another pizza. This approach poorly utilizes the "ingredients cache." When the first pizza was prepared, all pizza ingredients were readily accessible, and making a second pizza immediately would have been much faster. Instead, after the lengthy gap, those pizza ingredients may have been put away, requiring the chef to retrieve them all over again.

A more efficient approach would be to batch similar orders together. This strategy ensures that once the necessary ingredients are gathered (the cache is "primed"), they can be fully utilized to complete multiple similar orders quickly, significantly reducing overall preparation time.

Software applications frequently encounter similar inefficiencies. Developers often overlook their data access patterns when designing code. A typical scenario involves reading a specific value, which triggers the loading of an entire 64-byte cache line. However, instead of immediately utilizing the neighboring data now readily available in cache, the program executes unrelated operations. By the time the code requires additional values from that same cache line, it may have already been evicted, forcing another costly memory fetch.

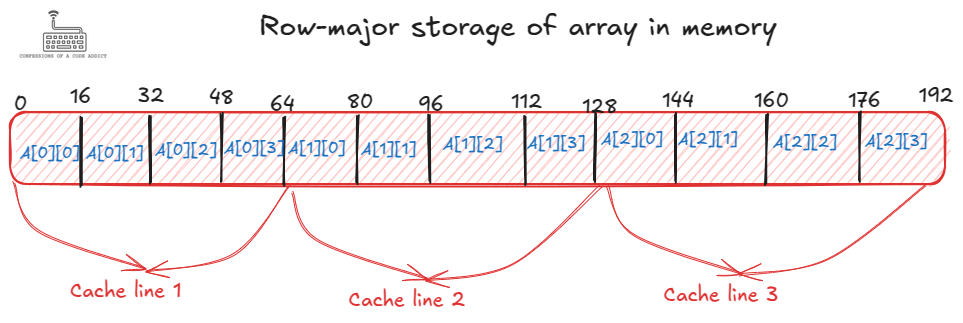

As a concrete example, consider the process of iterating through a multidimensional array. In memory, these arrays are stored in row-major order, which means all elements of a row are placed sequentially before beginning the next row. The diagram below illustrates how a 3×4 two-dimensional array is arranged in memory. Each array element occupies 16 bytes, and consequently, one complete row precisely fills a single cache line.

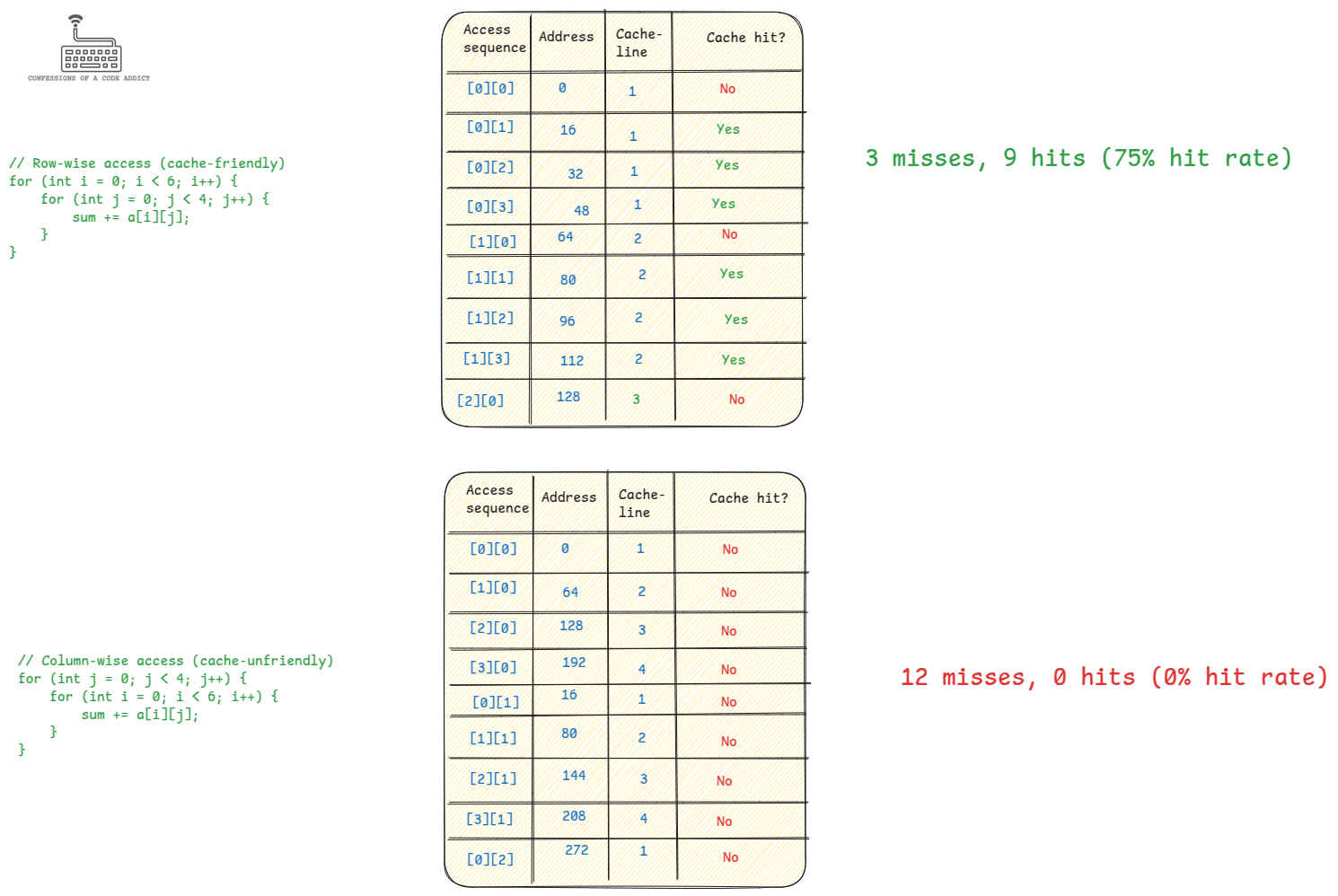

You can iterate through such an array in two ways:

Row-wise iteration: Here, you process every element within a row before advancing to the subsequent row. For instance, you would navigate from

a[0][0]througha[0][3], then proceed to the next row and continue froma[1][0]througha[1][3], and so forth.Column-wise iteration: With this method, you process all elements in a column before moving to the adjacent column. For example, you would traverse from

a[0][0]toa[2][0], then froma[0][1]toa[2][1], and continue this pattern.

Column-wise iteration can significantly degrade performance due to inefficient cache utilization. In our example, each row of the array perfectly fills one cache line. When iterating column-wise, accessing a[0][0] brings its entire row (through a[0][3]) into the cache. However, the next operation accesses a[1][0], which resides in a different cache line, triggering a cache miss. This pattern continues, causing cache misses at virtually every step. Furthermore, with larger arrays, by the time the iteration returns to access a[0][1], the original cache line containing a[0][0] may have already been evicted from the cache.

Row-wise iteration avoids this problem entirely. After accessing a[0][0], the three subsequent values (a[0][1], a[0][2], and a[0][3]) are already present in the same cache line. This spatial locality dramatically reduces cache misses and improves overall performance.

The following diagram illustrates the cache hits for row wise and column wise iterations through it:

I will also note that in some cases optimizing compilers can detect such poor data access patterns and they can generate code that is cache friendly.

Also, modern processors have cache prefetching which means that the hardware detects the access patterns and starts to prefetch the cache lines before they are accessed to prevent cache misses. These are not covered in this article.

Speculating the Order Demands

The final hardware optimization we are going to learn about is speculative execution. Let’s first understand what it is through the restaurant analogy.

At the drive-through, the restaurant notices a consistent pattern: customers quickly select their main item (burger, chicken sandwich, etc.) but then spend considerable time deliberating over sides and drinks from the many available options. It means that while the customer makes a decision, the order pipeline is stalled and the restaurant is losing on the potential order revenue.

To avoid this pipeline stall the restaurant implements a predictive system:

As soon as a customer orders a main item (e.g., "I'll have a cheeseburger..."), the kitchen begins preparing both the burger AND the most commonly ordered sides (fries)

This "speculative execution" happens while the customer is still deciding ("...and for my side, let me see...")

If the customer chooses fries (as predicted), their complete order is ready much faster

If they choose something else ("...I'll take onion rings instead"), the prepared fries are wasted and the correct side must be prepared from scratch. This misprediction causes extra delays to the delivery of the order, making the customer wait longer than usual.

The restaurant further refines this system by:

Tracking which sides are most commonly ordered with each main item

Noting regular customers' preferences to make better predictions

Analyzing time-of-day patterns (e.g., morning customers more likely to choose hash browns over fries)

However, this speculation comes with costs. Whenever the prediction is wrong, whatever they had on the order pipeline needs to be thrown away and they have to start from scratch to prepare the right item. This adds extra delay to the order.

Speculative Execution in Processors

The processor also does something similar in the form of speculative instruction execution. While the hardware can execute instructions very fast, fetching new instructions from memory takes time. To keep the execution resources in the processor busy, the instructions must be supplied at a fast rate.

If programs had a linear structure where one instruction followed another, this wouldn’t be a problem. The processor already prefetches sequential instructions and keeps them cached ahead of time to keep the pipeline fed.

However, program structure is not always linear. All non-trivial programs consist of branches in the form of if/else blocks, switch cases, loops, and function calls. For example, in the case of an if/else conditional block, the value of the condition decides which block of code needs to be executed next.

The branch condition itself involves one or more instructions which need to be executed before the processor knows which instructions to fetch next. If the processor waits for that to occur, the pipeline will not be fetching and decoding any new instructions until that time, resulting in a significant drop in the instruction throughput. And the performance penalty doesn’t end there - after the branching direction is determined and the location of the next instruction is known, the instructions from that address need to be fetched and decoded, which is another level of delay (especially if those instructions are not in the cache).

To avoid this performance degradation, the CPU implements branch predictors to predict the target address of branches. This is similar to how the restaurant used a predictive system to predict the side orders to keep the order pipeline busy.

But, whenever the branch predictor is wrong, there is a penalty on performance as well. When the processor finally evaluates the branch condition and finds that the branch predictor did a misprediction, it has to flush the pipeline because it was processing the wrong set of instructions. Once the pipeline is flushed, the processor starts to execute the right set of instructions. On modern X64 processors this misprediction can cause a performance penalty of ~20-30 cycles.

Modern processors have sophisticated branch predictors which can learn very complex branching patterns and offer accuracy of up to ~95%. But they still depend on predictable branching patterns in code. Let’s talk about how this knowledge about branch predictors helps us develop mechanical sympathy.

Mechanical Sympathy for Speculative Execution

Just as the restaurant benefits when customer order patterns are predictable, our code performs best when branch patterns are predictable to the CPU.

Branch predictors are very sophisticated these days and explaining them is out of the scope. But it helps to have some intuition about how they work. Under the hood, the branch predictor tries to associate the jump result (jump taken/not taken) with the address of the branch instruction. It initially starts with a default guess (e.g. branch not taken) and then based on the actual branch condition result it updates itself. So if the branch is usually taken, the branch predictor eventually learns to predict that.

However, if the branching condition is random, the predictor will have a hard time learning it. For example, consider the following code:

int abs(int x) {

if (x >= 0) {

return x;

}

return -x;

}Here, the result of the if condition is always going to be dependent on the value of x. If those values are random in nature, then the branch predictor will have a hard time predicting the branch. One solution is to convert such code into branch free code. For example:

static int abs(int x) {

int y = x>>31;

return (x^y)-y;

}Your compiler and runtime may already do these kinds of optimizations behind the scenes. But, being aware of how branch prediction works helps us structure algorithms to avoid frequent mispredictions in critical loops. Just as a smart restaurant manager considers customer decision patterns when designing their workflow, a performance-conscious developer considers branch patterns when optimizing critical code paths.

Another example is when you have to process objects of different types in a loop. At each iteration of the loop you need to check the type of the object to decide how to handle its processing. If the object types are randomly distributed, then the branch predictor will have a hard time predicting the right outcome and the performance will suffer. For example:

for (Order o : orders) {

switch (o.type) {

case DOMESTIC_BULK:

handleBulkOrder(o);

break;

case DOMESTIC_RETAIL:

handleRetailOrder(o);

break;

case INTERNATIONAL_BULK:

handleInternationalBulk(o);

break;

case INTERNATIONAL_RETAIL:

handleInternationalRetail(o);

break;

// Add other cases as needed

default:

handleUnknownOrder(o);

break;

}

}In such a case, it helps to first group the objects by their type and then have separate loops for each type of the object. That way there is no branching involved as you are handling objects of the same kind in the loop.

There are many such situations where the branch misprediction can become a bottleneck and various techniques exist that people utilize to improve the performance. While this article doesn’t have space to cover everything, hopefully it has made it clear the importance of mechanical sympathy and how it plays out in every line of code that we write.

Conclusion

Understanding how processors work "under the hood" is essential for writing truly performant code. As we've seen through our restaurant analogy, modern CPUs are complex systems with many optimizations that work best when code aligns with their expectations:

Instruction pipelining and superscalar execution enable multiple instructions to be processed simultaneously, but only when we provide independent operations

Memory hierarchy and caching dramatically reduce latency, but only when our data structures and access patterns exhibit good locality

Speculative execution keeps the pipeline full through branches, but becomes a performance liability with unpredictable branching patterns

Mechanical sympathy doesn't mean you need to write assembly code or understand every transistor. It means being aware of these fundamental hardware behaviors and how they interact with your higher-level code.

The most effective optimization approach is to:

Write clean, maintainable code first

Profile to identify performance bottlenecks

Apply mechanical sympathy principles specifically to those hot spots

As software engineers, we don't need to fight the hardware - we can work with it. When we align our algorithms and data structures with the grain of the processor rather than against it, we achieve that satisfying moment when a program that once took seconds now completes in milliseconds.

Acknowledgements

Huge thanks to Sanjeev Dwivedi in reviewing and providing feedback on this article. Sanjeev is a technology leader with vast experience in product management and leading high performance teams at companies such as VMWare and Microsoft. You can find him on Linkedin here.