Why This Python Performance Trick Doesn’t Matter Anymore

A deep dive into Python’s name resolution, bytecode, and how CPython 3.11 quietly made a popular optimization irrelevant.

The trick to performance optimization is mechanical sympathy: writing code that makes it easier for the hardware to execute it efficiently. In the past, CPU microarchitectures evolved so quickly that an optimization might become obsolete in just a few years because the hardware had simply become better at running the same code.

The same idea applies when writing code in interpreted languages like Python. Sometimes you need to use tricks that help the language’s virtual machine (VM) run your code faster. But just like hardware improves, the Python VM and compiler also keep evolving. As a result, optimizations that once made a difference may no longer matter.

One such optimization trick in Python is to create a local alias for a function you’re calling repeatedly inside a hot loop. Here’s what that looks like:

# Benchmark 1: Calling built-in len directly
def test_builtin_global(lst: list):
    for _ in range(1_000_000):
        len(lst)

# Benchmark 2: Aliasing built-in len to a local variable
def test_builtin_local(lst: list):
    l = len
    for _ in range(1_000_000):
        l(lst)

This trick works because of how Python resolves variable names. Creating a local alias replaces a global lookup with a local one, which is much faster in CPython. But is it still worth doing?

I benchmarked this code across recent Python releases, and the results suggest that the answer is: not really. So what changed?

To answer that, we’ll need to dig into how Python resolves names during execution, and how that behavior has evolved in recent versions. In particular, we’ll explore:

Why this trick worked in earlier versions of Python

What changed in recent CPython releases to make it mostly obsolete

Whether there are still edge cases where it helps


How Python Resolves Local and Global Names

To understand why this trick made a difference in performance, we need to look at how the Python interpreter resolves variable names, and specifically how it loads locally versus globally scoped objects.

Python uses a stack-based virtual machine. This means it evaluates expressions by pushing operands onto a stack and performing operations by popping those operands off. For example, to evaluate a + b, the interpreter pushes a and b onto the stack, pops them off, performs the addition, and then pushes the result back on.
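You can watch this push/pop sequence with the standard dis module. The snippet below is a small illustration; exact opcode names differ across CPython versions (for example, BINARY_ADD before 3.11 and BINARY_OP from 3.11 on, and newer releases may fuse the two loads into a single superinstruction).

```python
import dis

def add(a, b):
    return a + b

# The load instruction(s) push a and b, the binary-op instruction pops both
# and pushes their sum, and the return instruction pops that result.
dis.dis(add)

opnames = [instr.opname for instr in dis.get_instructions(add)]
print(opnames)
```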

Function calls work the same way. For a call like len(lst), the interpreter pushes both the function object len and its argument lst onto the stack, then pops and uses them to execute the function.

But where does the interpreter find objects like len or lst, and how does it load them?

The interpreter checks three different places when resolving names:

Locals: A table of locally scoped variables, including function arguments. In CPython, this is implemented as an array (shared with the VM stack). The compiler emits the LOAD_FAST instruction with a precomputed index to retrieve values from this table, which makes local lookups very fast.

Globals: A dictionary of global variables, including imported modules and functions. Accessing this requires a hash lookup using the variable’s name, which is slower than a local array access.

Builtins: Functions like len, min, and max. These live in a separate dictionary and are checked last if the name isn’t found in globals.
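A function’s code object records which names go where. In the sketch below (f is just an example function), co_varnames lists the locals, here only the argument lst, while co_names lists the names resolved through globals and builtins, here len. The last two lines confirm that len lives in the builtins module’s namespace rather than in the module’s globals.

```python
import builtins

def f(lst: list) -> int:
    return len(lst)

code = f.__code__
print("locals (co_varnames):", code.co_varnames)
print("global/builtin names (co_names):", code.co_names)
print("'len' in module globals?", "len" in f.__globals__)
print("'len' in builtins?", "len" in vars(builtins))
```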

With that understanding of how name resolution works in CPython, let’s now compare the disassembly of the two versions of our benchmark function.

For more comprehensive coverage of the CPython virtual machine, check out my article on its internals.

Dissecting Unoptimized Python Bytecode

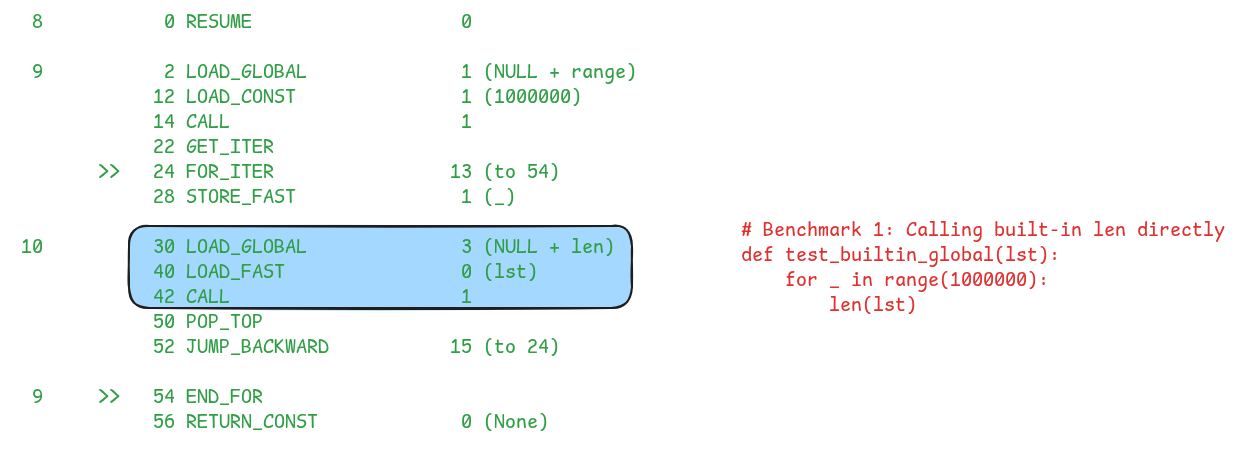

Let’s take a look at what’s actually happening under the hood. We can use Python’s built-in dis module to view the bytecode generated by our functions. Below is the disassembly of the slower version, the one that calls len directly:

Let’s break down what’s happening in those highlighted instructions:

LOAD_GLOBAL: This instruction looks up the name len in the global scope (falling back to builtins) and pushes the resulting object onto the stack. In the disassembly, you’ll see something like LOAD_GLOBAL 3 (NULL + len). That 3 is the argument passed to the instruction. Since CPython 3.11, its lowest bit is a flag telling the interpreter to also push a NULL (part of the calling convention), and the remaining bits, 3 >> 1 = 1, are an index into co_names, a tuple of all the names the function uses for global or builtin lookups. So, co_names[1] gives 'len'. The interpreter retrieves the string 'len', hashes it, and performs a dictionary lookup in globals(), falling back to builtins if needed. This multi-step lookup makes LOAD_GLOBAL more expensive than other name resolution instructions. (We will look at how LOAD_GLOBAL is implemented in CPython right after this.)

LOAD_FAST: After loading the function to be called, the interpreter needs to push its arguments. In this case, len takes only one argument, the list object. This is done using the LOAD_FAST instruction, which loads the lst object from the local variables using a direct index into an array, so there’s no hashing or dictionary lookup involved. It’s just a simple array access, which makes it very fast.

CALL: Next, the interpreter performs the function call using the CALL instruction. The number after CALL tells the interpreter how many arguments are being passed, so CALL 1 means one argument is being supplied. To execute the call, the interpreter pops that many arguments from the stack, followed by the function object itself. It then calls the function with those arguments and pushes the return value back onto the stack.

One of the costlier steps here is LOAD_GLOBAL, both in terms of what it does and how it’s implemented. We’ve already seen that it involves looking up a name in the co_names tuple, hashing it, and checking two dictionaries, globals and builtins, before it can push the result onto the stack. All of that makes it noticeably slower than a simple local access.

To understand just how much work it does behind the scenes, let’s now take a look at its actual implementation in CPython.

The code is taken from the file generated_cases.c.h, which contains all the opcode implementations. Let’s focus on the highlighted parts that I have numbered.

The first highlighted block deals with instruction specialization. As we will see later, the generic way of looking up globals is slow because it does not know which global symbol we are trying to load and from where. This information is only available to the interpreter at runtime. Instruction specialization caches this dynamic information and creates a specialized instruction, making future executions of the same code faster. We will circle back to this in a later section. Note that this optimization was not present before CPython 3.11.

The second highlighted block is where the actual global lookup happens. It’s broken into two parts, which I’ve marked with arrows labeled 3 and 4.

First, the interpreter needs to figure out which name it’s supposed to look up. The LOAD_GLOBAL instruction receives an argument (oparg); shifting off its lowest bit, the NULL flag (oparg >> 1), gives an index into the co_names tuple, where all global and builtin names used in the function are stored. The interpreter calls the GETITEM macro to fetch the actual name (a string object) using this index.

Once the name is retrieved, the interpreter calls _PyEval_LoadGlobalStackRef. This function looks for the name in the globals dictionary first. If it’s not found there, it falls back to the builtins dictionary.

Let’s zoom into this part and see the code for doing this globals and builtins lookup. _PyEval_LoadGlobalStackRef simply delegates to a function called _PyDict_LoadGlobalStackRef, defined in dictobject.c, so let’s directly look at its implementation (shown in the figure below).

Here’s what is happening in this code:

First, the function computes the hash of the name which is being looked up. This hash determines the index into the dictionary’s internal hash table.

Next, the function checks the globals dictionary.

If the name isn’t found in globals, the function falls back to checking the builtins dictionary.
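The lookup order can be modeled in a few lines of Python. This is an illustrative sketch only: resolve_global is a hypothetical helper, and the real C code probes the dictionaries’ internal hash tables directly, reusing one hash for both probes.

```python
import builtins

_MISSING = object()  # sentinel distinguishing "absent" from a stored None

def resolve_global(name: str, globals_dict: dict, builtins_dict: dict = vars(builtins)):
    """Model of the globals-then-builtins lookup performed by a generic LOAD_GLOBAL."""
    value = globals_dict.get(name, _MISSING)        # 1. probe globals first
    if value is _MISSING:
        value = builtins_dict.get(name, _MISSING)   # 2. fall back to builtins
    if value is _MISSING:
        raise NameError(f"name {name!r} is not defined")
    return value

print(resolve_global("len", {}))                     # found in builtins
print(resolve_global("len", {"len": "shadowed"}))    # found in globals first
```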

From this entire discussion of global lookups in CPython, a few things are worth highlighting:

The lookup requires the name’s hash, so when you call a global function repeatedly in a loop, the runtime needs that hash on every iteration. That said, string objects cache their hash after it is first computed, so the overhead isn’t as bad as it might seem; the dictionary probes themselves remain.

Another thing to note is that builtins are checked last. So even when you call a builtin function, the runtime checks globals first and only then builtins. In a hot loop, this extra work adds up.
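Checking globals first is required for correctness, not just a convention: a module-level name shadows a builtin of the same name. The demonstration below uses exec with a fresh namespace dictionary (the names ns and count are just for illustration) so the function’s globals can be modified from outside.

```python
source = """
def count(items):
    return len(items)
"""

ns: dict = {}
exec(source, ns)                 # `count` now uses `ns` as its globals

print(ns["count"]([1, 2, 3]))    # → 3  (len found via the builtins fallback)

ns["len"] = lambda items: -1     # a global that shadows the builtin
print(ns["count"]([1, 2, 3]))    # → -1 (the global wins because globals are checked first)
```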

Next, we’ll dissect the disassembly of the code with the optimization in place.

Dissecting Optimized Python Bytecode

Let’s see how using a local alias actually changes the bytecode, and why it makes the optimized version faster. The following figure shows the bytecode disassembly for this version:

Let’s focus on the highlighted instructions that are responsible for the call to l, which is the alias we created for len. The key difference from the unoptimized version is that this one uses the LOAD_FAST instruction instead of LOAD_GLOBAL to load the function object onto the stack. So, let’s look at how LOAD_FAST is implemented in CPython (shown in the figure below).

You can see how short and tight this implementation is. It performs a simple array lookup using the index passed to it as its argument. Unlike LOAD_GLOBAL, which involves multiple function calls and dictionary lookups, LOAD_FAST doesn’t call anything. It’s just a direct memory access, which makes it extremely fast.

By now, you should have a clear understanding of why this optimization trick works. By creating a local variable for the len builtin, we turned an expensive global lookup into a fast local lookup, which is what makes the performance difference.

But as we saw in the benchmark results, starting with CPython 3.11, this optimization no longer makes a meaningful difference in performance. So, what changed? Let’s see that next.

Inside CPython's Instruction Specialization

CPython 3.11 introduced a major optimization called the specializing adaptive interpreter. It addresses one of the core performance challenges in dynamically typed languages. In such languages, bytecode instructions are type-agnostic, meaning they don’t know what types of objects they will operate on. For example, CPython has a generic instruction called BINARY_OP, which is used for all binary operations like +, -, *, and /. It works with all object types, including ints, strings, lists, and so on. Therefore, the interpreter has to first check object types at runtime and then dispatch to the appropriate function accordingly.

So how does instruction specialization work? After a bytecode instruction has executed a few times, the interpreter captures some runtime information about it, such as the types of the objects involved or the specific operation being performed. Using that information, it replaces the slow generic instruction with a faster specialized instruction.

Thereafter, whenever that instruction executes again, the interpreter runs the specialized version. Inside the specialized instructions, the interpreter always checks that the conditions for specialization still hold. If the conditions have changed, e.g., the types are no longer the same, the interpreter deoptimizes and falls back to the slower generic instruction.
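The specialize, guard, and deoptimize cycle can be illustrated with a deliberately simplified toy. AdaptiveBinaryAdd is a hypothetical class that shares only the idea with CPython’s C implementation: it observes operand types, switches to an int-only fast path guarded by a type check, and falls back to the generic path when the guard fails. Unlike CPython, it specializes after a single observation instead of a warm-up period.

```python
class AdaptiveBinaryAdd:
    """Toy model of one specializing `a + b` instruction site (not CPython code)."""

    def __init__(self):
        self.specialized_for = None  # operand type we specialized for, if any
        self.deopts = 0              # how many times a guard failed

    def execute(self, a, b):
        if self.specialized_for is int:
            # Guard: the fast path is only valid while both operands are exact ints.
            if type(a) is int and type(b) is int:
                return int.__add__(a, b)  # skip generic dispatch
            # Guard failed: deoptimize back to the generic instruction.
            self.specialized_for = None
            self.deopts += 1
        # Generic path: full dynamic dispatch on the operand types.
        result = a + b
        # Record what we observed so the next execution can use the fast path.
        if type(a) is int and type(b) is int:
            self.specialized_for = int
        return result


site = AdaptiveBinaryAdd()
site.execute(1, 2)        # generic path; specializes for int
site.execute(3, 4)        # int fast path
site.execute("a", "b")    # guard fails; deoptimizes and uses the generic path
```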

The LOAD_GLOBAL instruction is also a generic instruction. In this case, the interpreter has to do a lot of additional work, such as looking up the name of the symbol, computing the hash, and finally performing lookups in the globals and builtins dictionaries. But once the interpreter sees that you’re accessing a specific builtin, it specializes LOAD_GLOBAL into LOAD_GLOBAL_BUILTIN.
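On CPython 3.11 and later you can observe this yourself: dis.get_instructions (and dis.dis) accept adaptive=True, which shows the instructions the interpreter is currently using, specialized ones included. The sketch below warms up a small function (count_len is a hypothetical name, and the loop count is arbitrary) and prints its LOAD_GLOBAL family before and after. On a typical build you would expect LOAD_GLOBAL to become LOAD_GLOBAL_BUILTIN after the call, but warm-up thresholds are an implementation detail and some builds (such as free-threaded ones) may specialize differently, so treat this as something to try rather than a guaranteed output.

```python
import dis
import sys

def count_len(lst: list) -> None:
    for _ in range(1_000):
        len(lst)

def load_global_variants(func) -> list[str]:
    """Opnames of the LOAD_GLOBAL family in func, as the interpreter currently sees them."""
    if sys.version_info >= (3, 11):
        # adaptive=True shows specialized instructions in place of the generic ones
        instrs = dis.get_instructions(func, adaptive=True)
    else:
        instrs = dis.get_instructions(func)  # no specialization before 3.11
    return [i.opname for i in instrs if i.opname.startswith("LOAD_GLOBAL")]

print("before warm-up:", load_global_variants(count_len))
count_len([1, 2, 3])  # run the loop so the interpreter has a chance to specialize
print("after warm-up: ", load_global_variants(count_len))
```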

The LOAD_GLOBAL_BUILTIN instruction is optimized to check the builtins dictionary directly, i.e., it skips checking the globals dictionary. It also caches the index of the specific builtin we are trying to look up, which avoids the hash computation. The result is that it behaves almost like a LOAD_FAST, performing a fast array lookup instead of a costly dictionary access. The following figure shows its implementation.

Let’s break down the highlighted parts:

First, the instruction performs some checks to ensure that the conditions under which LOAD_GLOBAL was specialized into this version still hold. If they no longer hold, it falls back to the generic LOAD_GLOBAL implementation.

After that, it reads the cached index value. This index was derived from the hash computed the last time the generic LOAD_GLOBAL executed, which means this instruction is specialized for looking up only the len function.

Next is the lookup in the builtins dictionary. This requires first getting access to the keys within the dictionary.

From the keys, it gets the list of entries in the internal hash table and indexes into it using the cached index value. If it finds an entry, that is the object we were trying to load.
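To make the guard-plus-cached-index idea concrete, here is a toy Python model. VersionedDict and LoadGlobalBuiltinCache are hypothetical classes invented for illustration: keys_version stands in for the dictionary keys version that CPython’s guards compare, and the _entries list stands in for the dictionary’s internal entry table. Real CPython does all of this in C with different data structures, and also changes the version on deletions, which this toy does not support.

```python
class VersionedDict:
    """Toy dict whose keys_version changes whenever a new key is added."""

    def __init__(self, items=None):
        self._entries = []    # [key, value] pairs in insertion order (like a dict's entry table)
        self._positions = {}  # key -> position in _entries (stands in for the hash index)
        self.keys_version = 0
        for key, value in (items or {}).items():
            self[key] = value

    def __setitem__(self, key, value):
        if key in self._positions:
            # Updating a value leaves the set of keys, and so the version, unchanged.
            self._entries[self._positions[key]][1] = value
        else:
            self._positions[key] = len(self._entries)
            self._entries.append([key, value])
            self.keys_version += 1

    def __contains__(self, key):
        return key in self._positions

    def lookup(self, key):
        """Slow path: hash-based lookup returning (position, value)."""
        position = self._positions[key]
        return position, self._entries[position][1]

    def entry_value(self, position):
        """Fast path: read an entry directly by its position, no hashing."""
        return self._entries[position][1]


class LoadGlobalBuiltinCache:
    """Toy model of one LOAD_GLOBAL site that specializes for a builtin name."""

    def __init__(self, name):
        self.name = name
        self.cache = None  # (globals_version, builtins_version, position) once specialized

    def load(self, globals_d, builtins_d):
        if self.cache is not None:
            g_version, b_version, position = self.cache
            # Guards: neither dict has gained keys since we specialized.
            if globals_d.keys_version == g_version and builtins_d.keys_version == b_version:
                return builtins_d.entry_value(position)
            self.cache = None  # a guard failed: deoptimize
        # Generic path: globals first, then builtins.
        if self.name in globals_d:
            return globals_d.lookup(self.name)[1]
        position, value = builtins_d.lookup(self.name)
        self.cache = (globals_d.keys_version, builtins_d.keys_version, position)
        return value


g = VersionedDict()
b = VersionedDict({"len": len})
site = LoadGlobalBuiltinCache("len")
site.load(g, b)        # generic lookup, then specializes
site.load(g, b)        # served from the cached position
g["len"] = "shadowed"  # adds a key to globals, bumping its version
site.load(g, b)        # guard fails, deoptimizes, and finds the global
```

Note how a value update in builtins would not invalidate the cache in this model: the cached position still points at the same entry, which now holds the new value.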

As you can see, an expensive hash table lookup has turned into an array lookup using a known index, which is almost the same amount of work as the LOAD_FAST instruction. This is why, in newer CPython releases, we no longer need to manually alias a global or builtin function to a local variable in cases like this one: the interpreter optimizes the lookup automatically.

But is this optimization of creating a local alias really obsolete? Maybe not. Let me show you another benchmark.

Benchmarking Imported Functions Vs Aliases

Let’s now look at a similar benchmark, this time involving a function from an imported module rather than a builtin. Here’s what the code looks like:

import timeit
import math

# Benchmark 1: Calling math.sin directly
def benchmark_math_qualified():
    for i in range(1000000):
        math.sin(i)

# Benchmark 2: Aliasing math.sin to a local variable
def benchmark_math_alias():
    mysin = math.sin
    for i in range(1000000):
        mysin(i)

# Benchmark 3: Calling sin imported via `from math import sin`
from math import sin

def benchmark_from_import():
    for i in range(1000000):
        sin(i)

There are three benchmarks:

benchmark_math_qualified: calls math.sin directly

benchmark_math_alias: creates a local alias mysin for math.sin

benchmark_from_import: uses sin imported via from math import sin

And the following table shows the results across the recent CPython releases.

In this case, we see that calling math.sin (fully qualified name) is slowest across the releases and creating an alias is fastest. While calling “math.sin” directly has gotten faster in recent Python versions, it still lags behind the alternatives in performance.

The performance gap here comes from how the function object is resolved when using a fully qualified name like math.sin. It turns into a two-level lookup. For example, the following figure shows the disassembly for calling math.sin(10).

Notice that now the interpreter has to execute two instructions to load the function object on the stack: LOAD_GLOBAL followed by LOAD_ATTR. LOAD_GLOBAL loads the math module object on the stack from the global scope. Then, LOAD_ATTR performs a lookup for the sin function in the math module and pushes the function object on the stack.

So, naturally, this requires more work, and the work grows with the number of attribute levels. For example, foo.bar.baz() requires three levels of lookups.
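The extra levels are visible in the bytecode. In this sketch, callee_loads is a hypothetical helper that lists the name- and attribute-loading instructions in a function, and foo.bar.baz is a made-up chain that is only disassembled, never executed. CPython 3.11 and earlier use LOAD_METHOD for the last attribute of a method-style call, while 3.12 and later fold that into LOAD_ATTR.

```python
import dis
import math

def qualified(x):
    return math.sin(x)      # two loads: the module, then its attribute

def deep(x):
    return foo.bar.baz(x)   # hypothetical chain: three loads (never called)

def callee_loads(func) -> list[tuple[str, str]]:
    """List (opname, name) for the global and attribute loads in func."""
    return [
        (instr.opname, instr.argval)
        for instr in dis.get_instructions(func)
        if instr.opname in ("LOAD_GLOBAL", "LOAD_ATTR", "LOAD_METHOD")
    ]

print(callee_loads(qualified))
print(callee_loads(deep))
```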

With recent Python releases, fully qualified calls have also become faster thanks to instruction specialization. However, the interpreter still has to execute multiple instructions to load the function, whereas with a local alias it executes a single LOAD_FAST instruction.

Whether it’s worth trading the readability of a fully qualified name, such as math.sin for a small speedup by aliasing it to mysin, depends on your goals. If that part of the code is performance-sensitive, and your profiling shows this line is a bottleneck, then it’s worth considering. Otherwise, readability might matter more.

Wrapping Up

Aliasing global functions to local variables used to be a meaningful optimization. In earlier versions of Python, global lookups involved more overhead, and avoiding them made a measurable difference. With recent improvements in CPython, especially instruction specialization, that gap has narrowed for many cases.

Even so, not all lookups are equal. Accessing functions through a module or a deep attribute chain can still carry overhead. Creating a local alias or using from module import name continues to be effective in those situations.

The larger point is that optimizations don’t last forever. They depend on the details of the language runtime, which keeps evolving. What worked in the past might no longer matter today. If you want performance, it helps to understand how things actually work. That context makes it easier to know which tricks are worth keeping, and which ones you can leave behind in favor of cleaner, simpler code.