Understanding Computer Organization from First Principles

A ground-up model of how computers execute code, starting from logic gates and ending at the instruction cycle.

“Do not try to bend the spoon. That's impossible. Instead, only try to realize the truth... there is no spoon.” — The Matrix

Most programmers work comfortably inside layers of abstraction: writing code, calling APIs, using tools, without needing to know what happens underneath. But systems-level thinking is about lifting the hood. It means understanding how things actually work, from source code all the way down to silicon.

This article is where that starts. We’ll build a concrete mental model of how a computer executes instructions, beginning at the hardware level with logic gates and circuits. From there, we’ll step through how those circuits form an ALU, how data moves through registers, and how a CPU follows instructions.

But that’s only part of the story. We’ll also look at how this hardware model shapes everything above it. How compilers turn code into machine instructions. How executables are structured. How the OS lays out a process in memory. None of these make full sense without the layer below.

If you want to understand systems, this is the foundation. You don’t need to memorize how every part works. What matters is building a model that helps you reason through the system when things break or behave in unexpected ways. That’s what systems-level thinking is about.

Let’s get started.

Building a Very Simple Processor

We will learn how the hardware executes code by doing a thought exercise where we construct a very simple processor based on the computational requirements of software, such as a simple calculator.

Even a calculator can perform a myriad of computations, so to begin with, we will focus on just one: adding two integers. Essentially, we want a simple computer capable of expressing and performing the following computation:

int a = 10

int b = 20

int sum = a + b

To be able to do this, what capabilities do we need in the hardware?

We need a way to represent information: How do we represent data such as the integers 10 and 20 here, and also how do we tell the hardware that it has to add the two values?

Storing the input and output data: Where do these values of a and b live?

Doing the actual computation: How do we perform the addition in the hardware?

This line of inquiry leads us to the work done by Claude Shannon where we will find all our answers.

Encoding Information using Binary

You might be familiar with Claude Shannon’s work on information theory, which is the foundation underlying all modern communication systems and data compression techniques.

As part of this work, he came up with the idea of using electrical switches to encode information as binary data. Essentially, if we represent the state of the circuit when it is closed as 1 and when it is open as 0, then we can encode 1 bit of information in that circuit, and by combining multiple such circuits, we can encode more information.

By encoding information in binary, we can leverage binary arithmetic and Boolean algebra to implement complex computational and logical operations. This is what makes general-purpose computation, and therefore modern computers, possible.
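To make the encoding concrete, here is a minimal C sketch that writes out the bit pattern of a small integer, one character per "switch". The helper name `to_binary8` and the choice of an 8-bit width are assumptions made for illustration only.

```c
#include <stdint.h>

/* Write the 8 bits of `value` into `out` as '0'/'1' characters,
   most significant bit first. `out` must have room for 9 chars. */
void to_binary8(uint8_t value, char out[9]) {
    for (int i = 0; i < 8; i++) {
        /* Bit 7 lands in out[0], bit 0 lands in out[7]. */
        out[i] = ((value >> (7 - i)) & 1) ? '1' : '0';
    }
    out[8] = '\0';
}
```

With this helper, the integers from our example come out as 00001010 (10) and 00010100 (20): eight switches each, some closed and some open.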

Transistors as Digital Switches

But modern processors aren’t built using electrical switches; they are built using digital switches that can be turned on and off automatically. This is made possible through transistors.

Transistors are semiconductor devices that act as electronic switches. They conduct current only when the voltage applied to them is above or below a certain threshold, depending on their configuration. This ability to switch on or off based on voltage levels makes them ideal for implementing digital circuits. By precisely controlling the flow of current through circuits built from transistors, digital switches are created. These switches form the fundamental building blocks of all modern chips.

Transistors to Logic Gates

Transistors are the bottommost layer of the computing stack, on which everything else is built. They are combined in specific configurations to build reusable components called logic gates.

You can think of gates as mathematical functions (or, if you prefer, functions in code) that take one or more inputs and produce an output. Because we are working with digital circuits, all the inputs and outputs here are 1s and 0s.

For instance, the NOT gate takes one parameter as input and produces one output. As its name suggests, it inverts its input. So NOT(1) = 0 and NOT(0) = 1.

Similarly, there is an AND gate that takes two inputs and produces one output (you can also make an AND gate that takes a larger number of inputs). Mathematically, it works like this:

AND(0, 0) = 0

AND(0, 1) = 0

AND(1, 0) = 0

AND(1, 1) = 1

We also have an OR gate which works like this:

OR(0, 0) = 0

OR(0, 1) = 1

OR(1, 0) = 1

OR(1, 1) = 1

Finally, there is a very useful gate called XOR:

XOR(0, 0) = 0

XOR(0, 1) = 1

XOR(1, 0) = 1

XOR(1, 1) = 0

Boolean algebra establishes that by combining these basic operations, it is possible to compute any Boolean function, that is, any mapping from a fixed number of input bits to output bits. This is how the computational circuits within the processor are designed. Let’s see how.
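If you prefer code to truth tables, the gates can be sketched as plain C functions over single bits. The function names are hypothetical. The XOR here is deliberately built out of the other three gates, as one small illustration of composing basic operations into a new one.

```c
/* Each gate is a function over single bits (0 or 1). */
int not_gate(int a)        { return a ? 0 : 1; }
int and_gate(int a, int b) { return (a && b) ? 1 : 0; }
int or_gate(int a, int b)  { return (a || b) ? 1 : 0; }

/* XOR composed from the other gates: (a OR b) AND NOT(a AND b). */
int xor_gate(int a, int b) {
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)));
}
```

Calling `xor_gate` on all four input combinations reproduces the XOR table above.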

Building Computational Circuits from Logic Gates

So we started with the goal of implementing the functionality to add two integers in our simple hardware. And now we know that it can be accomplished using logic gates. The logic circuit which implements binary addition is called an adder. But, before looking at the circuit itself, let’s talk about binary addition.

Again, we can think of it like a mathematical or programming function. It receives two bits as input and produces two bits as output. One of the output bits represents the sum of the two input bits, and the 2nd output bit represents the overflow or carry of the result.

add(0, 0) = sum: 0, carry: 0

add(0, 1) = sum: 1, carry: 0

add(1, 0) = sum: 1, carry: 0

add(1, 1) = sum: 0, carry: 1

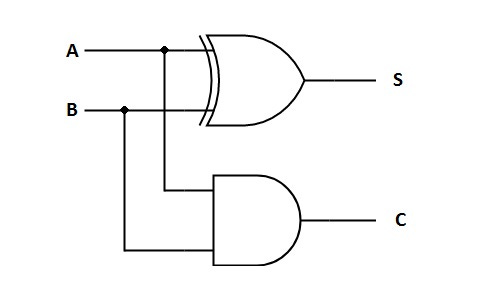

What you will see is that the mapping of the input bits to the sum value is identical to that of the XOR gate, while the mapping of the carry bit is identical to that of the AND gate. It means that an adder can be implemented by sending the inputs to an AND gate and an XOR gate, like the following figure:

This design is called a half adder because it only does half the job: it has no input for a carry coming from a previous position, so on its own it cannot add multibit numbers. To add two multibit numbers, we need three inputs at each position: the two input bits from the numbers at that position, and one carry bit from the addition at the previous position. For this, a slightly modified circuit is used, called the full adder.

I will not show its construction because this article is not about digital design, but the following circuit shows what it looks like. By chaining these adders together, we can create circuits capable of adding multibit numbers.
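For readers who find code easier to follow than circuit diagrams, here is a rough C model of the adders. It is only a sketch: the full adder shown is one common construction (two half adders plus an OR), and the names `AdderOut`, `half_adder`, `full_adder`, and `add8`, as well as the 8-bit width, are assumptions made for illustration.

```c
#include <stdint.h>

typedef struct { int sum; int carry; } AdderOut;

/* Half adder: sum is the XOR of the inputs, carry is the AND of the inputs. */
AdderOut half_adder(int a, int b) {
    AdderOut out = { a ^ b, a & b };
    return out;
}

/* Full adder: two half adders, with an OR merging the two carries. */
AdderOut full_adder(int a, int b, int carry_in) {
    AdderOut first  = half_adder(a, b);
    AdderOut second = half_adder(first.sum, carry_in);
    AdderOut out = { second.sum, first.carry | second.carry };
    return out;
}

/* Chain eight full adders, least significant bit first.
   The carry out of the last stage is dropped, so the result wraps at 256. */
uint8_t add8(uint8_t x, uint8_t y) {
    uint8_t result = 0;
    int carry = 0;
    for (int i = 0; i < 8; i++) {
        AdderOut bit = full_adder((x >> i) & 1, (y >> i) & 1, carry);
        result |= (uint8_t)(bit.sum << i);
        carry = bit.carry;
    }
    return result;
}
```

In this model, `add8(10, 20)` produces 30 by pushing each pair of bits through a full adder and passing the carry along to the next position, which is the chaining idea described above.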

The ALU

A real-world processor consists of multiple different kinds of computational circuits for operations such as addition, subtraction, multiplication, division, and also logical operations (AND, OR, NOT). These circuits are combined in the form of an arithmetic logic unit (ALU), and the various computational circuits within it are called functional units.

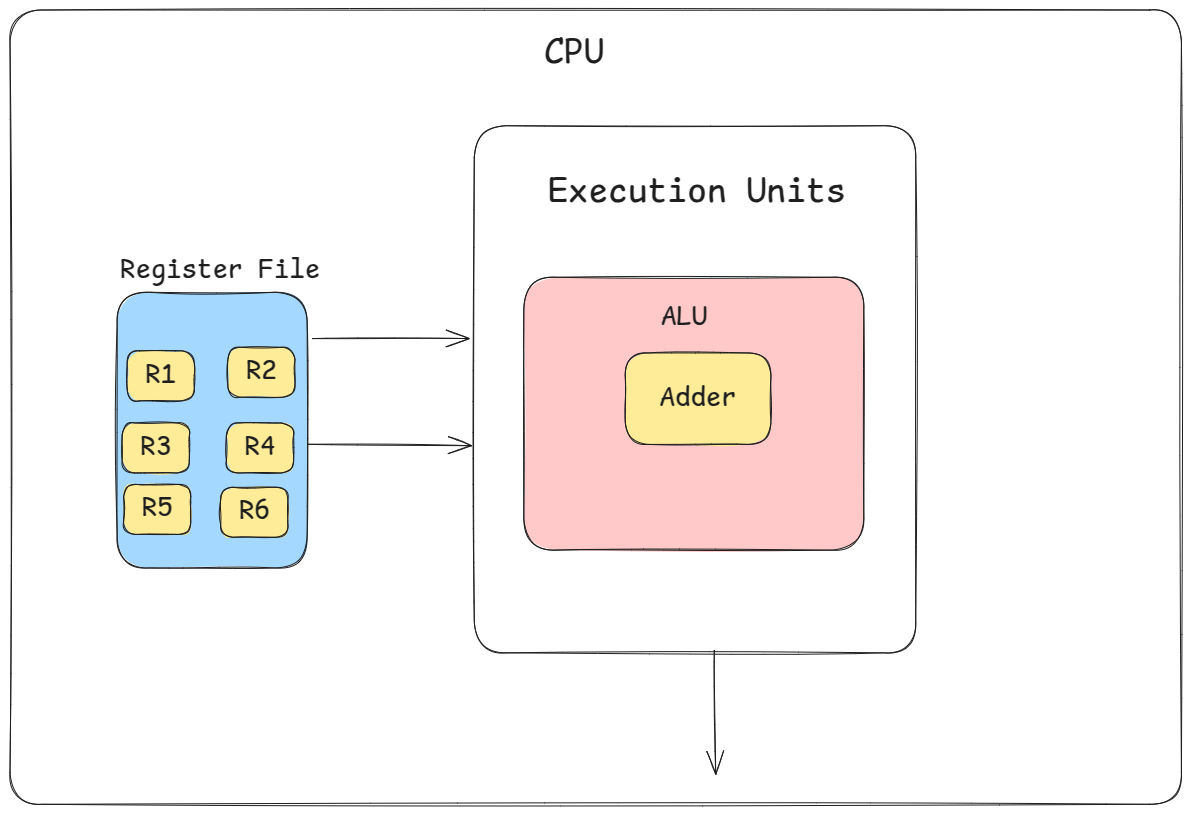

The inputs flow into the ALU, which activates the right functional unit and produces the output. Schematically, for our simple processor it looks like the following diagram.

The Need for Storage: Introducing Registers

As you can see in the ALU diagram, it receives some input, performs the computation, and produces an output. So, the question arises: from where do these inputs come and where does the result go afterward? The answer is registers.

Apart from building computational circuits, transistors can be used to construct circuits that can hold state as well, i.e. memory. Using such circuits, we can construct memory units, and one such unit is the register.

Registers are fixed-size memory units capable of storing a small number of bits. For example, modern processors have 32-bit or 64-bit wide registers. These are used to temporarily hold data during computation. For instance, when performing an add operation, the input parameters are first stored in the registers, and then their values are fed into the ALU to perform the computation.

Typically, during program execution, data is moved into the registers from main memory (the RAM) and after the computation is done, the result is written back to the main memory. This frees up the register for other computations.

In our example of building a simple calculator, we need:

Two registers to hold the numbers we want to add (let’s say R1 and R2)

One register to hold the result of the addition. However, we could also use one of the two registers to store the output.

But real-world processors have many registers, e.g., the x86-64 architecture has 16 general-purpose registers. These registers are combined into a register file from which the data flows into the ALU. Let’s update the architecture diagram of our processor to see how it looks after the introduction of a register file consisting of 6 registers:

The diagram has started to show the typical organization of computer hardware at an abstract level. Typically, the ALU isn’t the only execution unit within the processor, so we have abstracted it inside an execution unit. The data flows from the register file into the execution unit to execute the instructions, and an output comes out of the execution units. As we go along, we will furnish more details in the diagram.

At this stage, our ALU is extremely limited; it can only perform an addition. Let’s extend it.

Adding Features and Control to Our Calculator

A processor capable of just addition is not very useful. We need more features, such as subtraction, multiplication, and division. All of these require computational circuits similar to the adder.

For example, subtraction can be implemented using the adder itself by simply negating the second operand value. But operations like multiplication need their own specialized circuits. We can implement these additional operations by adding separate circuits for each one, resulting in a more functional ALU with multiple functional units.
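Here is a small C sketch of the subtraction trick. It assumes a two's complement representation (the text above does not name one), where negating a value means inverting every bit and adding 1. The functions `adder8` and `sub8` are hypothetical stand-ins for the circuits.

```c
#include <stdint.h>

/* Stand-in for the adder circuit: 8-bit addition that wraps at 256. */
uint8_t adder8(uint8_t a, uint8_t b) {
    return (uint8_t)(a + b);
}

/* Subtract by negating the second operand in two's complement
   (invert every bit, then add 1) and feeding it to the same adder. */
uint8_t sub8(uint8_t a, uint8_t b) {
    uint8_t negated_b = adder8((uint8_t)~b, 1);
    return adder8(a, negated_b);
}
```

Here `sub8(20, 10)` gives 10 without any dedicated subtraction circuit. `sub8(10, 20)` gives 246, which is the 8-bit two's complement pattern for -10.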

While we discussed the construction of the adder at the level of logic gates, we will not cover the remaining circuits at that level of detail. While that knowledge is valuable, as software engineers, it is not necessary to understand how everything works at the circuit level.

With multiple functional units in the ALU and multiple registers, we need a way to control which operation is performed and which registers are involved. This is done by a component of the processor, called the control unit.

The Control Unit and Decoders

The Control Unit is responsible for managing the flow of data between the registers and the ALU, and for selecting the appropriate functional unit within the ALU. It orchestrates the entire computation process by sending specific control signals to activate exactly the right components at the right time.

To do this, the Control Unit must know three things:

What operation needs to be performed. (e.g., addition, multiplication)

Where the operands live. (i.e., which registers hold the operands)

Where the result needs to be stored. (i.e., the destination register, or the memory address)

This information is provided to the Control Unit in the form of a binary encoded instruction.

Yes, here we are talking about the program that you and I write. Our programs get compiled down to a sequence of binary encoded instructions that the processor can decode and execute. We will talk about program execution later, but right now let’s focus on how the instructions are encoded and decoded.

Instruction Encoding and Decoding

Every hardware architecture usually has its own encoding format, and the details vary. So, instead of focusing on a specific implementation, we can try to get a general understanding of what this whole process involves. Let’s first understand what an encoded instruction may look like.

An instruction in our simple architecture needs to provide three pieces of information:

The opcode: which operation to perform

The two operand registers: from where the data for executing the operation comes

The destination operand register: where does the result go

Now, let’s say our hardware currently supports four operations (+, -, /, *). We can encode all of them using 2 bits. But to leave room for more operations in the future, we can make the opcode 3 bits wide and encode these as follows:

000 = Addition

001 = Multiplication

010 = Subtraction

011 = Division

Similarly, we have six registers, which we can encode using 3 bits:

000 = R1

001 = R2

010 = R3

011 = R4

100 = R5

101 = R6

For instance, an instruction to multiply the values in R1 and R2 and store the result in R3 will be encoded as: 001000001010 (opcode 001, operand registers 000 and 001, destination register 010).

To decode the instruction, the control unit contains a decoder - a logic circuit (similar to the adder we saw previously) which maps these opcode and register bits to the right functional unit and registers.

Based on the decoded opcode and register names, the control unit sends control signals to the register file and the ALU which internally use that signal to select the appropriate functional unit (e.g., the adder) and the right register (e.g., R1).

The ALU uses the control signal to switch on the required functional unit. In the case of the register file, a multiplexer is involved which selects the right register in the file, and its data flows into the ALU.
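The encoding and decoding steps can be sketched in C with a few shifts and masks. The field order (opcode, first operand, second operand, destination, each 3 bits wide) is inferred from the example encoding 001000001010 above. The `encode` and `decode` functions and the `Decoded` struct are hypothetical names.

```c
#include <stdint.h>

/* Opcode and register numbers follow the tables in the text. */
enum { OP_ADD = 0, OP_MUL = 1, OP_SUB = 2, OP_DIV = 3 };
enum { R1 = 0, R2 = 1, R3 = 2, R4 = 3, R5 = 4, R6 = 5 };

typedef struct { unsigned opcode, src1, src2, dst; } Decoded;

/* Pack four 3-bit fields into a 12-bit instruction:
   [opcode | src1 | src2 | dst], with the opcode in the highest bits. */
uint16_t encode(unsigned opcode, unsigned src1, unsigned src2, unsigned dst) {
    return (uint16_t)(((opcode & 7u) << 9) | ((src1 & 7u) << 6) |
                      ((src2 & 7u) << 3) | (dst & 7u));
}

/* Split a 12-bit instruction back into its four fields. */
Decoded decode(uint16_t instruction) {
    Decoded d;
    d.opcode = (instruction >> 9) & 7u;
    d.src1   = (instruction >> 6) & 7u;
    d.src2   = (instruction >> 3) & 7u;
    d.dst    = instruction & 7u;
    return d;
}
```

`encode(OP_MUL, R1, R2, R3)` yields 0x20A, which is 001000001010 in binary, and `decode` recovers the same four fields. A hardware decoder does this with wires and gates rather than shifts, but the mapping is the same.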

The Instruction Pointer and the Instruction Execution Cycle

Up to this point, we have explained how the Control Unit decodes instructions and orchestrates data movement between the Register File and the ALU. But we haven’t discussed where the control unit gets these instructions from.

In most hardware architectures, there is a special register called the instruction pointer (often called the program counter). This register’s role is to contain the address of the next instruction of the program.

The control unit reads the address in the Instruction Pointer and fetches the instruction from main memory. The instruction fetched is then stored in another special register called the instruction register (IR). Once the instruction register has a new instruction, the whole process of decoding and executing the instruction takes place.

These registers enable what’s known as the instruction execution cycle - the fundamental process by which all programs run:

Fetch: The Control Unit reads the address stored in the Instruction Pointer and retrieves the instruction from that memory location, placing it in the Instruction Register.

Decode: The Control Unit decodes the instruction in the IR, determining which operation to perform and which registers to use.

Execute: The appropriate ALU circuit is activated to perform the computation using data from the specified registers.

Store: The result is written back to the destination register (or memory, as needed).

Update IP: The Instruction Pointer is incremented to point to the next instruction in memory.

This cycle explains how a program’s instructions are executed one after another. But how is the Instruction Pointer register initialized? The answer is the operating system kernel.

When a program first starts, the operating system loads the program’s instructions into memory and initializes the Instruction Pointer to the address of the first instruction. From that point on, the hardware takes over, executing instructions one by one through this cycle.
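The whole cycle fits in a short C loop. This is a toy model of our simple processor, not of any real CPU. It reuses the 12-bit encoding from earlier and adds two things the article does not define: a hypothetical `OP_HALT` opcode so the loop can stop, and a policy of simply stopping on division by zero.

```c
#include <stdint.h>

enum {
    OP_ADD = 0, OP_MUL = 1, OP_SUB = 2, OP_DIV = 3,
    OP_HALT = 7  /* hypothetical: not in the article's opcode table */
};

typedef struct {
    uint32_t regs[8];  /* R1..R6 at indices 0..5; 8 slots so any 3-bit index is in range */
    uint16_t ip;       /* Instruction Pointer: index of the next instruction */
    uint16_t ir;       /* Instruction Register: the instruction being processed */
} Cpu;

/* Run until a HALT, an undefined opcode, or a division by zero. */
void run(Cpu *cpu, const uint16_t *memory) {
    for (;;) {
        /* Fetch */
        cpu->ir = memory[cpu->ip];

        /* Decode */
        unsigned opcode = (cpu->ir >> 9) & 7u;
        unsigned src1   = (cpu->ir >> 6) & 7u;
        unsigned src2   = (cpu->ir >> 3) & 7u;
        unsigned dst    = cpu->ir & 7u;

        /* Execute */
        uint32_t a = cpu->regs[src1], b = cpu->regs[src2], result;
        switch (opcode) {
            case OP_ADD: result = a + b; break;
            case OP_MUL: result = a * b; break;
            case OP_SUB: result = a - b; break;
            case OP_DIV:
                if (b == 0) return;  /* real hardware would raise an exception */
                result = a / b;
                break;
            default: return;         /* OP_HALT or an undefined opcode */
        }

        /* Store */
        cpu->regs[dst] = result;

        /* Update IP */
        cpu->ip++;
    }
}
```

Setting the `ip` field to 0 before calling `run` plays the role of the operating system here: it points the processor at the first instruction and then gets out of the way.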

But what about Memory?

So far we have covered everything needed to construct bare-bones hardware capable of doing simple arithmetic. But we have not talked about memory, even though we referred to it a few times. Most of the people reading my blog are experienced programmers, so I expect you already know how memory works. But let’s touch upon it briefly to complete the picture.

The Main Memory

So far, we have only talked about registers as memory. Registers are fast, fixed-sized memory units, but they have two significant limitations:

Limited Number of Registers:

Registers are small and fast, but they are available only in limited numbers. A typical program uses far more variables than there are registers in the hardware. This creates the need for a larger memory that can be used when there are not enough registers.

Lack of Address-based Access:

Registers can only hold primitive data types, e.g. integers and floats. But we need more powerful types, such as arrays and structs to build higher-level programming abstractions. These data types need address-based access.

For example, if an array A starts at address 0x04 and each element occupies 4 bytes, then A[2] can be accessed at the address 0x0c. This isn’t possible with registers.

This leads to the requirement of a larger memory with an address-based access scheme, which is what the main memory provides. While we don’t have space to go deeper into the physical construction of main memory, let’s discuss it briefly.
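The address arithmetic behind the array example is simple enough to write down in C. The helper `element_address` is a hypothetical name. It computes the same base + index × size expression that a compiler emits for array indexing.

```c
#include <stddef.h>
#include <stdint.h>

/* Address of element `index` in an array that starts at `base`
   and whose elements are `elem_size` bytes each. */
uintptr_t element_address(uintptr_t base, size_t index, size_t elem_size) {
    return base + index * elem_size;
}
```

For the array above, `element_address(0x04, 2, 4)` gives 0x0c. The index can be any value computed at run time, which is exactly what register names cannot offer.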

Physical Structure of Main Memory

Physically, the main memory is implemented as an array of memory cells, each storing one bit of information. These cells are arranged on silicon chips in a grid of rows and columns for manufacturing reasons. However, the memory is presented to the programmer as a linear sequence of bytes, each with a unique address. There are two types of main memories depending on their physical construction:

Dynamic RAM (DRAM): The most common type of main memory. Each cell is composed of a capacitor and a transistor. The capacitor holds a charge to represent a 1 or 0, but the charge leaks over time, so the cells must be periodically refreshed. This makes DRAM relatively slower compared to registers.

Static RAM (SRAM): Used primarily for CPU caches. Each cell is made from flip-flops instead of capacitors, making it faster and more reliable but also more expensive and power-hungry.

Communication Between CPU and Memory

Interacting with memory is not straightforward. Multiple pieces of information are required to access it, such as:

Are we reading from memory or writing to it?

What is the address where the operation is to be performed?

To solve this communication challenge, there are multiple buses between the processor and the memory. These are:

Control Bus: Specifies whether the operation is read or write.

Address Bus: Carries the memory address specifying where to read from or write to.

Data Bus: Transfers the actual data between the processor and the memory.

For instance, when the control unit needs to fetch an instruction, here are the things it needs to do:

Read the instruction address from the Instruction Pointer and place it on the address bus

Update the control bus to indicate the read operation

Read the returned instruction from the data bus
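The three fetch steps above can be modeled in C by treating the buses as fields of a struct. This is a deliberately simplified sketch: real buses involve timing, widths, and handshaking that are ignored here, and every name in it (`Bus`, `memory_cycle`, `fetch`, `MEMORY_SIZE`) is an assumption made for illustration.

```c
#include <stdint.h>

#define MEMORY_SIZE 256

typedef enum { BUS_READ, BUS_WRITE } BusControl;

typedef struct {
    BusControl control;  /* control bus: is this a read or a write? */
    uint16_t   address;  /* address bus: which memory location? */
    uint8_t    data;     /* data bus: the value being transferred */
} Bus;

/* The memory's side of one transfer: inspect the control and address
   lines, then either drive the data lines (read) or store them (write). */
void memory_cycle(uint8_t memory[MEMORY_SIZE], Bus *bus) {
    uint16_t addr = bus->address % MEMORY_SIZE;
    if (bus->control == BUS_READ) {
        bus->data = memory[addr];
    } else {
        memory[addr] = bus->data;
    }
}

/* The control unit's side of an instruction fetch. */
uint8_t fetch(uint8_t memory[MEMORY_SIZE], Bus *bus, uint16_t instruction_pointer) {
    bus->address = instruction_pointer;  /* 1. place the address on the address bus */
    bus->control = BUS_READ;             /* 2. signal a read on the control bus */
    memory_cycle(memory, bus);           /*    memory responds */
    return bus->data;                    /* 3. read the result from the data bus */
}
```

A write works the same way in reverse: the processor sets `control` to `BUS_WRITE`, puts the value on `data`, and the memory stores it at `address`.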

Apart from the processor using memory to fetch program instructions, program instructions can also read or write memory. Let us discuss how that is made possible.

The Load/Store Units

We already discussed that the control unit fetches the instructions from main memory and puts them in the instruction register for decoding and execution.

Apart from that, the program instructions can themselves read or write memory. For instance, a program that iterates through an array of integers to sum them up has to bring each element of the array from memory into one of the registers to update the sum (which itself will be kept in a register).

Most hardware architectures have instructions that can move data between registers and memory, in both directions. For example, the mov instruction in x86 can be used to move data between the registers, and also between a register and the memory. At the hardware level, the CPU contains special execution units called the Load and Store units that are activated to execute such instructions.
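As a small illustration of the array-sum example, consider the C function below. The comments describe what a compiler would typically do with it. The exact instructions and register choices depend on the compiler and optimization level, so treat them as assumptions rather than guarantees.

```c
#include <stddef.h>

/* Sum the elements of an array that lives in main memory. */
long sum_array(const long *values, size_t count) {
    long sum = 0;          /* typically kept in a register for the whole loop */
    for (size_t i = 0; i < count; i++) {
        sum += values[i];  /* each values[i] is a load from memory into a register */
    }
    return sum;            /* the result stays in a register until the caller stores it */
}
```

Each iteration needs one load from memory followed by one add between registers, which is the division of labor between the load unit and the ALU.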

The following diagram shows the processor architecture after the introduction of memory.

There is No Spoon

This is a great place to mention an interesting observation that you will appreciate.

As far as the processor is concerned, everything is just bits. The hardware doesn't see integers, floating-point numbers, strings, or even code—it only processes binary data. The distinction between these abstractions exists only in our minds or at higher layers of abstraction, such as programming languages and compilers.

The hardware doesn't know whether a value in memory is a float or an int. It merely moves bits around according to the instructions it's given. For example, most processors have separate sets of registers for integer and floating-point values. When you instruct the processor to move data from memory to a register, it performs that operation without ever verifying the nature of the data.

If you mistakenly load a 32-bit floating-point value into an integer register, the hardware will treat those bits as an integer. It doesn't validate or question; it only executes. The compiler enforces type safety in higher-level languages, but when you're working with assembly, you are responsible for ensuring that the right instructions operate on the right data. There’s no safety net.
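You can see this from C as well. The sketch below copies the raw bits of a float into an integer without converting the value. It assumes that `float` is a 32-bit IEEE 754 value, which holds on mainstream platforms but is not guaranteed by the C standard. `float_bits` is a hypothetical helper name.

```c
#include <stdint.h>
#include <string.h>

/* Copy the 32 bits of a float into an integer without converting the value. */
uint32_t float_bits(float f) {
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);
    return bits;
}
```

Under that assumption, `float_bits(1.0f)` returns 0x3F800000, which read as an integer is 1065353216. The bits are identical in both cases; only the interpretation differs.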

Even the distinction between code and data is just another layer of abstraction. When the control unit fetches an instruction from the address in the Instruction Pointer, the processor simply decodes the bits as an instruction. If you somehow update the Instruction Pointer with the address of a piece of data, such as an integer, the control unit will still read it and attempt to decode it as an instruction. If the bit pattern is invalid as an instruction, the hardware will raise an exception and the program will crash. But it doesn’t do this because it realizes you provided data instead of code. It fails only because the bits didn’t correspond to a valid instruction.

In the end, the processor does not recognize or care about your abstractions. It only sees bits. This realization is similar to the moment in The Matrix when the child tells Neo: “There is no spoon.”

From High-level code to the Hardware-level Execution

We have covered the fundamentals of how the hardware is organized. Now let’s connect everything from top to bottom and see how a high-level program is translated to machine code and executed.

Compilation of High-Level Code to Machine Code

As we have seen, the hardware understands only instructions encoded in binary form. But, writing programs directly in binary is cumbersome and error-prone, which is why hardware designers define mnemonic instructions: cryptic but human-readable representations of these binary instructions. This is known as the assembly language.

For example, in x86 assembly, the following instruction adds the values in the registers rax and rdx, and stores the result back into rdx.

add %rax, %rdx

rax and rdx are register names in the x86-64 architecture, and the assembler syntax requires using % to represent register names. Don’t worry about these details; we will cover them during the course.

Even writing assembly by hand is cumbersome, so we use high-level languages which are compiled down to assembly or directly to machine code for execution. Consider the following high-level C code:

long a = 10;

long b = 20;

long sum = a + b;

The C compiler will compile this into assembly code which may look like this:

movq $10, %rax # Store 10 in register rax

movq $20, %rdx # Store 20 in register rdx

addq %rax, %rdx # rdx = rax + rdx

The # character starts a comment in the GNU assembler’s x86 syntax used here. Some other assemblers, such as NASM, use ; instead.

Here’s what is happening in the code:

The movq instructions are used in x64 assembly to write a long (64-bit integer) value into a register. So, the two movq instructions are writing the values 10 and 20 into rax and rdx, respectively.

The addq instruction is used to add the values of the two registers. The 2nd register is used as the destination. So, this is equivalent to rdx = rax + rdx.

But, this assembly code isn’t what the hardware understands. So, there is another translation step where this assembly code is converted into machine code by a tool called the assembler.

The assembler translates one assembly source file at a time and creates a binary file containing the machine code. This file is called the object file. If there are multiple assembly source files, then the assembler creates one object file for each of them.

Finally, to produce the executable file, a third tool called the linker is used, which stitches these object files together into a single file. The role of the linker is to resolve the addresses of the symbols and functions across multiple files.

For instance, if your C program consists of two source files: main.c and math.c, where main.c calls functions defined in math.c, then the compiler and assembler will produce two object files: main.o and math.o. Afterwards, the linker will generate the final executable such that it contains the code for all the functions called from main.

The output of the linker is the final executable binary file. Even though it is a binary file, its format has to be understandable by the operating system because ultimately it is the OS which has to load it into memory for execution.

The Executable Binary Format

To execute a program, the operating system needs to load it into memory and update the Instruction Pointer (IP) register with the address of the first instruction. For this, the OS must understand the format of the executable binary file.

Operating systems standardize the format of binaries. For example, Linux and BSD systems use the Executable and Linkable Format (ELF).

In ELF, the binary file is organized as a sequence of sections, where each section stores a particular kind of data. For example:

The text section holds the binary encoded instructions (the machine code).

The data section holds the statically declared program data (e.g., your initialized global variables).

The bss section holds uninitialized data.

Note: There are many more kinds of sections and the overall ELF format is more detailed, but we don’t need to know all that right now. Knowledge of the sections and why they exist will be useful when reading/writing assembly code.

The organization into sections serves two purposes:

Contiguous Instruction Storage: The program instructions need to be stored contiguously in memory so that the hardware can simply increment the Instruction Pointer (IP) by the size of the instruction to get the next instruction address.

Permission Management: Different kinds of data require different permissions. For example:

Program code should be readable and executable, but not writable.

Data may be readable and writable, but not executable.

By segregating different kinds of data, the OS can set appropriate permissions to enhance security and stability.

This well-defined format enables the operating system to parse the file and load the different sections in different regions in memory with appropriate permission flags.
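To connect the sections back to source code, here is a tiny C sketch annotated with where each piece typically ends up. The placements in the comments describe the usual behavior of GCC and Clang on Linux and are stated as assumptions. The exact layout depends on the toolchain and flags, and all the names are hypothetical.

```c
int initialized_counter = 42;  /* initialized global: typically the data section */
int uninitialized_counter;     /* uninitialized global: typically the bss section,
                                  guaranteed by C to start out as zero */

/* The machine code for this function typically goes into the text section. */
int next_value(void) {
    initialized_counter += 1;
    return initialized_counter + uninitialized_counter;
}
```

The bss section only needs to record how many bytes to reserve, since the loader fills them with zeros. That is why uninitialized data gets its own section instead of sharing one with initialized data.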

Loading the Program into Memory

When we execute a program, the operating system loads all the code and data into memory before execution begins. Because the operating system understands the binary format, this process is straightforward. The OS allocates memory pages in different regions of memory for different sections of the binary:

The text section is placed in executable memory.

The data section is placed in read/write memory.

After setting up the pages and loading all the data, the operating system updates the Instruction Pointer with the address of the first instruction of the program. This is possible because the OS knows exactly where the code was loaded in memory.

Once this setup is done, control is transferred to the hardware to begin executing the program.

The Instruction Fetch-Decode-Execute Cycle

Now that the program is loaded into memory, the Control Unit takes over and begins executing instructions by repeating the Fetch-Decode-Execute Cycle:

Fetch: The Control Unit fetches the next instruction from memory using the address stored in the Instruction Pointer (IP).

Decode: The Control Unit decodes the instruction to determine the operation, source operands, and destination register.

Execute: The ALU performs the specified operation.

Store: The result is written back to the appropriate register or memory location.

Increment IP: The Instruction Pointer (IP) is incremented to point to the next instruction, and the cycle repeats until the program ends.

This cycle is fundamental to how all processors operate. Each instruction is processed through these stages in a continuous loop until the program completes.

Conclusion

We began this article by asking a simple question: What does it take to build a processor for a simple computer that can add two numbers? From this modest starting point, we’ve uncovered the fundamental architecture that underpins all modern computing.

Our simple processor led us to discover key components that exist in every computer:

Transistors and Logic Gates: The basic building blocks that implement Boolean operations and enable computation at the physical level.

ALU: The computational core that began as a simple adder in our processor but expands to handle diverse operations in real processors.

Registers: Fast, accessible storage locations that hold the data being actively processed.

Control Unit: The orchestrator that decodes instructions and coordinates all components, determining which operations to perform and on what data.

Instruction Execution Cycle: The fundamental fetch-decode-execute cycle that drives all program execution.

Memory System: The larger storage hierarchy that holds both instructions and data beyond what fits in registers.

While our simple processor serves as a clean conceptual model, real architectures like x86 and ARM are much more sophisticated. They incorporate advanced features such as pipelining, branch prediction, and cache hierarchies.

Yet despite this complexity, these advanced processors still follow the same fundamental organization and principles we’ve explored. They still fetch instructions from memory, decode them to determine operations, execute using an ALU, store results, and move to the next instruction.

By understanding this bottom layer, how hardware actually implements computation, you now have a solid foundation for learning assembly programming.

In our upcoming x86 assembly course, we’ll build on this foundation by exploring the specific registers, instructions, and memory addressing modes of the x86 architecture. We’ll connect high-level programming constructs to their low-level implementation, allowing you to see through the abstractions to the true nature of computation - just as Neo finally saw the Matrix for what it really was.

What to Read Next

Real-world processors have many more advanced features for delivering high performance, such as instruction pipelining, caches, and branch prediction. If you are curious, then my recent article is the perfect stepping stone to learn about them.

Hardware-Aware Coding: CPU Architecture Concepts Every Developer Should Know
