Hi everyone,

Thanks for joining this session. Please enjoy the recording.

We covered the following topics:

The basic design principle behind the TSP and LPU: the limitations in CPU/GPU hardware that led Groq to create custom processors

The architecture of TSP and program execution on it

From TSP to LPU

Physical packaging of TSPs in LPU

Compiler scheduled data flow in LPU

Program execution on LPU & analyzing performance results

The following is an AI-generated summary of the session (I felt it was decent enough to use for a quick review).

Meeting summary for Comparing CPUs, GPUs and LPU + AMA (03/17/2024)

Quick recap

Abhinav discussed the advances and challenges in language processing units (LPUs) and their impact on large language models. He also presented a detailed breakdown of the TSP (tensor streaming processor) hardware, explaining its design, architecture, and functionality. The role of the compiler in distributed systems, the error correction mechanism, and results from Groq’s papers were also discussed. The talk also covered clock synchronization, data flow, and the complexities of compiling and running machine learning (ML) code on specific hardware configurations.

Summary

Language Processing Units and Large Language Models

Abhinav discussed the advances and challenges in language processing units (LPUs) and their impact on large language models. He highlighted the achievements of a company that broke all records for inference on large language models. He further explained the architecture and functioning of the LPU, emphasizing its predictability and stability. Abhinav closed by encouraging questions and discussion on the topic.

CPU and GPU: Limitations and Fixes

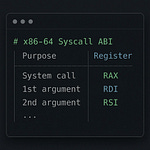

Abhinav discussed the limitations of CPUs and GPUs, emphasizing that control over instruction scheduling and execution lies with the hardware, not the compiler. He highlighted issues of instruction latency, non-deterministic architecture, and the inability to guarantee program execution time. Charles questioned the relevance of determinism to achieving high resource utilization; Abhinav clarified that large-scale distributed systems need to avoid any source of non-determinism.
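
To make the determinism point concrete, here is a minimal toy sketch in Python. It is not Groq's compiler or any real hardware model: the instruction names, the latencies, the stall probability, and the 64-chip count are all assumptions chosen for illustration. It contrasts a run where the hardware may add stall cycles that are unknown at compile time with a run where every latency is fixed, so the total is known before execution. It also assumes a synchronized step across chips finishes only when the slowest chip finishes.

```python
import random

# Hypothetical instruction stream: (name, nominal latency in cycles).
# These values are illustrative assumptions, not measurements.
PROGRAM = [("load", 4), ("matmul", 10), ("add", 1), ("store", 4)]


def run_hardware_scheduled(program, rng):
    """Toy CPU/GPU-style run: the hardware may add stall cycles
    (think cache misses or arbitration) that the compiler cannot predict."""
    cycles = 0
    for _name, latency in program:
        stall = rng.choice([0, 0, 0, 20])  # assumed: 1-in-4 chance of a 20-cycle stall
        cycles += latency + stall
    return cycles


def run_compiler_scheduled(program):
    """Toy statically scheduled run: every latency is fixed,
    so the total cycle count is known at compile time."""
    return sum(latency for _name, latency in program)


def step_time(per_chip_cycles):
    """A synchronized step across chips ends when the slowest chip ends."""
    return max(per_chip_cycles)


rng = random.Random(0)  # seeded so the sketch is repeatable
num_chips = 64          # assumed system size

hw_step = step_time([run_hardware_scheduled(PROGRAM, rng) for _ in range(num_chips)])
static_step = step_time([run_compiler_scheduled(PROGRAM) for _ in range(num_chips)])

print("hardware-scheduled step:", hw_step, "cycles")
print("compiler-scheduled step:", static_step, "cycles")
```

In this toy model the compiler-scheduled step always takes the sum of the nominal latencies, while the hardware-scheduled step is set by whichever chip stalled the most. The more chips take part in a step, the more likely at least one of them hits a bad case, which is one way to read the claim that large-scale distributed systems need to avoid sources of non-determinism.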