Last night we did a live session on performance engineering. I’ve done sessions on 1BRC in the past, but this time the focus was on learning things at a more fundamental level. We spent a good chunk of the beginning on the basics of how the CPU executes code and how using the data cache efficiently can improve program performance significantly. We also touched on the branch predictor and instruction-level parallelism.

After that, we discussed the optimization techniques used by the winners of 1BRC. These included:

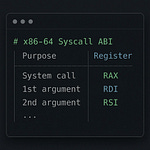

- An analysis of the `read` and `mmap` system calls: how they work under the hood and their trade-offs
- Static partitioning versus work stealing for distributing work in a multithreaded solution
- The cost of branch mispredictions in a hot loop, and a cool branchless technique for finding delimiters in strings
- Why a shared data structure can hurt performance in a multithreaded system
- The design of a cache-efficient hash table
- Loop unrolling to improve performance by 3x
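As one illustration of the hash table item, here is a minimal Java sketch of an open-addressing table with linear probing. It assumes "cache efficient" means keeping keys and statistics in flat primitive arrays, so a lookup touches nearby memory instead of chasing per-entry objects the way `java.util.HashMap` does with its nodes. The class name `StationTable`, the 128-byte inline key slot, and storing temperatures as integer tenths of a degree are illustrative assumptions, not necessarily what the winning entries or the session used.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Hypothetical open-addressing table for per-station stats (illustrative sketch). */
public class StationTable {
    static final int KEY_SLOT = 128; // assumption: names fit in 128 bytes (1BRC caps them at 100)

    private final int mask;         // capacity - 1; capacity is a power of two
    private final byte[] keyBytes;  // keys stored inline: slot i starts at i * KEY_SLOT
    private final int[] keyLen;     // 0 marks an empty slot
    private final int[] hashes;     // cached hash, checked before comparing bytes
    private final int[] min, max, count; // stats as parallel primitive arrays, no per-entry objects
    private final long[] sum;
    private int size;

    public StationTable(int capacity) {
        if (capacity < 2 || Integer.bitCount(capacity) != 1) {
            throw new IllegalArgumentException("capacity must be a power of two >= 2");
        }
        mask = capacity - 1;
        keyBytes = new byte[capacity * KEY_SLOT];
        keyLen = new int[capacity];
        hashes = new int[capacity];
        min = new int[capacity];
        max = new int[capacity];
        count = new int[capacity];
        sum = new long[capacity];
    }

    private static int hash(byte[] key, int off, int len) {
        int h = 0;
        for (int i = 0; i < len; i++) {
            h = 31 * h + key[off + i];
        }
        return h ^ (h >>> 16); // fold high bits into the low bits used for indexing
    }

    private boolean matches(int slot, int h, byte[] key, int off, int len) {
        int base = slot * KEY_SLOT;
        return hashes[slot] == h && keyLen[slot] == len
                && Arrays.equals(keyBytes, base, base + len, key, off, off + len);
    }

    /** Records one reading, in tenths of a degree, for the name in key[off, off + len). */
    public void add(byte[] key, int off, int len, int tenths) {
        if (len < 1 || len > KEY_SLOT) {
            throw new IllegalArgumentException("key length out of range");
        }
        int h = hash(key, off, len);
        int slot = h & mask;
        while (keyLen[slot] != 0 && !matches(slot, h, key, off, len)) {
            slot = (slot + 1) & mask; // linear probing: try the neighbouring slot
        }
        if (keyLen[slot] == 0) { // new station: claim the empty slot
            if (size == mask) { // keep one slot free so probing always terminates
                throw new IllegalStateException("table full");
            }
            System.arraycopy(key, off, keyBytes, slot * KEY_SLOT, len);
            keyLen[slot] = len;
            hashes[slot] = h;
            min[slot] = tenths;
            max[slot] = tenths;
            size++;
        }
        min[slot] = Math.min(min[slot], tenths);
        max[slot] = Math.max(max[slot], tenths);
        sum[slot] += tenths;
        count[slot]++;
    }

    /** Returns {min, max, sum, count} for a station, or null if it was never added. */
    public long[] get(String station) {
        byte[] k = station.getBytes(StandardCharsets.UTF_8);
        int h = hash(k, 0, k.length);
        for (int slot = h & mask; keyLen[slot] != 0; slot = (slot + 1) & mask) {
            if (matches(slot, h, k, 0, k.length)) {
                return new long[] {min[slot], max[slot], sum[slot], count[slot]};
            }
        }
        return null;
    }

    public static void main(String[] args) {
        StationTable t = new StationTable(1 << 10);
        byte[] hamburg = "Hamburg".getBytes(StandardCharsets.UTF_8);
        t.add(hamburg, 0, hamburg.length, 120); // 12.0
        t.add(hamburg, 0, hamburg.length, -34); // -3.4
        System.out.println(Arrays.toString(t.get("Hamburg"))); // prints [-34, 120, 86, 2]
    }
}
```

With linear probing, a collision moves on to the next slot, whose cached hash sits right next to the current one in memory, and comparing cached hashes first lets most mismatches be rejected without touching the key bytes.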

I hope you enjoy the recording. Pro tip: watch it at 1.5x or 2x speed.

The real benefit of these sessions comes from attending live, because you get to ask questions and drive the discussion. However, if you want access to the recording without a subscription, you can purchase it at the link below.

Note to attendees: We discussed SWAR (SIMD within a register), but I did not explain the code because doing so would have taken a lot of time. Those interested can check out this blog post.
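For a quick taste of the SWAR idea, here is a minimal Java sketch applied to finding the `;` delimiter in a 1BRC line: load 8 bytes into a `long` and test all of them at once with a few arithmetic and bitwise operations, with no per-byte branch. It assumes the bytes are read little-endian through a `ByteBuffer`; the class and method names are hypothetical, and this is not the code from the session or the linked post.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

public class SwarDelimiter {
    private static final long SEMICOLONS = 0x3B3B3B3B3B3B3B3BL; // ';' (0x3B) in every byte lane
    private static final long ONES = 0x0101010101010101L;
    private static final long HIGHS = 0x8080808080808080L;

    /**
     * Sets the high bit of each byte lane where word had ';'. Lanes above the
     * first match can be false positives, but the lowest set lane is always exact.
     */
    static long semicolonMask(long word) {
        long x = word ^ SEMICOLONS;     // matching bytes become 0x00
        return (x - ONES) & ~x & HIGHS; // classic "has a zero byte" trick
    }

    /** Returns the index of the first ';' at or after start, or -1 if there is none. */
    static int indexOfSemicolon(ByteBuffer buf, int start) {
        int i = start;
        // Examine 8 bytes per iteration while a full long is available.
        while (i + Long.BYTES <= buf.limit()) {
            long mask = semicolonMask(buf.getLong(i)); // little-endian: byte i is the lowest lane
            if (mask != 0) {
                return i + (Long.numberOfTrailingZeros(mask) >>> 3);
            }
            i += Long.BYTES;
        }
        // Scalar fallback for the last few (< 8) bytes.
        for (; i < buf.limit(); i++) {
            if (buf.get(i) == ';') {
                return i;
            }
        }
        return -1;
    }

    static int indexOfSemicolon(byte[] bytes, int start) {
        return indexOfSemicolon(ByteBuffer.wrap(bytes).order(ByteOrder.LITTLE_ENDIAN), start);
    }

    public static void main(String[] args) {
        byte[] line = "Hamburg;12.0\n".getBytes(StandardCharsets.UTF_8);
        System.out.println(indexOfSemicolon(line, 0)); // prints 7
    }
}
```

The loop still branches once per 8 bytes, but not once per byte, which is where the savings over a naive byte-by-byte scan come from.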