How Python Compares Floats and Ints: When Equals Isn’t Really Equal

Another Python gotcha and an investigation into its internals to understand why this happens

This tweet (see screenshot) was trending on Twitter (X) and it caught my attention. It’s another instance of gotchas in Python due to its unique implementation details.

We know floating point math isn't precise because of the limits of its representation. Other languages often implicitly promote int to double, leading to consistent comparisons. However, Python's infinite precision integers complicate this process, leading to unexpected results.

Python compares the integer value against the double precision representation of the float, which may involve a loss of precision, causing these discrepancies. This article goes deep into the details of how CPython performs these comparisons, providing a perfect opportunity to explore these complexities.

So this is what we will cover:

Quick revision of the IEEE-754 double precision format — this is how floating-point numbers are represented in memory

Analyzing the IEEE-754 representation of the three numbers

The CPython algorithm for comparing floats and ints

Analyzing the three test scenarios in the context of the CPython algorithm

Photo by Mika Baumeister on Unsplash

A Refresher on the IEEE-754 Format

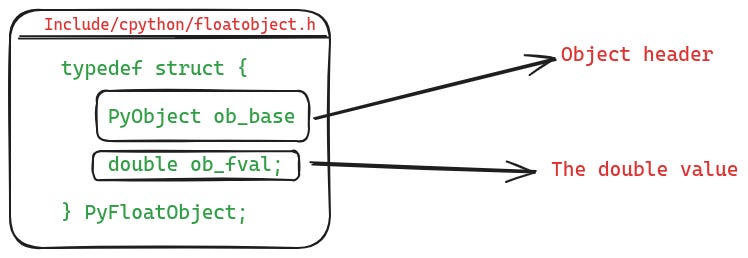

CPython internally uses the C double type to store the floating point value, and as a result it follows the IEEE-754 standard for representing these values.

Let’s do a quick revision of what the standard says about representing double precision values. If you already know this, then feel free to jump to the next section.

The double precision values are represented using 64 bits. Out of these:

1 bit is used as the sign bit

11 bits are used to represent the exponent. The exponent uses a bias of 1023, i.e., the actual exponent value is 1023 less than the value in the double precision representation.

and 52 bits are used to represent the mantissa

There is a lot of theory behind this format, how numbers are converted to it and what the various rounding modes are — there is a whole paper on it that you should read if interested.

I will cover the basics, but know that this is not the full coverage of the IEEE-754 format. I will try to explain how numbers are converted to the IEEE-754 format for the common case when they are > 1.

Converting a Floating-Point Number to IEEE-754 Format

To convert a floating-point number to its IEEE-754 binary representation you need to go through the following three steps. We will consider the example of the number 4.5 to understand each of these steps.

Step-1: Convert the Number to Binary

Converting a floating point value from decimal to binary requires that we convert the integer part and the fraction part separately and then in the binary representation join them with a binary point (.).

The binary representation of 4 is 100, and for 0.5 it is .1 (one half is the first bit after the binary point). Therefore, we can represent 4.5 as 100.1.

Step-2: Normalize the Binary Representation

The next step is to normalize the binary representation from the previous step. Normalization simply means that the binary representation must have exactly one bit, a 1, before the binary point.

In our example, the binary number is 100.1 and we have 3 bits before the binary point. To normalize it, we need to do a right shift by 2 bits. So this normalized representation will look like 1.001 * 2^2.

Step-3: Extract the Mantissa and Exponent from the Normalized Representation

The normalized representation directly gives us our mantissa and exponent. In our running example, the normalized value is 1.001. To obtain this we had to right shift the original value by 2 bits, therefore the exponent is 2.

In the IEEE-754 format, the mantissa is of the form 1.M. The leading 1 before the binary point is not really part of the binary representation: it's always known to be 1, and not storing it gives us 1 extra bit of precision. Therefore, the mantissa here is 001. As the mantissa is 52 bits wide, the remaining 49 bits will be 0s.

The Final Binary Representation

Sign bit: The number is positive, so the sign bit will be 0.

The Exponent: The exponent is 2. However, when representing it in the IEEE-754 format, a bias of 1023 needs to be added to it, which gives us 1025. So the binary value of the exponent is 10000000001.

The Mantissa: The mantissa is 001000…000 (001 followed by 49 zeros).

Finally, following is the IEEE-754 binary representation of 4.5:

(0) (10000000001) (001000…000)

(I’ve separated out the different components using parentheses for clarity.)
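The hand conversion can be double-checked from Python itself. The sketch below uses the standard `struct` module to reinterpret a float's 8 bytes as an unsigned 64-bit integer and then slices out the three fields; the helper name `ieee754_fields` is my own, not part of any library.

```python
import struct


def ieee754_fields(x: float) -> tuple[str, str, str]:
    """Split a Python float (a C double) into sign, exponent and mantissa bit strings."""
    # Pack as a big-endian double, then read the same 8 bytes back as an unsigned 64-bit int.
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    b = format(bits, "064b")
    return b[0], b[1:12], b[12:]


sign, exponent, mantissa = ieee754_fields(4.5)
print(sign, exponent, mantissa)
print(int(exponent, 2) - 1023)  # remove the bias to get the real exponent
```

For 4.5 this prints a sign of 0, the exponent bits 10000000001 (unbiased exponent 2), and a mantissa of 001 followed by 49 zeros, matching the steps above.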

Analysing the IEEE-754 Representation of the Three Test Cases

Let’s quickly put our knowledge of the IEEE-754 double precision format to use and figure out the representation of the numbers from the three test scenarios. This will come in handy when we analyze them in the context of the CPython algorithm for float comparison.

IEEE-754 Representation of 9007199254740992.0

Let’s start with the value from the first scenario: 9007199254740992.0.

It turns out 9007199254740992 is actually 2^53. That means in the binary representation it would be 1 followed by 53 zeros: 10000000000000000000000000000000000000000000000000000.

The normalized form will be 1.0 * 2^53, which will be obtained by shifting the bits 53 places to the right, giving us the exponent as 53.

Also, recall that the mantissa is only 52 bits wide, and we are shifting our value by 53 bits to the right, which means that the LSB of our original value will be lost. However, because all the bits here are 0, no harm is done and we are still able to represent 9007199254740992.0 precisely.

Let’s summarize the components of 9007199254740992.0:

Sign bit: 0

Exponent: 53 + 1023 (bias) = 1076 (10000110100 in binary)

Mantissa: 0000000000000000000000000000000000000000000000000000

IEEE-754 Representation:

0100001101000000000000000000000000000000000000000000000000000000
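The same fields can be read back from the running interpreter. This is a small sketch (the helper `double_bits` is a name I made up) that confirms 2^53 is 1 followed by 53 zeros in binary and that its double has the biased exponent 10000110100 with an all-zero mantissa. `float.hex` shows the same thing in hexadecimal.

```python
import struct


def double_bits(x: float) -> str:
    """Return the 64-bit IEEE-754 pattern of x as a string of 0s and 1s."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    return format(bits, "064b")


x = 9007199254740992.0
print(x == 2.0 ** 53)           # True
print(bin(9007199254740992))    # '0b1' followed by 53 zeros
pattern = double_bits(x)
print(pattern[0], pattern[1:12], pattern[12:])
print(x.hex())                  # 0x1.0000000000000p+53
```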

IEEE-754 Representation of 9007199254740993.0

The value from the 2nd test scenario is 9007199254740993.0 which is one higher than the previous value. Its binary representation can be obtained by simply adding 1 to the previous value’s binary representation:

10000000000000000000000000000000000000000000000000001.0

Again, to convert this to the normalized form, we will have to right shift it by 53 bits, giving us the exponent as 53.

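However, the mantissa is only 52 bits wide. When we right shift our value by 53 bits, the least significant bit (LSB), which has the value 1, no longer fits and has to be rounded away. Because 2^53 + 1 lies exactly halfway between the two nearest doubles, 2^53 and 2^53 + 2, IEEE-754's default round-half-to-even rule picks 2^53, whose mantissa is all zeros. This leads to the following components: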

Sign bit: 0

Exponent: 53 + 1023 (bias) = 1076 (10000110100 in binary)

Mantissa: 0000000000000000000000000000000000000000000000000000

IEEE-754 Representation:

0100001101000000000000000000000000000000000000000000000000000000

Notice that the IEEE-754 representation of 9007199254740993.0 is the same as that of 9007199254740992.0. This means that in its in-memory representation, 9007199254740993.0 is actually represented as 9007199254740992.0.

This explains why Python gives the result for 9007199254740993 == 9007199254740993.0 as False, because it sees this as a comparison between 9007199254740993 and 9007199254740992.0.
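To see this collapse directly, the sketch below compares the bit patterns of the two float literals. `double_bits` is again a helper name I chose for illustration; the point is that the literal 9007199254740993.0 is already rounded to 2^53 when Python parses it, before any int/float comparison happens.

```python
import struct


def double_bits(x: float) -> str:
    """Return the 64-bit IEEE-754 pattern of x as a string of 0s and 1s."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    return format(bits, "064b")


a = 9007199254740992.0
b = 9007199254740993.0   # rounded to the nearest double when the literal is parsed

print(a == b)                            # True: both literals give the same double
print(double_bits(a) == double_bits(b))  # True: identical bit patterns
print(int(b))                            # 9007199254740992
print(9007199254740993 == b)             # False: the exact int is 1 larger than the stored double
```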

We will recall this peculiarity when analyzing the 2nd scenario after discussing the CPython algorithm.

IEEE-754 Representation of 9007199254740994.0

The number in the 3rd and final test scenario is 9007199254740994.0, which is 1 more than the previous value. Its binary representation can be obtained by adding 1 to the binary representation of 9007199254740993.0, giving us:

10000000000000000000000000000000000000000000000000010.0

This also has 54 bits before the binary point and requires right shifting by 53 bits to convert to the normalized form, giving us the exponent as 53.

Notice that this time, the 2nd LSB has the value 1. Therefore, when we right shift this number by 53 bits, it becomes the LSB of the mantissa (which is 52 bits wide).

The components look like this:

Sign bit: 0

Exponent: 53 + 1023 (bias) = 1076 (10000110100 in binary)

Mantissa: 0000000000000000000000000000000000000000000000000001

IEEE-754 Representation:

0100001101000000000000000000000000000000000000000000000000000001

Unlike 9007199254740993.0, the IEEE-754 representation of 9007199254740994.0 can represent it exactly without any loss of precision. Therefore, the result of 9007199254740994 == 9007199254740994.0 is True in Python.
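A quick check of this case, assuming Python 3.9 or newer for `math.ulp` (the gap to the next representable double). The helper `double_bits` is an illustrative name of my own. Above 2^53 consecutive doubles are 2 apart, which is why the even number 2^53 + 2 survives while the odd 2^53 + 1 does not.

```python
import math
import struct


def double_bits(x: float) -> str:
    """Return the 64-bit IEEE-754 pattern of x as a string of 0s and 1s."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    return format(bits, "064b")


c = 9007199254740994.0
mantissa = double_bits(c)[12:]
print(mantissa.count("1"), mantissa[-1])  # only the last mantissa bit is set
print(int(c))                             # 9007199254740994: no precision lost
print(9007199254740994 == c)              # True

# Between 2**53 and 2**54 consecutive doubles are 2 apart, so only even integers are exact.
print(math.ulp(c))                        # 2.0
```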

How CPython Implements Float Comparison

The IEEE-754 representation of the three numbers, and the fact that Python does not perform implicit promotion of ints to doubles, explain the unexpected result(s) in the test scenarios from the Twitter post.

However, if you want to go deeper and see how Python actually performs comparison of ints and floats, then continue to read.

The function where CPython implements comparison for float objects is float_richcompare which is defined in the file floatobject.c.

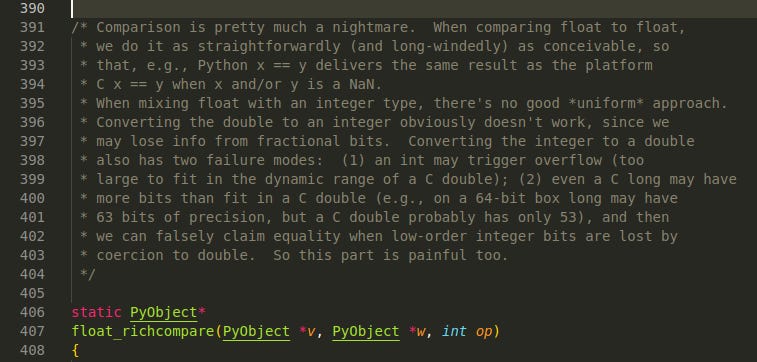

It’s a pretty big function because it needs to handle a large number of cases. The above screenshot shows the comment which explains how painful it is to compare floats and ints. I will break down the function into smaller chunks and then explain them one-by-one.

Part-1: Comparing Float vs Float

Python is dynamically typed, which means when this function is called by the interpreter for handling the == operator, it has no clue about the concrete types of the operands. The function needs to figure out the concrete types and handle accordingly. The first part of the comparison function checks if both the objects are float types, in which case it simply compares their underlying double value.

The following figure shows the first chunk from the function:

I’ve highlighted and numbered the different parts. Let’s understand what is happening, one-by-one:

1. i and j hold the underlying double values of v and w, and r holds the final result of their comparison.

2. When this function is called by the interpreter, the argument v is already known to be a Python float, which is why there is an assert for that. Its underlying double value is assigned to i.

3. Next, they check whether w is also a Python float object, in which case they can simply assign its underlying double value to j and compare the two directly.

4. But if w is not a Python float, there is an early check for the case when v is infinity (or NaN) and w is an int. In this case, they assign the value 0.0 to j, because the actual value of w doesn't matter when comparing it to infinity.
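The observable effect of this first chunk can be seen from Python, without looking at the C code. This is only a behavioural illustration: the float vs float case is plain double comparison, and an infinite float compares correctly against an int of any size because the int's value never needs to be converted.

```python
import math

# float vs float: the two doubles are compared directly, with all their usual quirks.
print(0.5 == 0.5, 0.1 + 0.2 == 0.3)        # True False

# inf vs int: the int's value is irrelevant, so even a 2001-bit int is fine.
huge = 2 ** 2000
print(math.inf > huge, -math.inf < -huge)  # True True
print(math.nan == huge)                    # False: NaN compares unequal to everything
```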

Part-2: Comparing Float vs Long

If w is not a Python float but an integer, then things get interesting. The algorithm has a bunch of cases to avoid performing actual comparison. We will cover all these cases one by one.

This first case handles the situation when v and w have opposite signs. In this situation, there is no need to perform comparison between actual values of v and w, which is what this code does. The following listing shows the code:

Let’s understand each numbered part from the code listing:

1. This line makes sure that w is a Python integer object.

2. vsign and wsign hold the signs of v and w respectively.

3. If the two values have different signs, then there is no need to compare their magnitudes; we can simply use the signs to decide.
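Here is a minimal Python sketch of the sign shortcut. The helpers `sign` and `compare_by_sign` are hypothetical names for illustration; they return -1, 0 or 1 for "v < w", "v == w" and "v > w", or None when the signs agree and a later part has to decide.

```python
def sign(x) -> int:
    """Return -1, 0 or 1, mirroring the role of vsign/wsign."""
    return (x > 0) - (x < 0)


def compare_by_sign(v: float, w: int):
    """Order v and w by sign alone if the signs differ; otherwise return None."""
    vsign, wsign = sign(v), sign(w)
    if vsign != wsign:
        # Opposite signs (or exactly one of them is zero): the signs decide the order.
        return (vsign > wsign) - (vsign < wsign)
    return None  # same sign: the magnitudes must be examined


print(compare_by_sign(-1e300, 10 ** 400))  # -1
print(compare_by_sign(2.5, -3))            # 1
print(compare_by_sign(1.0, 7))             # None
```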

Part-2.1: w is a huge integer

The next part also involves a simple case: when w is so big that its magnitude is surely larger than that of any double precision value (except infinity, which is already handled earlier). The following listing shows this code:

1. _PyLong_NumBits returns the number of bits being used to represent the int object w. However, if the int object is so large that a size_t type cannot hold the number of bits in it, then it returns -1 to indicate that.

2. So, if w is such a huge number, then its magnitude is surely larger than that of any double precision value. In this case, the comparison is straightforward.
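An int large enough to make `_PyLong_NumBits` fail cannot realistically be built, so the example below does not hit this exact branch (10**400 is handled by the exponent check described later). It does show the underlying reason these special cases exist: a Python int can exceed every finite double, so simply converting w to a double is not an option.

```python
w = 10 ** 400                 # needs far more bits than a double's exponent range allows
print(w.bit_length())         # 1329
print(1e308 < w)              # True: Python compares without converting w

try:
    float(w)                  # converting w to a double is impossible
except OverflowError as exc:
    print("OverflowError:", exc)
```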

Part-2.2: w fits into a double

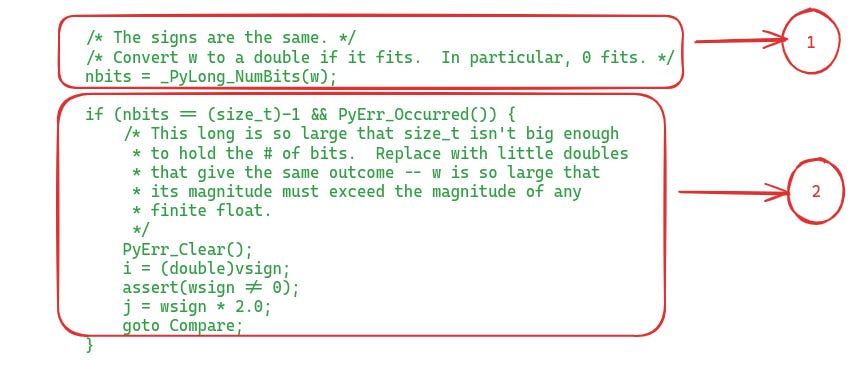

The next case is also simple. If w can fit into a double type, then we can simply typecast it to a double value and then compare the two double values. The following listing shows the code:

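It’s worth highlighting that they have used 48 bits as the criterion to decide whether to promote w’s underlying integer value to a double or not. Any integer of up to 53 bits (the 52 stored mantissa bits plus the implicit leading 1) converts to a double exactly, so 53 bits would also have been safe, while 54 bits would not: 9007199254740993 has 54 bits and cannot be converted without rounding. I’m not sure why they chose 48 bits rather than 53. Perhaps they wanted to be super sure that there was no chance of an overflow on any platform? I don’t know.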
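The sketch below models the 48-bit check and shows where exact conversion actually stops. `uses_fast_path` and `converts_exactly` are hypothetical helper names; `int.bit_length()` plays the role of `_PyLong_NumBits`, since both count the bits of the absolute value.

```python
def uses_fast_path(w: int) -> bool:
    """Stand-in for CPython's `nbits <= 48` test (bit_length ignores the sign)."""
    return w.bit_length() <= 48


def converts_exactly(w: int) -> bool:
    """True if float(w) holds exactly the same value as w."""
    return int(float(w)) == w


print(uses_fast_path(2**48 - 1), uses_fast_path(2**48))  # True False
print(converts_exactly(2**53 - 1))  # True: 53 bits always fit
print(converts_exactly(2**53 + 1))  # False: the smallest positive integer that gets rounded
```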

Part-2.3: Comparing the exponents

Most of these cases are designed to avoid comparing the actual int and float values. The final such case is to compare their exponents. If they have different exponents in their normalized form, then comparing the exponents is sufficient: the number with the higher exponent is greater (assuming both v and w are positive).

To extract the exponent of the double value in v, the CPython code uses the frexp function from the C standard library. frexp takes a double value x, and splits it into two parts: the normalized fraction value f, and the exponent e, such that x = f * 2 ^ e. The range of the normalized fraction f is [0.5, 1).

For instance, let’s consider the value 16.4. Its binary representation is 10000.0110011001100110011… (the fraction repeats forever, so the stored double is a rounded version of it). To make it a normalized fraction as per the definition of frexp, we need to right bit shift it by 5 positions, which makes it 0.100000110011001100110011… * 2^5. Therefore, the normalized fraction value is 0.5125 and the exponent is 5.
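Python exposes the same C function as `math.frexp`, so the example can be checked directly. The second half shows the property the algorithm relies on: for a positive integer-valued double, the frexp exponent equals the integer's bit length.

```python
import math

m, e = math.frexp(16.4)
print(m, e)                      # 0.5125 5
print(m * 2 ** e == 16.4)        # True: x == m * 2**e exactly
print(0.5 <= m < 1.0)            # True: the fraction is normalized to [0.5, 1)

# For an integer value, the frexp exponent matches its bit length.
print(math.frexp(float(2**53)))  # (0.5, 54)
print((2**53).bit_length())      # 54
```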

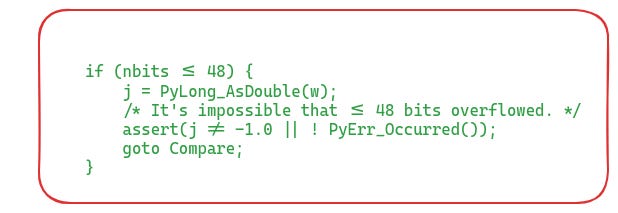

In the case of w, it is an integer, so its exponent is simply the number of bits in it, i.e. nbits. Now, let’s look at the code for this case:

Let’s go over each of the numbered parts:

1. First, they make sure that the value of v is positive. If v is negative, they negate it and swap the comparison operator (for example, < becomes >), so the rest of the code only has to deal with positive magnitudes.

2. Then they use the frexp function to split i into its normalized fraction and exponent parts.

3. Next, they check if the exponent of v is negative or smaller than the exponent of w. In that case v is definitely smaller than w.

4. Otherwise, if the exponent of v is larger than the exponent of w, then v is larger than w.
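A minimal sketch of the exponent check, under the same assumptions CPython has at this point: v > 0, w > 0, and w has more than 48 bits. The function name `compare_by_exponent` is hypothetical; it returns -1 or 1 when the exponents differ, and None when the final step is needed.

```python
import math


def compare_by_exponent(v: float, w: int):
    """Order positive v and w by exponent, or return None if the exponents are equal."""
    _, exponent = math.frexp(v)   # number of bits of v before the binary point
    nbits = w.bit_length()        # number of bits of w
    if exponent < nbits:          # also covers a negative exponent, since nbits > 0
        return -1
    if exponent > nbits:
        return 1
    return None                   # same exponent: the actual values must be compared


print(compare_by_exponent(1e20, 2**80))  # -1
print(compare_by_exponent(1e30, 2**60))  # 1
print(compare_by_exponent(9007199254740993.0, 9007199254740993))  # None
```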

Part-2.4: Both Numbers have the Same Exponents

This is the final, and the most complicated part of this comparison function. Now, the only thing left is to actually compare the two numbers.

At this point we know that v and w have the same exponents, which means that they use the same number of bits for representing their integer values. So, in order to compare v and w, it is sufficient to compare the integer value of v with w.

To split v into its integer and fraction parts, the CPython code uses the modf function from the C standard library. For instance, if the input to modf is 3.14 then it splits it into the integer part 3 and the fraction part as 0.14.

In most cases, it should be sufficient to compare the integer values of v and w. However, it is not sufficient when their integer values are equal. For instance, if v=5.75 and w=5, then their integer values are equal, but v is actually greater than w. In such cases, the fractional value of v needs to be taken into account for comparison as well.

To do this, the CPython code does a simple trick. If the fractional part of v is non-zero, they left shift both v and w’s integer values by 1 and set the LSB of v’s integer value to 1.

Why do this left shift and setting of LSB?

1. Left shifting the integer values of v and w simply multiplies both of them by 2. If v and w had the same integer values, they stay equal. If w was larger than v, it stays larger, so the comparison result remains the same.

2. Setting the LSB of v's integer value simply increments it by 1 (after the shift, the LSB is always 0). This increment accounts for the fractional value that was part of v. If v and w had equal integer values, then after setting the LSB, v's integer value is 1 larger than w's. However, if w was greater than v, then this addition of 1 to v's integer value doesn't change anything, because w's doubled value is at least 2 larger.

For instance, if v=5.75 and w=5, then their integer value is 5. Left shifting it by 1 makes it 10. Setting the LSB of v's integer value makes it 11, while w remains at 10. Thus we get the right result because 11 > 10. On the other hand, if v=5.75 and w=6, then multiplying their integer values by 2 gives 10 and 12 respectively. Adding 1 to v's integer value makes it 11. Yet w remains larger, and we get the right result.
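If you want more confidence in the trick than two examples give, a brute-force check is easy. This sketch compares `2*int(v) + 1` with `2*w` for many small positive values of v that have a non-zero fraction and checks that the ordering always matches Python's own exact comparison of v and w. The helper `cmp` is my own name.

```python
def cmp(a, b) -> int:
    """Return -1, 0 or 1 for a < b, a == b, a > b."""
    return (a > b) - (a < b)


for n in range(20):
    for frac in (0.25, 0.5, 0.75):
        v = n + frac                  # positive, with a non-zero fractional part
        for w in range(22):
            vv = (int(v) << 1) | 1    # double the integer part and set the LSB
            ww = w << 1               # double w; the result is always even
            assert cmp(vv, ww) == cmp(v, w)

print(cmp((5 << 1) | 1, 5 << 1))  # 1: the v=5.75, w=5 example (11 vs 10)
print(cmp((5 << 1) | 1, 6 << 1))  # -1: the v=5.75, w=6 example (11 vs 12)
```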

The following is the listing for this part of the code:

And, here is the breakdown:

1. fracpart and intpart hold the fractional and integer values of v. vv and ww are the Python integer objects (infinite precision) representing the integer values of v and w.

2. They call the modf function to extract the integer and fractional parts of v.

3. This part handles the case when v has a non-zero fractional component. You can see they left shift vv and ww by 1, and then set the LSB of vv to 1.

4. Now, it’s just a matter of comparing these two integer values vv and ww to decide the return value.
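Here is the final step as a short Python sketch, assuming (as CPython does at this point) that v > 0, w > 0 and both have the same exponent. `compare_same_exponent` is a hypothetical name. Note that Python's `math.modf` returns `(fraction, integer)`, while the C function returns the fraction and writes the integer part through a pointer.

```python
import math


def compare_same_exponent(v: float, w: int) -> int:
    """Return -1, 0 or 1 for v < w, v == w, v > w (positive values, equal exponents)."""
    fracpart, intpart = math.modf(v)
    vv = int(intpart)             # exact: a finite double's integer part fits in a Python int
    ww = w
    if fracpart != 0.0:
        vv = (vv << 1) | 1        # shift left and OR in a 1 bit for the lost fraction
        ww = ww << 1
    return (vv > ww) - (vv < ww)


print(compare_same_exponent(9007199254740992.0, 9007199254740992))  # 0
print(compare_same_exponent(9007199254740993.0, 9007199254740993))  # -1
print(compare_same_exponent(2.0**49 + 0.5, 2**49))                  # 1
```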

Summary of Python’s Float Comparison Algorithm

Let’s summarize the overall algorithm without the actual code:

1. If both v and w are float objects, then Python simply compares their underlying double values.

2. However, if w is an integer object, then:

   a. If they have opposite signs, then it’s sufficient to compare the signs.

   b. Else if w is a huge integer (Python has infinite precision ints), then we can also skip comparing the actual numbers, because w is larger in magnitude.

   c. Else if w fits within 48 bits or less, then Python converts w into a double, and then does a direct comparison between v's and w's double values.

   d. Else if the exponents of v and w in their normalized fraction form are not equal, then it is sufficient to decide based on their exponents.

   e. Else, compare the actual numbers. To do this, split v into its integer and fraction parts and then compare v's integer part with w (while taking into account the fractional part of v).
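Putting the steps together, here is a pure-Python model of the float-vs-int ordering. It is a sketch of the algorithm as summarized, not CPython's implementation: `float_int_cmp` is a hypothetical name, NaN is not handled, and the size_t overflow case is left out because no realistically sized int can trigger it. The usage check compares the model against Python's own operators.

```python
import math


def float_int_cmp(v: float, w: int) -> int:
    """Return -1, 0 or 1 for v < w, v == w, v > w (v must not be NaN)."""
    if math.isinf(v):
        return 1 if v > 0 else -1                   # infinity is beyond every int
    vsign = (v > 0) - (v < 0)
    wsign = (w > 0) - (w < 0)
    if vsign != wsign:
        return (vsign > wsign) - (vsign < wsign)    # opposite signs decide
    nbits = w.bit_length()
    if nbits <= 48:
        j = float(w)                                # exact conversion
        return (v > j) - (v < j)
    flip = 1
    if vsign < 0:                                   # compare magnitudes, then flip the result
        v, w, flip = -v, -w, -1
    _, exponent = math.frexp(v)
    if exponent < nbits:
        return -flip
    if exponent > nbits:
        return flip
    fracpart, intpart = math.modf(v)                # same exponent: compare actual values
    vv, ww = int(intpart), w
    if fracpart != 0.0:
        vv, ww = (vv << 1) | 1, ww << 1
    return flip * ((vv > ww) - (vv < ww))


cases = [
    (9007199254740992.0, 9007199254740992),
    (9007199254740993.0, 9007199254740993),
    (9007199254740994.0, 9007199254740994),
    (-9007199254740993.0, -9007199254740993),
    (2.0**49 + 0.5, 2**49),
    (1e308, 10**400),
]
for v, w in cases:
    assert float_int_cmp(v, w) == (v > w) - (v < w)
```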

Getting Back to Our Investigation

Based on all this knowledge of the IEEE-754 standard and of how CPython compares floats and ints, let’s get back to the test scenarios and reason about the output.

Scenario 1: 9007199254740992 == 9007199254740992.0

>>> 9007199254740992 == 9007199254740992.

True

We have v=9007199254740992.0 and w=9007199254740992.

We have already figured out the mantissa and exponent of 9007199254740992.0. The exponent is 53 and the mantissa is 0000000000000000000000000000000000000000000000000000.

Also, the number 9007199254740992 is 2^53, which means that it needs 54 bits to be represented in memory (nbits=54)

Let’s see which part of the float comparison algorithm will handle this:

1. w is an integer, so part-1 does not apply.

2. The signs of v and w are the same, so part-2 does not apply.

3. w fits in 54 bits, i.e. it's not huge, so part-2.1 does not apply.

4. w needs more than 48 bits, so part-2.2 does not apply.

5. The exponent for both v and w in their normalized fraction form is 54, i.e. they have equal exponents, so part-2.3 also does not apply.

This leads to the final part, part-2.4. Let’s walk through it.

We need to extract the integer and fraction parts of v, account for the fraction part if it is non-zero (by doubling both integers and adding one to v's), and then compare the integer value with w.

In this case, v=9007199254740992.0 has integer part 9007199254740992 and fraction part 0. The integer value of w is also 9007199254740992. So Python does a direct comparison of the two integers and returns the result as True.

Scenario 2: 9007199254740993 == 9007199254740993.0

>>> 9007199254740993 == 9007199254740993.

False

This was an unexpected result. Let’s analyze.

We have v=9007199254740993.0, and w=9007199254740993

Both these values are 1 greater than the values from the previous scenario, i.e. v = w = 2^53 + 1.

In binary, 9007199254740993 is 100000000000000000000000000000000000000000000000000001.

Recall that when we were finding its IEEE-754 representation, we found that this number cannot be represented exactly and it has the same representation as 9007199254740992.0.

So, for Python, the comparison is effectively between 9007199254740993 and 9007199254740992.0. Now, let’s see what happens inside the CPython algorithm.

Just like the previous scenario, we will land in the last part of the algorithm (part-2.4) where v’s integer and fraction part need to be extracted, and then the integer part needs to be compared with the value of w.

Internally, 9007199254740993.0 is actually represented as 9007199254740992.0, so its integer part is 9007199254740992. Meanwhile, w’s value is 9007199254740993. So comparing those two for equality results in False.

In a language with implicit promotion rules, w with the value 9007199254740993 would also have been represented as 9007199254740992.0, and the comparison result would have been True.
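The walk-through for this scenario can be reproduced with the standard `math` functions. The last line mimics what an implicit int-to-double promotion would do: `float(w)` rounds to 2^53 as well, so the two sides become equal.

```python
import math

v = 9007199254740993.0   # parsed (and rounded) to the double 2**53
w = 9007199254740993     # exact Python int, 2**53 + 1

print(math.frexp(v)[1], w.bit_length())  # 54 54: equal exponents, so part-2.4 runs
fracpart, intpart = math.modf(v)
print(fracpart, int(intpart))            # 0.0 9007199254740992
print(int(intpart) == w)                 # False, matching `w == v` in Python

# What an implicit int-to-double promotion would do instead:
print(float(w) == v)                     # True: float(w) also rounds to 2**53
```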

Scenario 3: 9007199254740994 == 9007199254740994.0

>>> 9007199254740994 == 9007199254740994.

True

Unlike the previous scenario, 9007199254740994.0 can be represented accurately using the IEEE-754 format. Its components are:

Sign bit: 0

Exponent: 53 + 1023 (bias) = 1076 (10000110100 in binary)

Mantissa: 0000000000000000000000000000000000000000000000000001

This, too, leads to the final part of the algorithm, where the integer parts of v and w need to be compared. In this case, they have the same values and the equality comparison results in True.

Conclusion

The IEEE-754 standard and floating-point arithmetic are inherently complex, and comparing floating-point numbers isn't straightforward. Languages like C and Java implement implicit type promotion, converting the integer to a double and comparing the two double values. However, this can still lead to unexpected results due to precision loss.

Python is unique in this respect. It has infinite precision integers, making type promotion infeasible in many situations. Consequently, Python uses a specialized algorithm to compare these numbers, which has its own edge cases.

Whether you are using C, Java, Python, or another language, it's advisable to use library functions for comparing floating-point values rather than performing direct comparisons to avoid potential pitfalls.
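In Python, the standard library function for this is `math.isclose`, which checks whether two values are within a relative tolerance (default `rel_tol=1e-09`) or an absolute tolerance (`abs_tol`, default 0.0, worth setting when comparing against values near zero). A brief illustration:

```python
import math

a = 0.1 + 0.2
print(a == 0.3)                   # False
print(math.isclose(a, 0.3))       # True with the default relative tolerance

# Near zero, a relative tolerance alone is too strict; an absolute one helps.
print(math.isclose(1e-12, 0.0))                # False
print(math.isclose(1e-12, 0.0, abs_tol=1e-9))  # True

# The int/float pair from scenario 2 is "close" even though == says False.
print(math.isclose(9007199254740993, 9007199254740993.0))  # True
```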

To be honest, I had never thought to look at how Python does integer and float comparison before this article. Now that I’ve looked at it, I feel a bit more enlightened about the complications that floating point math brings, and I hope you do, too!

Support Confessions of a Code Addict

If you find my work interesting and valuable, you can support me by opting for a paid subscription (it’s $6 monthly/$60 annual). As a bonus, you get access to monthly live sessions and past recordings.

Many people report failed payments, or don’t want a recurring subscription. For that, I also have a buymeacoffee page, where you can buy me coffees or become a member. I will upgrade you to a paid subscription for the equivalent duration here.

I also have a GitHub Sponsor page. You will get a sponsorship badge, and also a complimentary paid subscription here.
