What Every Developer Should Know About GPU Computing

A primer on GPU architecture and computing

Most programmers have some understanding of the basics of CPUs and sequential programming because they grow up writing code for the CPU, but many are less familiar with the inner workings of GPUs and what makes them so special. Over the past decade, GPUs have become incredibly important because of their pervasive use in deep learning. Today, it is essential for every software engineer to possess a basic understanding of how they work. My goal with this article is to give you that background.

Much of this article is based on the book “Programming Massively Parallel Processors”, 4th edition, by Hwu et al. As the book covers Nvidia GPUs, I will be talking about Nvidia GPUs and using Nvidia-specific terminology. However, the fundamental concepts and approach to GPU programming apply to other vendors as well.

Comparing CPU and GPU

We will start by doing a comparison between CPU and GPU which will give us a better vantage point of the GPU landscape. However, this is a topic of its own and we cannot possibly squeeze everything in one section. So, we will stick to a few key points.

The major difference between CPUs and GPUs is in their design goals. CPUs were designed to execute sequential instructions [1]. To improve their sequential execution performance, many features have been introduced in the CPU design over the years. The emphasis has been on reducing the instruction execution latency so that CPUs can execute a sequence of instructions as fast as possible. This includes features like instruction pipelining, out-of-order execution, speculative execution and multilevel caches (just to list a few).

GPUs on the other hand have been designed for massive levels of parallelism and high throughput, at the cost of medium to high instruction latency. This design direction has been influenced by their use in video games, graphics, numerical computing, and now deep learning. All of these applications need to perform a ton of linear algebra and numerical computations at a very fast rate, because of which a lot of attention has gone into improving the throughput of these devices.

Let’s consider a concrete example. A CPU can add two numbers much faster than a GPU because of its low instruction latency, and it can do several such computations in sequence faster than a GPU. However, when it comes to doing millions or billions of such computations, a GPU will finish them much faster than a CPU because of its massive parallelism.
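As a rough illustration of this trade-off, the toy model below estimates how long it takes to finish a number of independent additions on a device with a given per-operation latency and a given number of parallel lanes. The function name `time_to_finish` and every number in it (latencies, lane counts) are made up for illustration and do not describe any real CPU or GPU.

```python
def time_to_finish(num_ops, latency, lanes):
    """Toy estimate: operations run in batches of `lanes`,
    and every batch takes `latency` time units."""
    batches = (num_ops + lanes - 1) // lanes  # ceiling division
    return batches * latency

# Hypothetical devices: the "CPU" is 10x faster per operation
# but has far fewer parallel lanes than the "GPU".
CPU = {"latency": 1, "lanes": 8}
GPU = {"latency": 10, "lanes": 8192}

for n in (1, 1_000_000):
    cpu_time = time_to_finish(n, **CPU)
    gpu_time = time_to_finish(n, **GPU)
    print(f"n={n}: cpu={cpu_time}, gpu={gpu_time}")
```

With these made-up numbers, a single addition finishes sooner on the CPU (1 versus 10 time units), while a million additions finish roughly 100 times sooner on the GPU (1,230 versus 125,000 time units).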

If you like numbers, let’s talk about numbers. The performance of hardware for numerical computations is measured in terms of how many floating point operations it can do per second (FLOPS). The Nvidia Ampere A100 offers a throughput of 19.5 TFLOPS for 32-bit precision. In comparison, the throughput of a 24-core Intel processor is 0.66 TFLOPS for 32-bit precision (these numbers are from 2021), which makes the A100 roughly 30 times faster on this measure. This gap in throughput between GPUs and CPUs has been growing wider with each passing year.

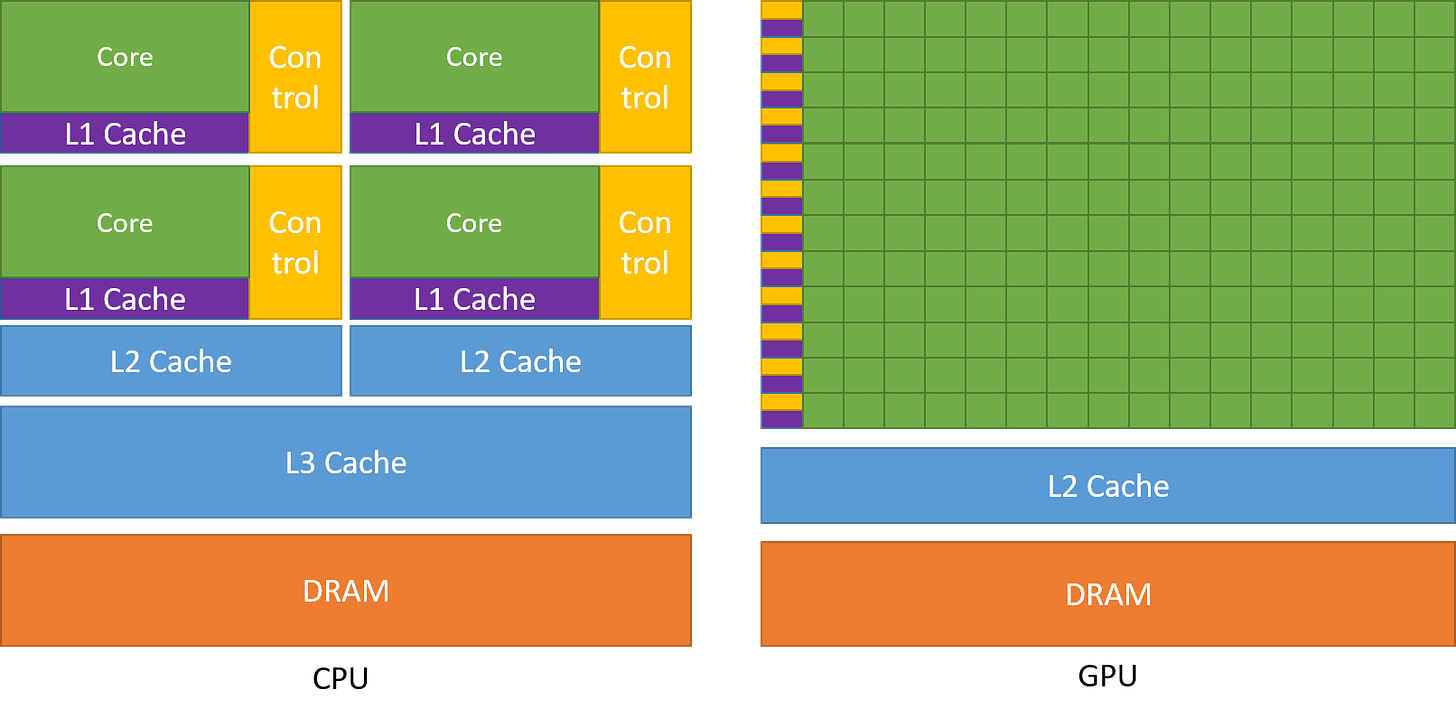

The following figure compares the architectures of CPUs and GPUs.

As you can see, CPUs dedicate a significant amount of chip area to features that reduce instruction latency, such as large caches and more control units, and they have fewer ALUs. In contrast, GPUs use a large number of ALUs to maximize their computation power and throughput. They use a very small amount of the chip area for caches and control units, the things which reduce the latency for CPUs.

Latency Tolerance and High Throughput

You might wonder how GPUs tolerate high latencies and yet provide high performance. This is made possible by the large number of threads and the massive compute power that GPUs have. Even if individual instructions have high latency, GPUs schedule threads for execution efficiently so that the compute power is in use at every point in time. For instance, while some threads are waiting for the result of an instruction, the GPU switches to executing other threads that are not waiting. This ensures that the compute units on the GPU operate at their maximum capacity at all times, thus providing high throughput. We will get a clearer picture of this later when we discuss how a kernel executes on the GPU.

GPU Architecture

So we understand that GPUs favor high throughput, but what does the architecture that enables this look like? Let’s discuss that in this section.

GPU Compute Architecture

A GPU consists of an array of streaming multiprocessors (SM). Each of these SMs in turn consists of several streaming processors, also called cores. For instance, the Nvidia H100 (SXM5) GPU has 132 SMs with 128 FP32 cores per SM, totalling a whopping 16,896 FP32 cores.

Each SM has a limited amount of on-chip memory, often referred to as shared memory or a scratchpad, which is shared among all the cores. Likewise, the control unit resources on the SM are shared by all the cores. Additionally, each SM is equipped with hardware-based thread schedulers for executing threads.

Apart from these, each SM also has several functional units or other accelerated compute units such as tensor cores, or ray tracing units to serve specific compute demands of the workload that the GPU caters to.

Next, let’s break down the GPU memory and look inside.

GPU Memory Architecture

The GPU has several layers of different kinds of memory, each with its own use case. The following figure shows the memory hierarchy for one SM in the GPU.

Let’s break it down.

Registers: We will start with the registers. Each SM in the GPU has a large number of registers. For instance, the Nvidia A100 and H100 models have 65,536 registers per SM. These registers are shared between the cores, and are allocated to them dynamically depending on the requirement of the threads. During execution, the registers allocated to a thread are private to it, i.e., other threads cannot read or write those registers.

Constant Caches: Next, we have constant caches on the chip. These are used to cache constant data used by the code executing on the SM. To utilize these caches, programmers have to explicitly declare objects as constants in the code so that the GPU may cache and keep them in the constant cache.

Shared Memory: Each SM also has a shared memory or scratchpad which is a small amount of fast and low latency on-chip programmable SRAM memory. It is designed to be shared by a block of threads running on the SM. The idea behind shared memory is that if multiple threads need to work with the same piece of data, only one of them should load it from the global memory, while others will share it. Careful usage of shared memory can cut down redundant load operations from global memory, and improve the kernel execution performance. Another usage of the shared memory is as a synchronization mechanism between threads executing within a block.
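To get a feel for how much redundant traffic shared memory can remove, consider a hypothetical kernel in which thread i of a block sums a small window of consecutive elements starting at index i. The sketch below only counts global-memory loads for one block. The function names and the window example are assumptions made for illustration, and the model ignores any help from the L1 and L2 caches.

```python
def loads_without_shared_memory(block_size, window):
    """Every thread loads its whole window from global memory on its own."""
    return block_size * window

def loads_with_shared_memory(block_size, window):
    """The block loads each distinct element once into shared memory,
    and the threads then reuse it from there."""
    # Neighbouring threads' windows overlap, so the whole block only
    # touches block_size + window - 1 distinct elements.
    return block_size + window - 1

block_size, window = 256, 3
print(loads_without_shared_memory(block_size, window))  # 768
print(loads_with_shared_memory(block_size, window))     # 258
```

In this toy case, staging the data in shared memory cuts the global loads for the block from 768 to 258, and the saving grows with the window size.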

L1 Cache: Each SM also has an L1 cache which can cache frequently accessed data from L2 cache.

L2 Cache: There is an L2 cache which is shared by all SMs. It caches the frequently accessed data from the global memory to cut down the latency. Note that both L1 and L2 caches are transparent to the SM, i.e., the SM doesn’t know it is getting data from L1 or L2. As far as the SM is concerned, it is getting data from the global memory. This is similar to how L1/L2/L3 caches work in CPUs.

Global Memory: The GPU also has an off-chip global memory, which is a high capacity and high bandwidth DRAM. For instance, the Nvidia H100 has 80 GB high bandwidth memory (HBM) with bandwidth of 3000 GB/second. Due to being far away from the SMs, the latency of global memory is quite high. However, the several additional layers of on-chip memories, and high number of compute units help hide this latency.

Now that we know about the key components of the GPU hardware, let’s go one step deeper and understand how these components come into picture when executing code.

Understanding the Execution Model of the GPU

To understand how the GPU executes a kernel, we first need to understand what a kernel is and what its configurations are. Let’s start there.

A Brief Introduction to CUDA Kernels and Thread Blocks

CUDA is the programming interface provided by Nvidia for writing programs for their GPUs. In CUDA, you express a computation that you want to run on the GPU in a form similar to a C/C++ function, and this function is called a kernel. The kernel operates in parallel on vectors of numbers, which are provided to it as function parameters. A simple example would be a kernel to perform vector addition, i.e., a kernel that takes two vectors of numbers as inputs, adds them element-wise and writes the result to a third vector.

To execute a kernel on the GPU, we need to launch a number of threads, which are collectively referred to as a grid. But there is more structure to the grid. A grid consists of one or more thread blocks (sometimes simply called blocks), and each block consists of one or more threads.

The number of blocks and threads depends on the size of the data and the amount of parallelism we want. For instance, in our vector addition example, if we are adding vectors of dimension 256, then we may decide to configure a single thread block of 256 threads so that each thread operates on one element of the vector. For bigger problems, we may not have enough threads available on the GPU and we might want each thread to handle multiple data points.
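The mapping from blocks and threads to vector elements can be sketched in plain Python. This is a sequential CPU emulation, not CUDA: the two loops stand in for threads that would run in parallel on the GPU, and the names `blocks_needed`, `vector_add_emulated` and `max_blocks` are hypothetical. The inner `while` loop shows one way a thread can handle multiple data points when fewer threads than elements are launched.

```python
def blocks_needed(n, threads_per_block):
    """Smallest number of blocks such that blocks * threads_per_block >= n."""
    return (n + threads_per_block - 1) // threads_per_block

def vector_add_emulated(a, b, threads_per_block=256, max_blocks=None):
    """Emulate an element-wise vector addition over a grid of thread blocks."""
    n = len(a)
    num_blocks = blocks_needed(n, threads_per_block)
    if max_blocks is not None:
        # Launch fewer threads than elements on purpose.
        num_blocks = min(num_blocks, max_blocks)
    total_threads = num_blocks * threads_per_block
    c = [0] * n
    # On a GPU these two loops would run in parallel, one thread per (block, thread) pair.
    for block_idx in range(num_blocks):
        for thread_idx in range(threads_per_block):
            # Global index of this thread within the whole grid.
            i = block_idx * threads_per_block + thread_idx
            # Bounds check plus stride: the thread handles i, i + total_threads, ...
            while i < n:
                c[i] = a[i] + b[i]
                i += total_threads
    return c

print(blocks_needed(256, 256))   # 1
print(blocks_needed(1000, 256))  # 4
print(vector_add_emulated([1, 2, 3], [10, 20, 30], threads_per_block=2))  # [11, 22, 33]
```

Note that 1000 elements with 256 threads per block need 4 blocks, so the last block has 24 threads with nothing to do. That is why the index is checked against the vector length before it is used.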

As far as implementation goes, writing a kernel requires two parts. One is the host code which executes on the CPU. This is where we load the data, allocate memory on the GPU, and launch the kernel with a configured grid of threads. The second part is the device (GPU) code, which executes on the GPU.

For our vector addition example, the following figure shows the host code.

And the following is the device code, which defines the actual kernel function.

As the focus of this article is not teaching CUDA, we will not be going any deeper in this code. Now, let’s look at the exact steps behind the execution of a kernel on the GPU.

Steps Behind Execution of a Kernel on the GPU

1. Copying Data from Host to Device

Before the kernel can be scheduled for execution, all the data that it needs has to be copied from the memory of the host (the CPU) onto the global memory of the GPU (the device). That said, on recent GPU hardware a kernel can also read directly from host memory using unified virtual memory (see section 2.2 of the paper “EMOGI: Efficient Memory-access for Out-of-memory Graph-traversal in GPUs”).

2. Scheduling of the Thread Blocks on SMs

After the GPU has all the necessary data in its memory, it assigns the thread blocks to the SMs. All threads within a block are processed by the same SM at the same time. To make this happen, the GPU must set aside resources on the SM for those threads before it can start executing them. In practice, multiple thread blocks can be assigned to the same SM for simultaneous execution.

As there are a limited number of SMs and large kernels can have a very large number of blocks, not all the blocks may get assigned for execution immediately. The GPU maintains a list of blocks which are waitlisted for assignment and execution. As and when any block finishes execution, the GPU assigns one of the waitlisted blocks for execution.
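The waitlist behaviour described here can be modelled with a small event simulation. This is only a sketch under simplifying assumptions: every SM offers a fixed number of block slots, blocks are handed out in launch order, and each block's running time is known in advance. The real hardware scheduler is more involved, and the name `run_grid` and its parameters are hypothetical.

```python
import heapq
from collections import deque

def run_grid(block_durations, num_sms, blocks_per_sm):
    """Return the time at which the last block of the grid finishes."""
    waitlist = deque(block_durations)   # blocks not yet assigned to an SM
    running = []                        # min-heap of finish times of resident blocks
    slots = num_sms * blocks_per_sm     # blocks that can be resident at once
    now = 0
    # Fill every free slot before execution starts.
    while waitlist and len(running) < slots:
        heapq.heappush(running, now + waitlist.popleft())
    # Whenever a block finishes, hand its slot to a waitlisted block.
    while running:
        now = heapq.heappop(running)
        if waitlist:
            heapq.heappush(running, now + waitlist.popleft())
    return now

# 10 equally long blocks on 2 SMs with 2 slots each: three rounds of work.
print(run_grid([5] * 10, num_sms=2, blocks_per_sm=2))  # 15
```

With four slots and ten blocks of equal length, the blocks run in three rounds, so the grid takes three times as long as a single block.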

3. Single Instruction Multiple Threads (SIMT) and Warps

We know that all threads of a block are assigned to the same SM. But there’s another level of division of threads after this. These threads are further divided into groups of 32 (called warps [2]), and each warp is assigned for execution to a set of cores called a processing block.

The SM executes all the threads within a warp together by fetching and issuing the same instruction to all of them. These threads then execute that instruction simultaneously, but on different parts of the data. In our vector addition example, all the threads in a warp might be executing the add instruction, but they would be operating on different indices of the vectors.

This execution model of the warp is also called single instruction multiple threads (SIMT) because multiple threads are executing the same instruction. It is similar to the single instruction multiple data (SIMD) instructions in CPUs.
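To make the grouping concrete, here is a small Python sketch of how the threads of a one-dimensional block fall into warps, and of one instruction being applied by every lane of a warp to its own element. It is a sequential emulation with hypothetical helper names (`warps_in_block`, `warp_and_lane`, `execute_warp_add`), and it assumes that consecutive thread indices are grouped into the same warp.

```python
WARP_SIZE = 32  # warp size on current Nvidia GPUs

def warps_in_block(threads_per_block):
    """Number of warps needed to hold all threads of a block."""
    return (threads_per_block + WARP_SIZE - 1) // WARP_SIZE

def warp_and_lane(thread_idx):
    """Map a thread's index within its block to (warp index, lane within the warp)."""
    return divmod(thread_idx, WARP_SIZE)

def execute_warp_add(a, b, c, first_index):
    """One 'add' issued for the whole warp: every lane applies it to its own element."""
    for lane in range(WARP_SIZE):       # on the GPU the lanes run together
        i = first_index + lane
        if i < len(c):                  # lanes past the end of the data stay idle
            c[i] = a[i] + b[i]

print(warps_in_block(256))  # 8
print(warps_in_block(100))  # 4 (the last warp has only 4 useful threads)
print(warp_and_lane(70))    # (2, 6)
```

A block size that is not a multiple of 32 still occupies whole warps, which is one reason block sizes are usually chosen as multiples of the warp size.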

Newer generations of GPUs, starting with Volta, offer an alternative instruction scheduling mechanism known as independent thread scheduling. It allows full concurrency between threads, regardless of warp. It can be used to make better use of the execution resources, or as a synchronization mechanism between threads. We will not cover independent thread scheduling here, but you can read about it in the CUDA programming guide.

4. Warp Scheduling and Latency Tolerance

There are some interesting details about how warps work that are worth discussing.

Even if all the processing blocks (groups of cores) within an SM are handling warps, only a few of them are actively executing instructions at any given moment. This happens because there are a limited number of execution units available in the SM.

But some instructions take longer to complete, causing a warp to wait for the result. In such cases, the SM puts that waiting warp to sleep and starts executing another warp that doesn't need to wait for anything. This enables the GPUs to maximally utilize all the available compute and deliver high throughput.

Zero-overhead Scheduling: As each thread in each warp has its own set of registers, there is no overhead for the SM to switch from executing one warp to another.

This is in contrast to how context switching between processes happens on the CPU. If a process is waiting for a long-running operation, the CPU schedules another process on that core in the meantime. However, context switching on a CPU is expensive because the CPU needs to save the registers of the current process into main memory and restore the state of the other process.
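The latency hiding described in this step can be illustrated with a toy scheduler. The model below is a deliberate simplification and not how real hardware is specified: the SM has a single issue slot per cycle, every instruction keeps its warp waiting for a fixed `latency` in cycles, and switching warps costs nothing. The function name `simulate` and its parameters are hypothetical.

```python
def simulate(num_warps, instrs_per_warp, latency):
    """Return the fraction of cycles in which the SM issued an instruction."""
    ready_at = [0] * num_warps                 # cycle at which each warp may issue again
    remaining = [instrs_per_warp] * num_warps  # instructions left per warp
    total = num_warps * instrs_per_warp
    issued = 0
    cycle = 0
    while issued < total:
        # Pick the first warp that is not waiting on a previous instruction.
        for w in range(num_warps):
            if remaining[w] > 0 and ready_at[w] <= cycle:
                remaining[w] -= 1
                ready_at[w] = cycle + latency  # the warp now waits for its result
                issued += 1
                break                          # only one issue slot per cycle
        cycle += 1
    return issued / cycle if cycle else 0.0

for warps in (1, 2, 4, 8):
    print(warps, f"{simulate(warps, instrs_per_warp=10, latency=4):.2f}")
```

With a latency of 4 cycles, a single warp keeps the issue slot busy only about 27% of the time, while four or more warps keep it busy in every cycle. This is the sense in which having many resident warps hides instruction latency.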

5. Copying of Result Data From Device to Host Memory

Finally, when all the threads of the kernel have finished executing, the final step is to copy the result back to the host memory.

We have covered the steps of a typical kernel execution, but there is one more thing that requires its own section: dynamic resource partitioning.

Resource Partitioning and the Concept of Occupancy

We measure the utilization of the GPU resources through a metric called “occupancy”, which represents the ratio of the number of warps assigned to an SM to the maximum number it can support. To achieve maximum throughput, we would want to have 100% occupancy. However, in practice it is not always possible due to various constraints.

So, why can't we always reach 100% occupancy? The SM has a fixed set of execution resources, including registers, shared memory, thread block slots, and thread slots. These resources are dynamically divided among threads based on their requirements and the GPU's limits. For example, on the Nvidia H100, each SM can handle 32 blocks, 64 warps (i.e., 2048 threads), and 1024 threads per block. If we launch a grid with a block size of 1024 threads, the GPU will split the 2048 available thread slots into 2 blocks.

Dynamic vs Fixed partitioning: Dynamic partitioning allows for more effective usage of the computation resources in the GPU. Compare this with a fixed partitioning scheme, where each thread block receives a fixed amount of execution resources regardless of its needs. Such a scheme is not always efficient: in some cases the threads are assigned more resources than they need, leading to wasted resources and reduced throughput.

Now, let's look at an example to see how resource allocation can affect the occupancy of an SM. If we use a block size of 32 threads and need a total of 2048 threads, we'll have 64 of these blocks. However, each SM can only handle 32 blocks at once. So, even though the SM can run 2048 threads, it will only be running 1024 threads at a time, resulting in a 50% occupancy rate.

Similarly, each SM has 65536 registers. To execute 2048 threads simultaneously, each thread can have a maximum of 32 registers (65536/2048 = 32). If a kernel needs 64 registers per thread, we can only run 1024 threads per SM, again resulting in 50% occupancy.
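Both examples can be reproduced with a small occupancy calculator. This is a simplified sketch: the default limits are the H100 figures quoted above, the function name `occupancy` is hypothetical, and the model ignores shared memory usage and the granularity at which real hardware allocates registers.

```python
WARP_SIZE = 32

def occupancy(threads_per_block, regs_per_thread,
              max_threads_per_sm=2048, max_blocks_per_sm=32,
              regs_per_sm=65536):
    """Estimate occupancy as resident warps divided by the maximum warps per SM."""
    # How many blocks fit under each individual limit.
    by_threads = max_threads_per_sm // threads_per_block
    by_blocks = max_blocks_per_sm
    by_regs = regs_per_sm // (regs_per_thread * threads_per_block)
    # The tightest limit decides how many blocks are resident.
    resident_blocks = min(by_threads, by_blocks, by_regs)
    warps_per_block = (threads_per_block + WARP_SIZE - 1) // WARP_SIZE
    max_warps = max_threads_per_sm // WARP_SIZE
    return resident_blocks * warps_per_block / max_warps

print(occupancy(threads_per_block=32, regs_per_thread=32))    # 0.5, limited by block slots
print(occupancy(threads_per_block=1024, regs_per_thread=64))  # 0.5, limited by registers
print(occupancy(threads_per_block=1024, regs_per_thread=32))  # 1.0
```

Taking the minimum over the per-resource limits mirrors the reasoning in the two examples: whichever resource runs out first caps the number of resident blocks, and with it the occupancy.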

The challenge with suboptimal occupancy is that it may not provide the necessary tolerance for latency or the required compute throughput to reach the hardware’s peak performance.

Creating efficient GPU kernels is a complex task. We must allocate resources wisely to maintain high occupancy while minimizing latency. For example, using many registers per thread can make the code run quickly but might reduce occupancy, so careful code optimization is important.

Summary

I understand that wrapping your head around so many new terms and concepts is daunting. Let’s summarize the key points for a quick review.

A GPU consists of several streaming multiprocessors (SM), where each SM has several processing cores.

There is an off-chip global memory, which is HBM or DRAM. It is far from the SMs on the chip and has high latency.

There is an L2 cache shared by all the SMs and an L1 cache on each SM. These L1 and L2 caches operate similarly to how L1/L2 caches operate in CPUs.

There is a small amount of configurable shared memory on each SM. This is shared between the cores. Typically, threads within a thread block load a piece of data into the shared memory and then reuse it instead of loading it again from global memory.

Each SM has a large number of registers, which are partitioned between threads depending on their requirement. The Nvidia H100 has 65,536 registers per SM.

To execute a kernel on the GPU, we launch a grid of threads. A grid consists of one or more thread blocks and each thread block consists of one or more threads.

The GPU assigns one or more blocks for execution on an SM depending on resource availability. All threads of one block are assigned and executed on the same SM. This is for leveraging data locality and for synchronization between threads.

The threads assigned to an SM are further divided into groups of 32, called warps. All the threads within a warp execute the same instruction at the same time, but on different parts of the data (SIMT). (Although newer generations of GPUs also support independent thread scheduling.)

The GPU performs dynamic resource partitioning between the threads based on each thread's requirements and the limits of the SM. The programmer needs to carefully optimize code to ensure the highest level of SM occupancy during execution.

Wrapping Up

GPUs are in pervasive use today, but their architecture and execution model are fundamentally very different from those of CPUs. In this article we covered various aspects of GPUs, including their architecture and their execution model. If you're curious about what makes GPUs so sought after and how they operate, I hope this article has provided some valuable insights. If you have any questions about what we discussed here, feel free to ask them in the comments, or reach out to me on Twitter/X.

Acknowledgement

I would like to thank Vikram Sharma Mailthody, who is a Senior Research Scientist at Nvidia, for reviewing and offering insights on various parts of the article. His feedback helped improve the quality of the article significantly. I am very grateful. Vikram is very interested in increasing awareness about GPU programming, so if you are interested in learning more about this area, reach out to him on Twitter or LinkedIn.

Further Resources

If you want to dive deeper into GPUs, here are a few resources you could refer to:

Programming Massively Parallel Processors: 4th edition is the most up-to-date reference, but earlier editions are fine, too.

Programming Massively Parallel Processors: online course by Prof. Hwu

[1] Yes, CPUs can do things in parallel too, thanks to hyper-threading and multicore. However, for a long time, a lot of effort went into improving the performance of sequential execution.

[2] On the current generation of Nvidia GPUs, the warp size is 32. But it may change in future iterations of the hardware.
