Compiling Python to Run Anywhere

A guest post on building a Python compiler that generates optimized kernels while preserving the language’s simplicity.

Foreword

A recurring theme of this newsletter is going under the hood: how interpreters, compilers, and runtimes actually work, and what performance trade‑offs they force on us. Python is a perfect case study: it’s beloved for its simplicity, but that same simplicity often means poor performance when the workloads get serious.

That’s why I’m really excited to share this guest post by Yusuf Olokoba, founder of Muna. In this piece, he looks at how Python could be pushed beyond its usual limits of speed and portability, laying out a compiler that turns ordinary code into fast, portable executables.

Instead of building another JIT or rewriting everything in C++, his approach generates optimized kernels while keeping the Python source unchanged. This ties directly to themes I’ve written about before, such as CPython internals, and performance engineering. All of those pieces showed why understanding systems at the lowest level matters. Yusuf’s work demonstrates the payoff of that mindset: the ability to design and build new systems on top of that knowledge.

Introduction

I first met Abhinav at the start of 2024, trying to learn more about how the Python interpreter worked under the hood. I had reached out to Abhinav with a singular goal in mind: to build something that could compile pristine Python code into cross-platform machine code.

This idea has been attempted in many forms before: runtimes (Jython, RustPython), DSLs (Numba, PyTorch), and even entirely new programming languages (Mojo). But for reasons we will explore later in this article, we needed something that could:

Compile Python entirely ahead-of-time, with no modifications.

Run without a Python interpreter, or anything other interpreter.

Run with minimal overhead compared to a raw C or C++ program.

Most importantly, run anywhere—server, desktop, mobile, and web.

In this article, I will walk through how this seemingly crazy idea came about, how we began building a solution, how AI happened to be the missing piece, and how we’ve grown to serve thousands of unique devices each month with these compiled Python functions.

Containers Are the Wrong Way to Distribute AI

I got my start in AI research around 2018, back when we called it “deep learning”. I had taken a year off from college and was coming off my first startup experience as co-founder of a venture-backed proptech startup that would later get acquired. One very interesting problem I had encountered in this journey was image editing for residential listings. Each month, a real estate photographer would outsource thousands of photos of homes to be hand-edited in Photoshop and Lightroom, before being posted on the regional MLS or on Zillow.

I teamed up with an old friend and we set out to build a fully automated image editor, using a new class of vision AI models called Generative Adversarial Networks (GANs). We would train our custom model architectures on our datasets, then test rigorously to ensure that the models worked correctly. But when it came time to get these AI models into the hands of our design partners, we simply got stuck. I spent the majority of my time trying to get our models into something we could distribute very easily. But after months of wrangling with Dockerfiles and third-party services, it became crystal clear to me: containers are the wrong unit of distribution for AI workloads.

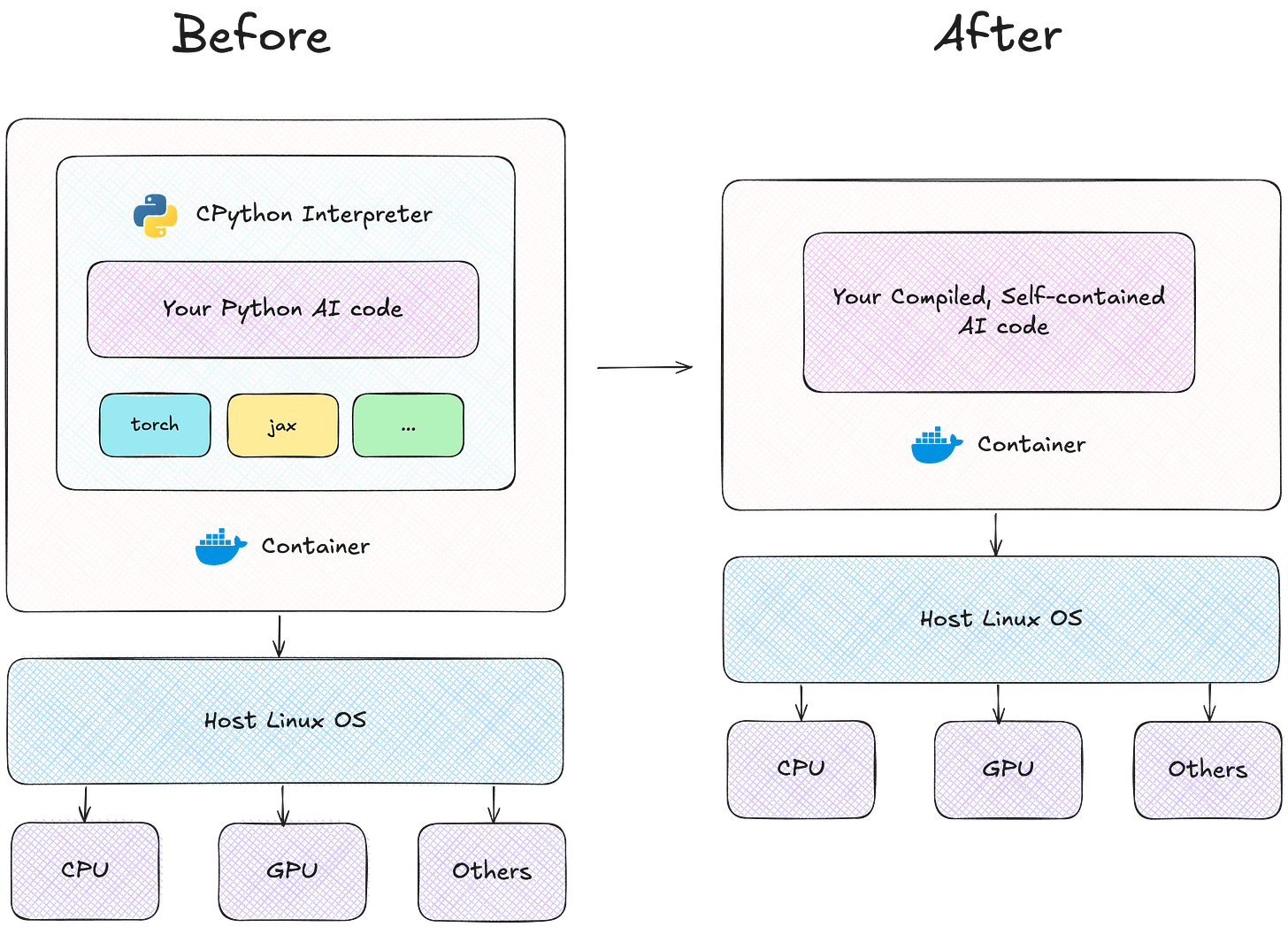

To understand why, we need to look into the container. Containers are simply self-contained Linux filesystems with runtime isolation and resource management. So when deploying our AI model as a container, we would package up the inference code, the model weights, all the Python package dependencies, the Python interpreter itself, and other required software into what was effectively a snapshot of a full Linux operating system.

But what if instead of making a self-contained operating system, we made a self-contained executable that ran only our AI model and nothing else? The benefits here would be significant: We could ship much smaller containers that started up much faster, because we wouldn’t have to include unnecessary Python packages, the Python interpreter itself, or any of the other unnecessary cruft that gets bundled into the container. But even more importantly, not only could we run these executables on our Linux servers—we could run them anywhere.

Arm64, Apple, and Unity: How It All Began

I started programming at the age of eleven, thanks to my dad who vehemently refused to buy me a PlayStation 2 out of fear that my grades would drop. Out of an extreme stubbornness, inherited from him and my mom, I had decided that if he was not going to buy me a game console, then I would simply build the games myself1. I was lucky enough to find a game engine that was intuitive, allowed developers to build once and deploy everywhere, and most importantly, was free to use: Unity Engine.

In late 2013 Apple debuted the iPhone 5S, its first device featuring the relatively new armv8-a instruction set architecture. Unlike prior devices, this was a 64-bit architecture running on ARM. With it, apps could address much more memory, and benefit from a myriad of performance gains. As such, Apple quickly mandated that all new apps be compiled for arm64.

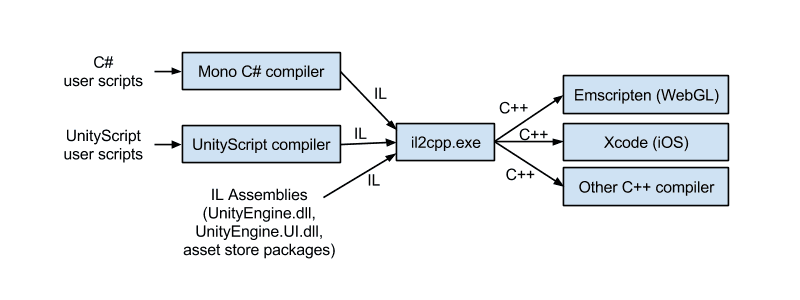

Unity, with its massive developer ecosystem, was thrown into a tailspin. To understand why, we need some context on how Unity works: Because Unity is a game engine, objects within the game can be scripted to have custom behaviors. C# was Unity’s chosen scripting language for these behaviors. But C# does not compile to object code, so it needs a virtual machine to execute at runtime (sound familiar?). Unity used Mono for this purpose, but Mono did not support arm64.

Unity embarked on a journey to build what I still consider to be its greatest engineering feat: IL2CPP. As its name implies, the IL2CPP compiler would take in Common Intermediate Language bytecode (i.e. the intermediate representation generated by the C# compiler); then emit equivalent C++ source code. Once you had C++ source code, you could compile that code to run just about anywhere: from Nvidia GPUs and WebAssembly; to Apple Silicon and everything in-between.

We set out to build the exact same, for Python.

Sketching Out a Python Compiler

At a high-level, the compiler would:

Ingest plain Python code, with no modifications.

Trace it to generate an intermediate representation (IR) graph.

Lower the IR to C++ source code.

Compile the C++ source code to run across different platforms and architectures.

Before jumping in, you might be wondering: why bother generating C++ first? Why not just go from IR to object code?

Going back to why we started on this journey, our main focus with Muna has been on compute-intensive applications, especially AI inference. If you’ve spent time in this space, you’re familiar with technologies like CUDA, MLX, TensorRT, and so on. But there are so many more frameworks, libraries, and even undocumented ISAs that applications can leverage to accelerate everything from matrix multiplication to computer vision.

We wanted to design a system that would allow us leverage as many ways to perform some computation as we might have available on given hardware. We’ll show you how we achieved this, and how this design gives us a novel, data-driven approach to performance optimization.

Building a Symbolic Tracer for Python

The first step in building our compiler is to build a symbolic tracer. The tracer’s job is to take in a Python function and emit an intermediate representation (IR) graph that fully captures control flow through the function.

Our very first prototypes were built upon the PyTorch FX symbolic tracer, introduced in PyTorch 2.0. Their symbolic tracer was built off PEP 523, a feature in CPython that allowed developers in C to override how bytecode frames are evaluated by the interpreter. I won’t go into too much detail here, as it is a marvel of engineering in its own right, but in summary PEP 523 enabled the PyTorch team to register a hook that could record every single function call as it was being evaluated by the interpreter:

Unfortunately, TorchFX had two significant drawbacks that required us to build a custom tracer. The first is that once you hook into the CPython interpreter to record your PyTorch function, you have to actually run said function. For PyTorch, this was not an issue because you could invoke your function with so-called “fake tensors” that had the right data types, shapes, and devices, but allocated no memory. Furthermore, this way of running a function in order to trace it would be perfectly inline with how their legacy serialization APIs worked (torch.jit and torch.onnx).

Since we needed the ability to compile arbitrary Python functions, of which only a tiny subset (or none) could be PyTorch, we would need a similar mechanism for having developers provide us with their inputs to use for tracing. But unlike PyTorch, we could not create a fake image, or fake string, or fake whatever. To us, this became a dead end.

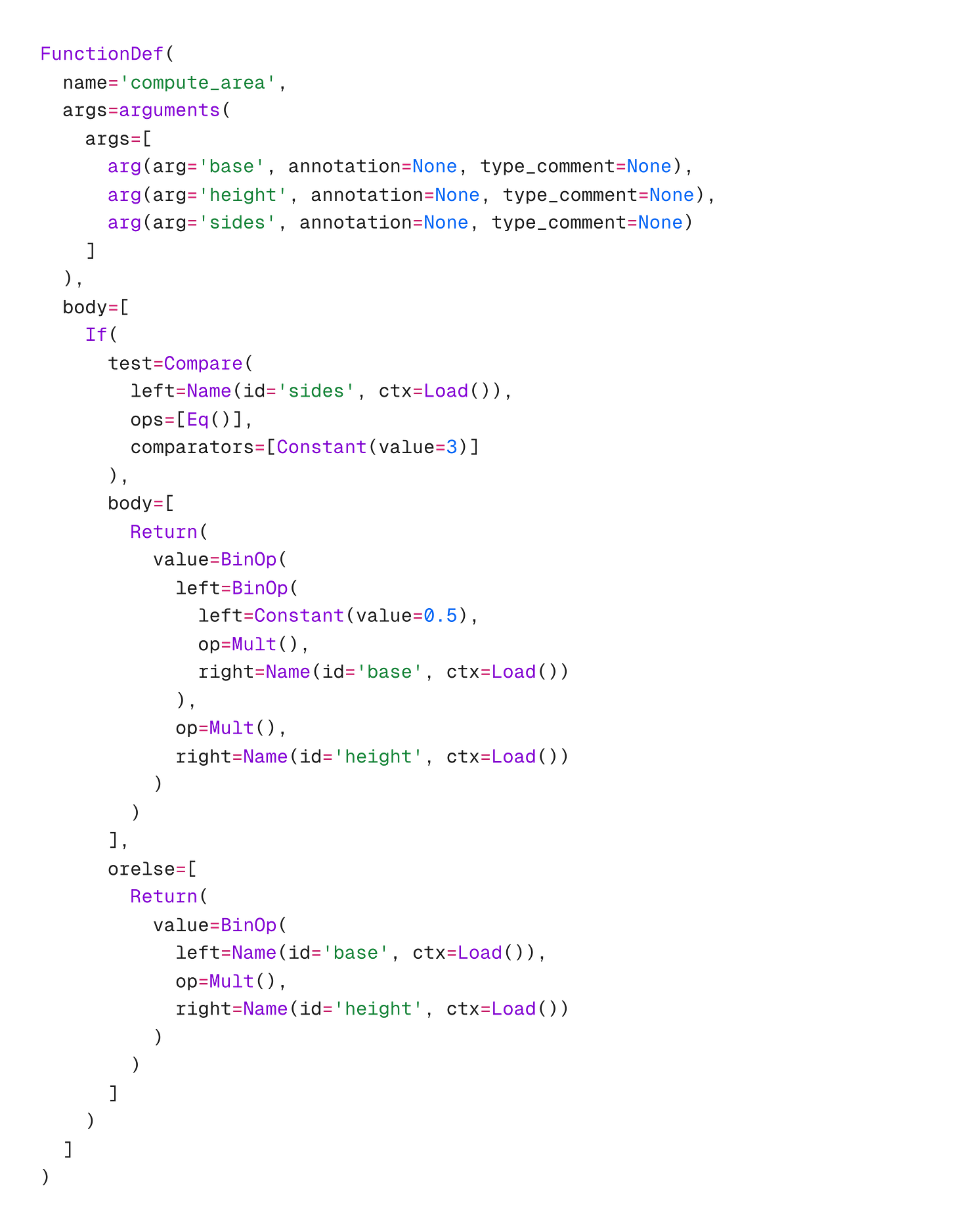

The second challenge was that even when we created fake data as inputs to the TorchFX tracer, we realized that it could only record PyTorch operations. We would have to heavily modify and extend the tracer to support tracing through arbitrary functions across hundreds and thousands of Python libraries. As such, we settled on building a tracer that would instead capture a Python function by parsing its abstract syntax tree (AST). Take an example function:

Our tracer would first extract an AST like so:

It would then step through, resolve all function calls (i.e. figure out what source library each function call belongs to), then emit a proprietary IR format. Currently, our symbolic tracer supports static analysis (via AST parsing); partial evaluation of the original Python code; live value introspection (using a sandbox), and much more. But somehow, it’s the least interesting part of our compiler pipeline.

Lowering to C++ via Type Propagation

This is where things get really interesting. Python is a dynamic language, so variables can be of any type, and those types can change easily:

C++ on the other hand, is a strongly-typed language, where variables have distinct, immutable types that must be known when the variable is declared. While bridging both of these languages might seem like an intractable problem, there’s actually a key insight we can take advantage of:

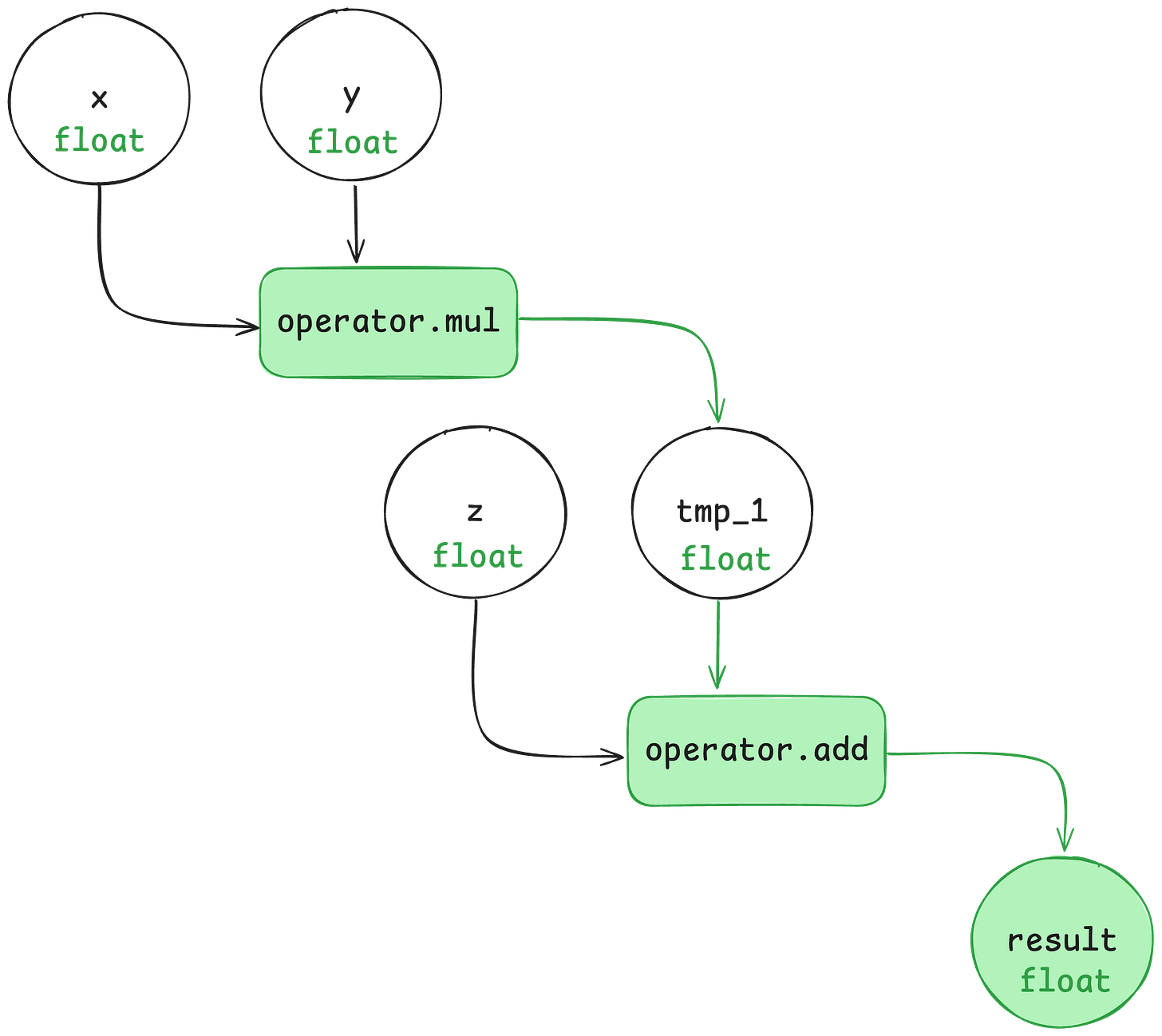

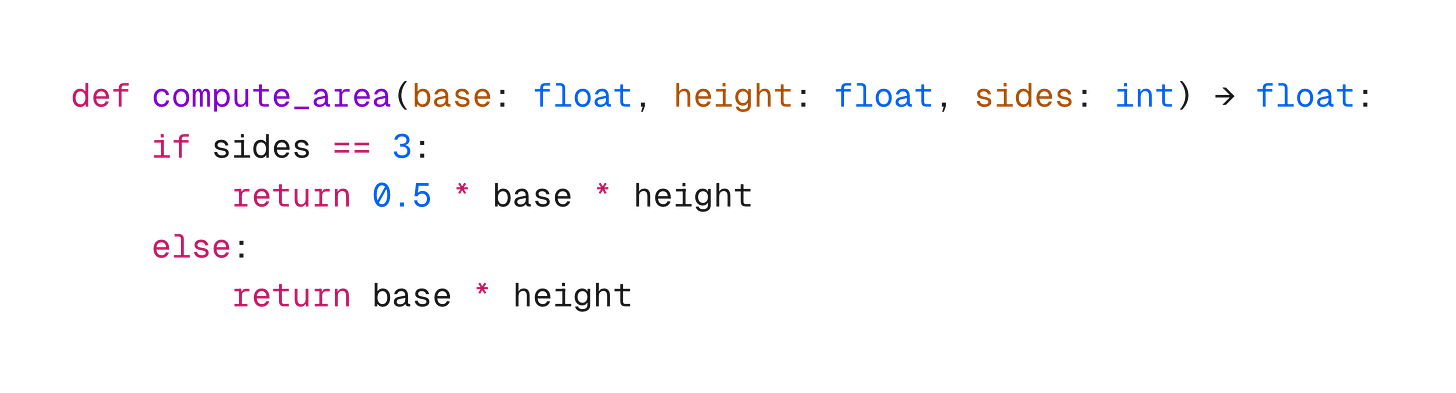

When we invoke a Python function with some given inputs, we can uniquely determine the types of all intermediate variables within that function2:

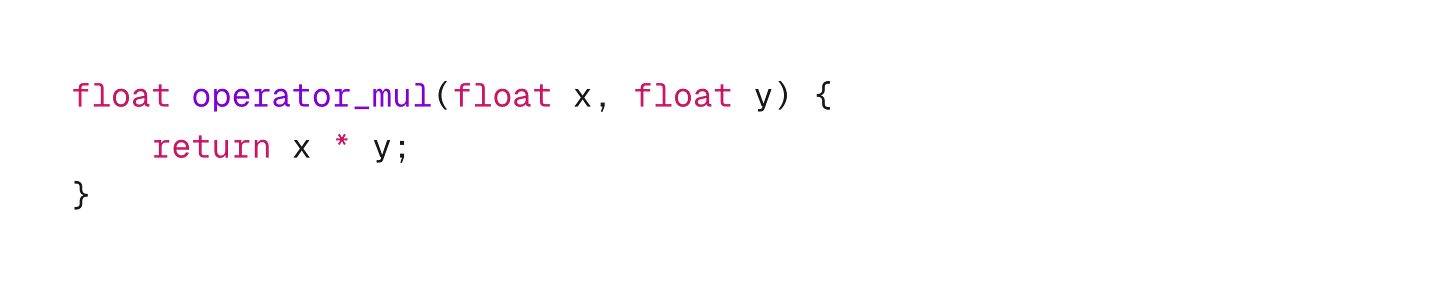

If we know that the inputs x and y are float instances, then we know that the resulting type of their multiplication (i.e., tmp_1) is uniquely determined by whatever the operator.mul function returns. But how do we define operator.mul and get its return type? That’s where C++3 comes in.

From the above, we now know that tmp_1 must be a float. We can repeat this process for the addition call (tmp_1 + z) to get the final result.

At this point, it’s worth taking a moment to reflect on what we have created thus far:

We can take a Python function and generate an intermediate representation (IR) that fully captures what it does.

We can then use parameter type information; and a C++ implementation of a Python operator (e.g.

operator.mul); to fully determine the type of the first intermediate variable in our Python function.We can repeat (2) for all subsequent intermediate variables in our Python function, until we have propagated types throughout the entire function.

Seeding the Type Propagation Process

One point worth expanding upon is how we get the initial parameter type information to kickstart the type propagation process. In the example above, how do we know that each of x, y, and z are float instances?

After prototyping with a few different approaches, we settled on PEP 484 which added support for type annotations in the Python language. Python itself completely ignores these type annotations, as they are not used at runtime4. And while they solved the problem of seeding type propagation, they came with two major drawbacks: first, they conflict with our most important design goal, because using them requires developers to modify their Python code a little5:

The code doesn’t look too different, and some argue that using type annotations makes for writing better Python code (we mandate them at Muna). The second problem was that in order to design a simple and modular interface for consuming the compiled functions, we would have to constrain the number of distinct input types that could be used by developers6. Ultimately, we decided this was a reasonable compromise with nice ergonomics.

Building a Library of C++ Operators

At this point, you might have realized a glaring issue in our design: we need to write C++ implementations for potentially tens or hundreds of thousands of Python functions across different libraries. Thankfully, this is a lot less complicated than you might think. Consider the function below:

When compiling cosecant, we see that there are function calls to sin and reciprocal. Our compiler first checks if it can trace through each function call. In the case of sin, we don’t have a function definition for it (only an import), so we cannot trace through it7. This forms a leaf node that we must implement manually in C++. We can trace through the call to reciprocal, so we do and get an IR graph for it. This can then be lowered and used at other call sites.

The key insight above is that most Python functions our compiler will encounter are composed of a smaller set of elementary functions. What accounts for the large variety of code in the wild is not the unique number of elementary functions that make them up; rather, it’s the different arrangements of these elementary functions.

Still, you could argue that there are potentially thousands of these elementary functions across different libraries that we would have to cover, and you would be 100% correct. Thankfully, we now have an amazing tool that makes this an easy problem to solve: AI-powered code generation.

Today’s LLMs are capable of writing verifiably-correct, high-performance code across a wide variety of programming languages. As such, we’ve been building infrastructure to constrain the code they generate, test the code to ensure correctness, and handle ancillary logic for things like dependency management and conditional compilation. So far, we have used AI to generate implementations of hundreds of Python functions across popular libraries like Numpy, OpenCV, and PyTorch.

Performance Optimization via Exhaustive Search

The final topic worth discussing is performance optimization. Most popular approaches here involve rolling out hand-written code (e.g. Assembly or PTX); using heterogenous accelerators (e.g. GPU, NPU); doing heuristic-based algorithm selection at runtime (e.g. convolution algo search in ArmCL and cuDNN); or some combination thereof.

From our past experience building extremely low-latency computer vision pipelines for embedded systems, we have learned a very bitter lesson8: effective performance optimization is always empirical. The latency of a given operation on some given hardware depends on so many factors that the only way to know for sure it to simply test every single approach you have. The only reason why engineering teams don’t do this is because it is impractical: you would have to rewrite your code tens or hundreds of times then test each variant…but wait!

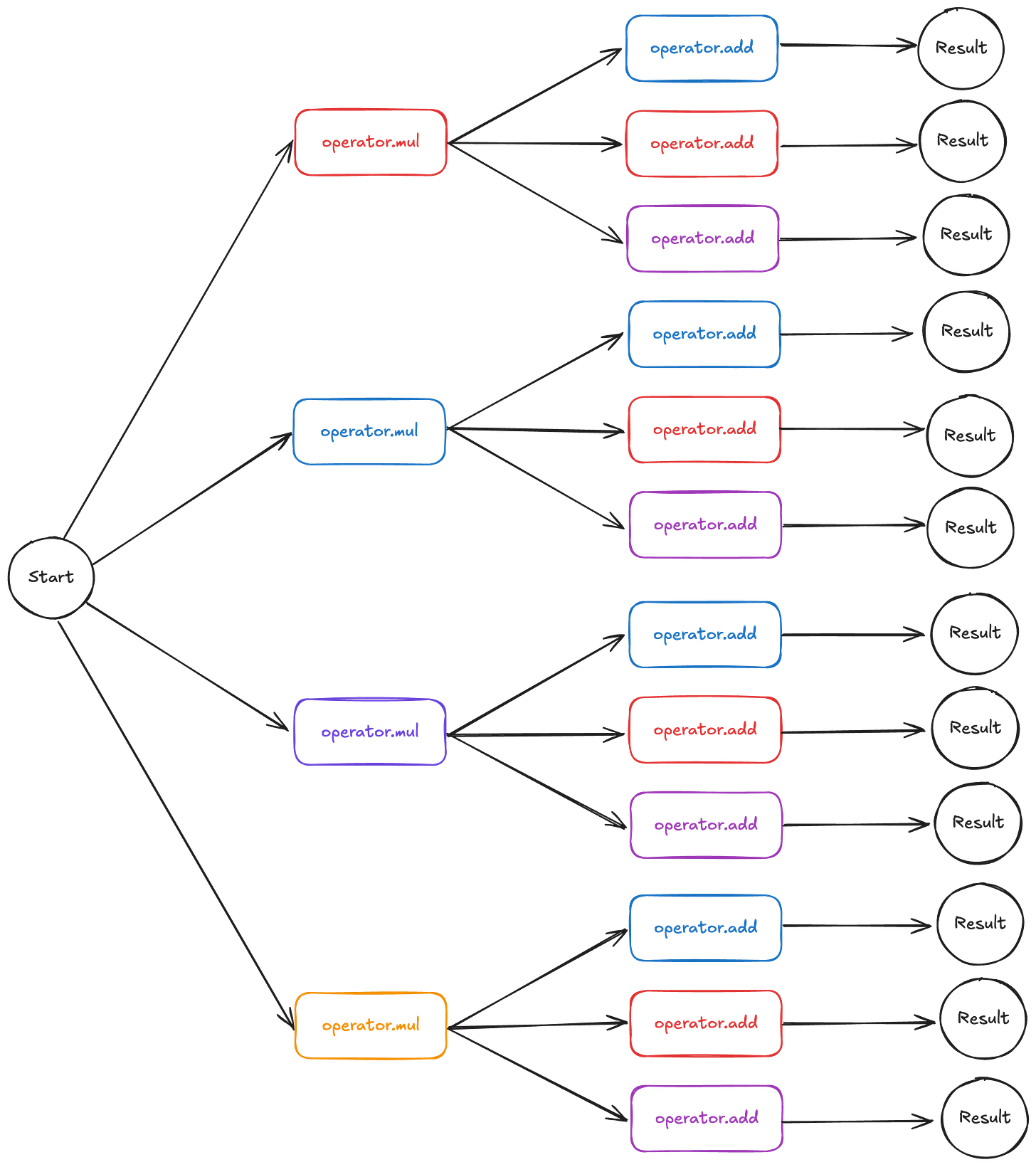

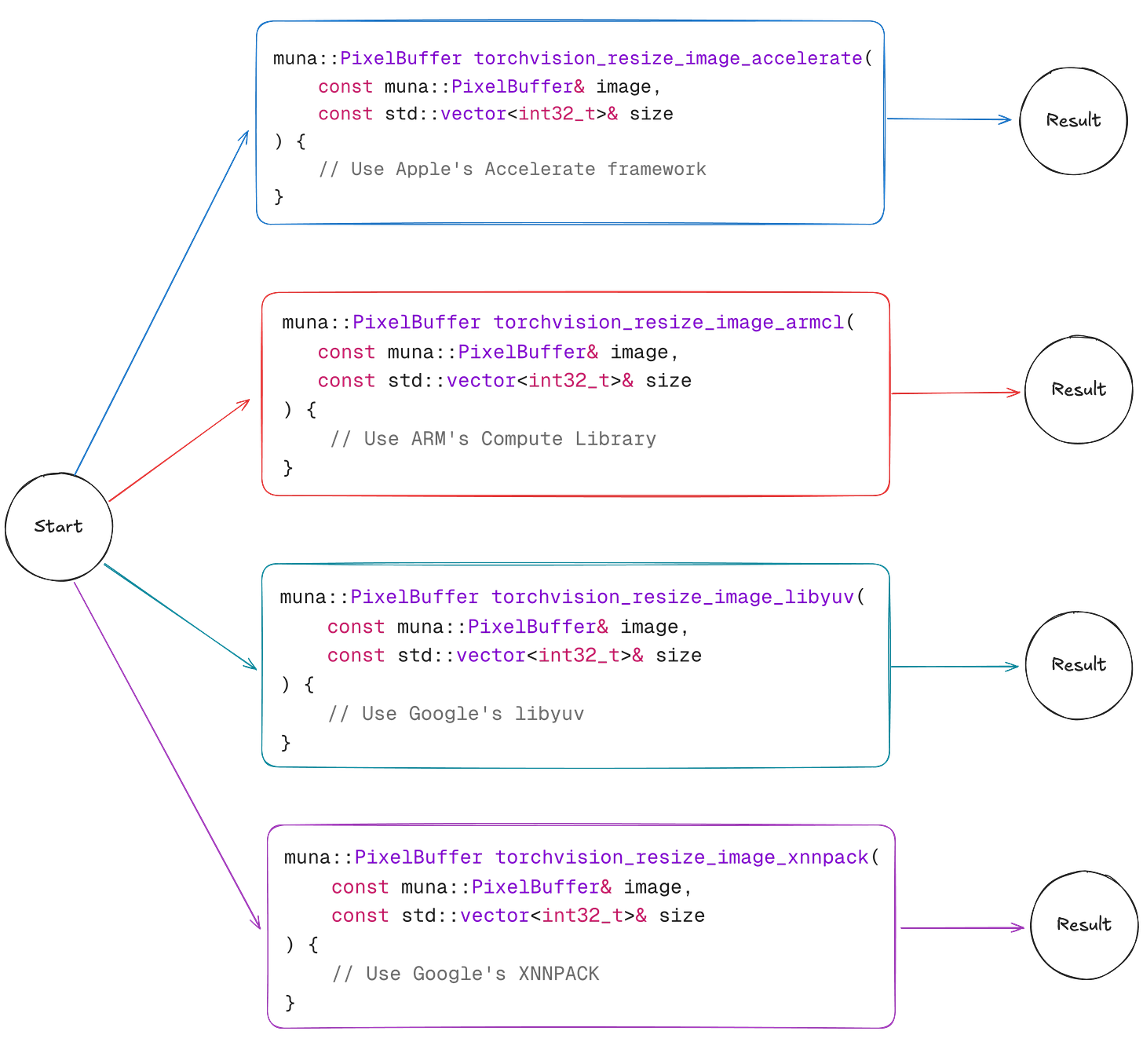

Earlier, we went over how we propagated types through a Python function with the help of a C++ operator. What I didn’t mention was that we don’t just use one C++ operator; we use as many as we can write (*ahem* generate). So instead of this:

What really happens is this:

Each path from start to result is a unique program, guaranteed to be correct with respect to the original Python function. But each C++ operator (colored rectangles) could be powered by different algorithms, libraries, and even hardware accelerators. Let’s walk through a concrete example:

The function above resizes an input image to 64x64 with bilinear resampling using the torchvision library. When compiling this function for Apple Silicon (macOS, iOS, or visionOS), we have a range of approaches and libraries to choose from, including:

The above is just a small selection, as the possibilities for implementing a bilinear resize operation on Apple Silicon are numerous (e.g. using the GPU, Neural Engine). The key here is that we can generate as many of these as possible (thanks to LLM-powered codegen), then emit compiled programs that use each one—with absolutely no limits. So in the example above, the user’s Python function would be emitted as four unique programs for Apple Silicon alone. In our real-world testing, we have seen a single Python function be emitted as almost 200 unique programs across 9 compile targets.

From here, we can easily test each compiled function to discover which one runs the fastest on given hardware. We gather fine-grained telemetry data, containing latency information for each operation, and use this data to build statistical models to predict which variant runs the fastest. There are two significant benefits in this design:

We can optimize code purely empirically. We don’t make any assumptions about which code might perform best; and we don’t need a separate performance tuning step after generating code. We simply ship out every compiled binary we have, gather telemetry data, and use this to discover which one is the fastest.

We benefit from network effects. Because the C++ operators are shared among thousands of compiled functions; and because we ship these compiled functions to hundreds of thousands of unique devices across all of our users; we have tons of data that we can use to optimize every piece of code we generate.

For our users, this will feel like their compiled Python functions running faster over time, entirely on autopilot.

Designing a User Interface for the Compiler

Now, we have to wrap up everything we’ve covered above into a user interface. Our most important guiding principle was to design something with near-zero cognitive load. Specifically, we didn’t want developers to have to learn anything new to use the compiler. We decided to go with PEP 318, decorators:

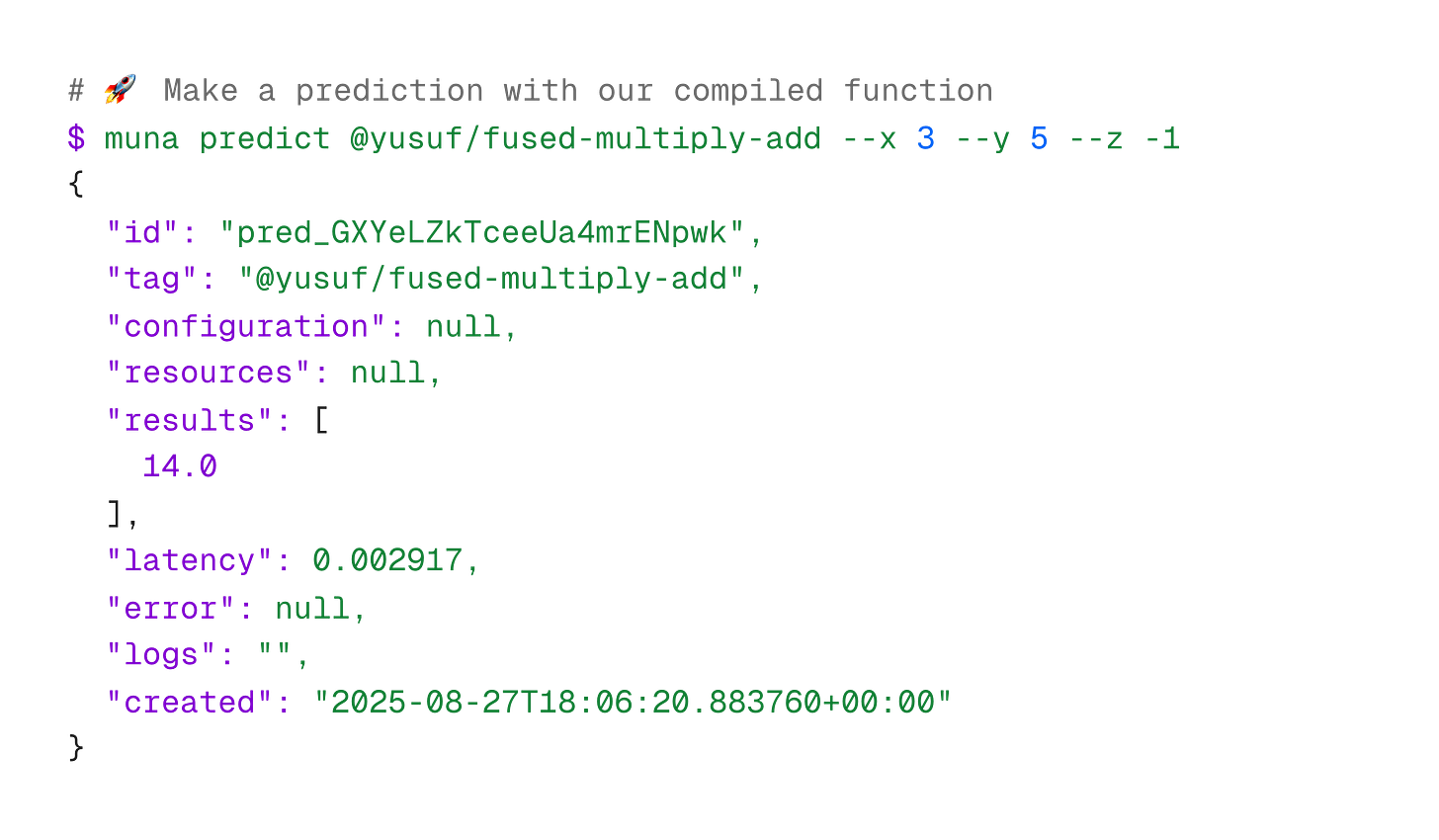

Developers could simply decorate their Python function with @compile to specify the compilation entrypoint. Then, they would compile the function9 and all its dependencies using the CLI:

We fell in love with the decorator paradigm from seeing how developers strongly preferred expressing complex infrastructure as code10. Furthermore, it was a familiar form factor within the Python ecosystem, evidenced by its use within Numba and PyTorch. With the decorator, our CLI could find the compilation entrypoint function, and use that as a springboard to crawl through all other dependency code (both first-party as provided by the developer, and third-party packages installed via pip or uv).

The @compile decorator would also serve as the primary customization point for developers compiling their function. Beyond the required tag (which uniquely identifies the function on our platform) and description, developers could provide a sandbox description to recreate their local development environment (e.g. installing Python packages, uploading files); along with metadata to assist the compiler during codegen (e.g. running PyTorch AI inference with ONNXRuntime, TensorRT, CoreML, IREE, QNN, and more).

Once compiled, anyone can run the compiled function anywhere11:

Closing Thoughts

In all candor, we still have a high level of disbelief that any of this actually works. That said, the compiler has a lot of standard Python features that are partial or missing: exceptions, lambda expressions, recursive functions; and classes. The through-line connecting these missing features is our type propagation system. While type propagation works for simple functions with unitary parameter and return types, it requires additional consideration for composite types (e.g. unions) and higher-order types (e.g. classes, lambda expressions).

The other significant item we are still figuring out is the debugging experience. The good news for us is that we guarantee that developers’ Python code will work as expected once compiled, absolving them of any responsibility to debug the code at runtime. This is similar to how developers who use Docker or other containerization technologies simply expect everything to work—almost nobody debugs their Docker image layers. The bad news is that because we enable developers run their Python code anywhere, we have to figure out how to write extremely safe code; and how to gather fine-grained, symbolicated trace data when some function raises an exception. This is further complicated by the fact that because we have to deliver the smallest and fastest compiled binaries possible, we compile generated code with full optimizations, inevitably stripping out valuable debug information.

It has not all been difficult though, especially because the evolving C++ standard has been a major boon for us. Muna would not exist without C++20, because our code generation relies extensively on std::span, concepts, and most importantly, coroutines. And we’re dying for broad C++23 support, because we use std::generator to support streaming, <stdfloat> to support float16_t and bfloat16_t, and <stacktrace> to support Python exceptions.

On a final note, if you are currently deploying embedding models or object detection models in your organization, or if you find any of this work interesting, we would love to chat with you. We’d love for more developers to use the compiler on problems and programs we haven’t yet run ourselves; and we love to meet developers who enjoy the mundane, low-level worlds of Python, C++, and everything in-between.

Rumble Racing and Sly Cooper: Not the most well-known titles on the PS2, but games that carry incredibly amounts of sentimental value from my upbringing.

One way to think about bridging Python and C++ during lowering is in how implicit template instantiation works in C++. The Python function defines a template function; and the input types are used to instantiate a concrete function therefrom.

Technically, our compiler doesn’t just compile to C++. We use C++ as the primary language for code generation, but we also emit Objective-C and Rust in some cases. Furthermore, we are actively exploring emitting Mojo.

The main exception here comprises of data validation libraries like Pydantic which use type hints to build schemas for validating and serializing data.

Only the compiler entrypoint function (i.e. the function which is decorated with @compile) requires type annotations. All other functions can be duck typed as normal.

Only the compiler entrypoint function (i.e. the function which is decorated with @compile) is subject to this constraint. All other functions can accept arbitrary input types, and return arbitrary output types.

Our @compile decorator supports providing a list of trace_modules which opt entire modules into tracing. Functions that are not provided as part of a developer’s original Python code must explicitly be opted into tracing.

The Bitter Lesson by Rich Sutton.

By default, we currently compile for Android, iOS, Linux, macOS, WebAssembly, and Windows.

We took inspiration from projects like Pulumi and Modal.

We provide client libraries for Python, JavaScript (browser and Node.js), Swift (iOS), Kotlin (Android), and Unity Engine. And our React Native client is coming soon.

I have written two compilers as projects for my CS degrees and a few small ones for utilities. This was in the late 90s. It's nice to have an update on the current state-of-the-art. The work you describe is a yeoman's gem. It's hard to believe when I spent many hours developing C++ code to run on Mac/Win/Linux. The groups I did research with now has an automatic pipeline to run typical workflows. What you describe is an order of magnitude. more. Rock on with your bad self(ves)! :-)

https://github.com/EpistasisLab/tpot

so cool to look under the hood in this way - thanks for sharing yusuf + abhinav