Performance Engineering and The Need for Speed

Why performance engineering is gaining significance and how to get started in this area

A skilled performance engineer can sometimes make a small change in software that saves enough money to pay for 10 years of salary. Companies and customers like such people. (Richard L. Sites, Google)

Last weekend a bunch of us hung out in a live session and talked about performance engineering and 1BRC (the One Billion Row Challenge). I have always been deeply interested in performance engineering techniques, and it was refreshing to see that so many of you share the same curiosity. I want to write a series of posts on performance engineering, starting with this one, where I talk about why this field is gaining importance and what it takes to master it.

Upcoming Live Session on 1BRC: If you are interested in learning about performance optimization techniques, we are doing a live session on 17th Feb. Check it out and sign up if interested. More details are in the announcement post.

The Need for Speed

First, let’s talk about why performance engineering is becoming more important in the current era of computing.

Limits of Moore’s Law

Gordon Moore, a co-founder of Intel, famously observed in 1965 that the number of components on an integrated circuit was doubling roughly every year, and in 1975 he revised the forecast to a doubling roughly every two years. This trend, known as Moore's Law, not only increased the computational power of processors but also meant that computer programs could get faster with each new hardware generation without requiring significant changes from application programmers.

You may also want to read this excellent deep dive on Moore’s Law by The Chip Letter.

However, in recent decades we have run into physical limits on this growth. Raising a processor's clock speed further leads to serious heat-dissipation problems, so although transistor counts have kept rising in line with Moore's Law, the clock speeds of individual cores have largely plateaued since the mid-2000s. Instead, the industry has shifted toward multi-core processors, which put more than one processing unit (core) on a single chip, along with other architectural enhancements that boost performance without increasing clock speed.

This means that unoptimized code will not automatically run faster on newer hardware. We need to put in the effort to get the most out of a single processor core and, if that is not enough, learn to leverage the additional cores.

Cloud Cost and Power Savings

The cloud is ubiquitous, and most companies run their code on public cloud platforms. The cloud compute bill is also among the largest expenses for such companies. Naturally, they spend a lot of time trying to reduce it by cutting down the number of compute instances, routing network traffic between their services more efficiently, and using many other tricks.

But cutting the computational resources of a business-critical service is not ideal. Sooner or later those resources have to be bumped up again because end users start noticing sluggish response times. The right way to cut cloud costs is to optimize the services so that they deliver the same response latency and throughput with fewer compute resources. This is becoming critical in the present market, where businesses are trying to be frugal to survive.

Performance Critical Systems

There is a class of software that is always expected to deliver maximum performance because it underpins the infrastructure most businesses run on: operating system kernels, web servers, database engines, game engines, compilers, interpreters, and so on. If you work or intend to work in one of these areas, performance engineering is an essential skill.

These few points are not exhaustive, but they highlight why performance engineering is critical. Now let’s talk about what it takes to do this kind of work.

The Performance Engineering Mindset

We spend years learning good software engineering practices, such as building and working with abstractions, modularity, and avoiding code duplication. Performance engineering, however, is all about trade-offs, and sometimes we need to give up some code quality to gain performance*.

*Code correctness and readability are still paramount. Performance optimization always comes at the end, once we know that the code delivers the desired functionality.

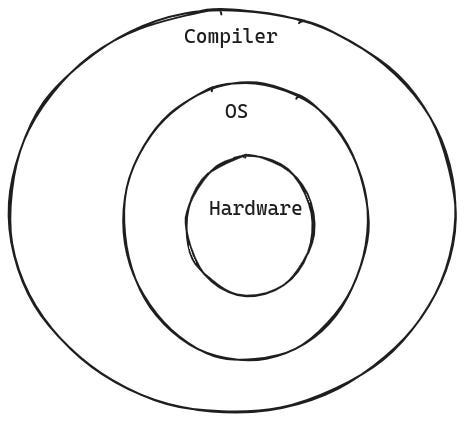

Performance engineering requires us to break open the abstraction layers and understand the performance cost we pay at each one. This involves not only the layers within our code but also the compiler, the operating system, and the CPU.

We need to build a holistic view of the entire system, from the application layer down to the hardware. This means understanding which optimizations the compiler may or may not perform, where the operating system gets involved during execution (for example through system calls and page faults), and finally how the CPU executes the code.

This is hard because few courses teach performance engineering specifically; it is an application of everything we have learned about computer architecture, compilers, programming languages, runtimes, and so on.

Do we need to be experts at reading assembly or know exactly how the hardware works? That would be extremely useful, but we can get pretty far with a conceptual understanding of these layers. There is a lot of low-hanging fruit that can easily improve the performance of our code if we can simply reason about how it is executed.

For instance, are you using a boxed integer (i.e. an integer value wrapped in an object) instead of a simple primitive integer? Every time you access a boxed integer, you have to follow a reference and load the value from memory, which gets costly when those loads miss the cache. A primitive integer value, on the other hand, once loaded, can sit in a register until you are done with it.
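C has no boxing, so the sketch below approximates it: each "boxed" integer is a separate heap allocation reached through a pointer, which is roughly how a Java `List<Integer>` holds its elements. The function names (`sum_primitive`, `box_all`, `sum_boxed`, `free_boxed`) are hypothetical, and in a tiny toy like this the allocator may place the boxes next to each other, so any measured gap will depend on data size and allocation pattern.

```c
#include <stdlib.h>

/* Sum a plain array of ints: the values are contiguous, so the hardware
   prefetcher can stream them in and the running sum stays in a register. */
long sum_primitive(const int *values, size_t n) {
    long sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += values[i];
    return sum;
}

/* "Box" each int: allocate it separately on the heap and keep only a
   pointer to it. This loosely mimics a Java List<Integer>. */
int **box_all(const int *values, size_t n) {
    int **boxes = malloc(n * sizeof *boxes);
    if (boxes == NULL)
        return NULL;
    for (size_t i = 0; i < n; i++) {
        boxes[i] = malloc(sizeof **boxes);
        if (boxes[i] == NULL) {
            /* Release what was allocated so far before giving up. */
            for (size_t j = 0; j < i; j++)
                free(boxes[j]);
            free(boxes);
            return NULL;
        }
        *boxes[i] = values[i];
    }
    return boxes;
}

/* Sum the boxed values: each element costs an extra pointer dereference,
   and the boxes may be scattered across the heap. */
long sum_boxed(int *const *boxes, size_t n) {
    long sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += *boxes[i];
    return sum;
}

void free_boxed(int **boxes, size_t n) {
    for (size_t i = 0; i < n; i++)
        free(boxes[i]);
    free(boxes);
}
```

Both sums produce the same result; the difference is only in how many memory loads each element needs and how predictable their addresses are.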

The Roadmap for Performance Engineering

So how do we go about learning the skills needed for performance engineering? As I mentioned in the previous section, it is all about learning the costs of each layer of abstraction beneath our code. We write code in a high-level language that a compiler translates to machine code; the OS then sits between our program and the hardware during execution; and finally there is the hardware itself. We need to get familiar with what happens at each of these layers.

Programming Language Internals and Runtime

Languages like C and C++ are very close to the hardware and we can have a good mental model of how a piece of code might compile and execute on the machine.

However, if we are using a higher-level language such as Java, C#, or Go, the first thing to understand is how its compiler and runtime work and how they might affect the performance of our code.

For instance:

If the language runtime has a garbage collector (GC), then how does it work and what is the cost of GC?

What kinds of optimizations is the compiler capable of, and can we look at the generated assembly to confirm that they are being applied?

What is the layout of the objects in memory?

How are objects passed around in function calls (references vs values)?

How expensive are threads and how are they scheduled and managed?

Details like these at the programming-language level are critical for judging whether we are taking full advantage of the language's features and whether there are potential bottlenecks in our code.
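To make the question of object layout concrete, here is a small C sketch. C lays struct fields out in declaration order with alignment padding between them, and Go structs follow similar alignment rules; the JVM and CLR generally choose field order themselves, so treat this as an illustration of why layout matters rather than a model of any particular runtime. The struct and function names are hypothetical.

```c
#include <stddef.h>

/* Fields ordered carelessly: padding is inserted after `tag` so that
   `value` starts at an aligned offset, and again after `flag` so that
   arrays of this struct keep `value` aligned. */
struct padded {
    char tag;
    double value;
    char flag;
};

/* Same fields, largest first: the two chars share the tail padding. */
struct reordered {
    double value;
    char tag;
    char flag;
};

/* Bytes needed for an array of n elements of each layout. Padding is
   paid per element, so it adds up quickly in large arrays. */
size_t array_bytes_padded(size_t n)    { return n * sizeof(struct padded); }
size_t array_bytes_reordered(size_t n) { return n * sizeof(struct reordered); }
```

On a typical x86-64 or AArch64 Linux build, `struct padded` occupies 24 bytes and `struct reordered` 16, so an array of a million elements carries about 8 MB of pure padding in the first layout, which also means fewer useful elements per cache line.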

Instruction Set Architecture and Assembly Language

Moving one layer down, we need some familiarity with the instruction set architecture (ISA) and assembly language of our target hardware platform.

We don’t necessarily need to be able to write working programs in assembly, but we should understand it well enough to read the assembly for the critical paths of our code that need optimizing.

This typically means understanding the general-purpose registers, common instructions and their latencies, and how function calls and system calls are made, along with their associated costs.

We usually come down to this layer after profiling our code and finding the hot paths that may be forming a bottleneck. The generated assembly may give us clues about what is costing us performance. For instance, is there a register spill happening?

Register spilling: The CPU needs values to be in registers to compute on them, but there is only a limited number of registers. If too many values are live at the same time, the compiler's register allocator runs out of registers and has to spill some of them to the stack. When this happens in a hot path, performance usually takes a hit because every spilled value costs extra loads and stores to and from memory.

Operating System and Its Role Behind the Execution of Programs

The operating system (OS) sits right between our program and the hardware. It provides crucial services to interact with the hardware and manage resources like memory and I/O. It is important to understand how the OS provides these services and their associated costs.

The OS provides these services via the system call interface, and each system call has a significant cost: the CPU has to switch from user mode into the kernel and back. Most of the time system calls are necessary and the only way to get something done, but sometimes there are alternatives that reduce how many we make, such as batching many small writes into one larger write.
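A minimal POSIX sketch of the batching idea, assuming a Unix-like system with `/dev/null`: it counts the `write(2)` calls needed to emit the same bytes one at a time versus through a 4096-byte user-space buffer. The helper names and the buffer size are assumptions for illustration; in practice stdio (`fwrite`, `printf`) already buffers output like this for you.

```c
#include <fcntl.h>
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

#define BUF_SIZE 4096  /* assumed buffer size, chosen for illustration */

/* Write all n bytes, retrying on short writes. Each write(2) call is one
   round trip into the kernel; *calls counts them. Returns 0 on success,
   -1 on error. */
static int write_all(int fd, const char *p, size_t n, size_t *calls) {
    while (n > 0) {
        ssize_t w = write(fd, p, n);
        (*calls)++;
        if (w < 0)
            return -1;
        p += w;
        n -= (size_t)w;
    }
    return 0;
}

/* Unbuffered: one system call per byte. Returns the number of write(2)
   calls made, or 0 on error. */
size_t emit_unbuffered(int fd, const char *data, size_t n) {
    size_t calls = 0;
    for (size_t i = 0; i < n; i++)
        if (write_all(fd, &data[i], 1, &calls) != 0)
            return 0;
    return calls;
}

/* Buffered: collect bytes in a user-space buffer and make one system call
   per full buffer, plus one for any remainder. */
size_t emit_buffered(int fd, const char *data, size_t n) {
    char buf[BUF_SIZE];
    size_t used = 0, calls = 0;
    for (size_t i = 0; i < n; i++) {
        buf[used++] = data[i];
        if (used == BUF_SIZE) {
            if (write_all(fd, buf, used, &calls) != 0)
                return 0;
            used = 0;
        }
    }
    if (used > 0 && write_all(fd, buf, used, &calls) != 0)
        return 0;
    return calls;
}

/* Usage helper: send n copies of 'x' to /dev/null and report how many
   system calls it took. Returns 0 on error. */
size_t count_calls_devnull(size_t n, int buffered) {
    char *data = malloc(n > 0 ? n : 1);
    if (data == NULL)
        return 0;
    memset(data, 'x', n);
    int fd = open("/dev/null", O_WRONLY);
    size_t calls = 0;
    if (fd >= 0) {
        calls = buffered ? emit_buffered(fd, data, n)
                         : emit_unbuffered(fd, data, n);
        close(fd);
    }
    free(data);
    return calls;
}
```

With 10,000 bytes, `count_calls_devnull(10000, 0)` makes 10,000 system calls while `count_calls_devnull(10000, 1)` makes 3 (two full buffers plus the remainder), for exactly the same output.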

The OS is also responsible for executing processes and threads. We should understand how the OS spawns processes and threads, what their runtime memory layout looks like, the cost of starting a thread vs a process, virtual memory and demand paging, kernel vs user mode execution, etc. For instance, in one of my recent articles I explained how Instagram engineers identified and fixed a performance issue in their production system caused by how the OS managed the virtual memory pages of its processes.
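The cost of starting a thread vs a process is easy to measure yourself. The sketch below assumes Linux or another POSIX system and uses `pthread_create`/`pthread_join` and `fork`/`waitpid`; the function names are hypothetical. Keep in mind that `fork` is only one way to start a process, that copy-on-write keeps forking a small program relatively cheap, and that the ratio you observe depends on the OS, the C library, and the size of the parent process.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <time.h>
#include <unistd.h>

/* Thread body that does nothing, so we time only creation and teardown. */
static void *noop_thread(void *arg) { return arg; }

static double now_seconds(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Create and join n idle threads; returns elapsed seconds, or -1.0 on
   failure. */
double time_threads(int n) {
    double start = now_seconds();
    for (int i = 0; i < n; i++) {
        pthread_t t;
        if (pthread_create(&t, NULL, noop_thread, NULL) != 0)
            return -1.0;
        pthread_join(t, NULL);
    }
    return now_seconds() - start;
}

/* Fork and reap n child processes that exit immediately; returns elapsed
   seconds, or -1.0 on failure. */
double time_processes(int n) {
    double start = now_seconds();
    for (int i = 0; i < n; i++) {
        pid_t pid = fork();
        if (pid < 0)
            return -1.0;
        if (pid == 0)
            _exit(0);  /* child: exit at once, skipping atexit handlers */
        if (waitpid(pid, NULL, 0) < 0)
            return -1.0;
    }
    return now_seconds() - start;
}
```

Calling both with the same `n` (say 1000) and dividing the elapsed time by `n` gives a rough per-creation cost on your machine; on older glibc versions, link with `-pthread`.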

Again, we don’t need to open the Linux kernel source code to understand these things. A high-level mental model of what happens in the OS is sufficient for the majority of performance optimization work.

Microarchitecture of the Hardware

This is the lowest level of our execution stack. The microarchitecture of the CPU is an implementation detail of the particular platform we run our code on, yet it plays a significant role in the performance of our code. It covers things like the sizes and levels of CPU caches, instruction-level parallelism, branch prediction, instruction pipelining, etc.
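Cache effects are easy to see with a two-dimensional array. This sketch, with hypothetical function names, sums the same row-major matrix in two orders; for matrices much larger than the caches, the column-order loop is typically slower because it moves to a different cache line on almost every access instead of using the rest of the line it just fetched. Optimizing compilers can sometimes interchange such loops on their own, so check the generated code before drawing conclusions.

```c
#include <stddef.h>

/* Row-major traversal: element (r, c) lives at m[r * cols + c], so
   consecutive iterations touch adjacent addresses and every fetched
   cache line is fully used. */
long sum_row_major(const int *m, size_t rows, size_t cols) {
    long sum = 0;
    for (size_t r = 0; r < rows; r++)
        for (size_t c = 0; c < cols; c++)
            sum += m[r * cols + c];
    return sum;
}

/* Column-major traversal of the same data: consecutive iterations are
   cols * sizeof(int) bytes apart, so for wide matrices nearly every
   access lands on a different cache line. */
long sum_col_major(const int *m, size_t rows, size_t cols) {
    long sum = 0;
    for (size_t c = 0; c < cols; c++)
        for (size_t r = 0; r < rows; r++)
            sum += m[r * cols + c];
    return sum;
}
```

Both functions return the same sum; only the memory access pattern differs.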

Having a conceptual understanding of how these features come into play when executing a sequence of instructions can be game-changing. For instance, our CPU might be capable of issuing and executing several instructions in parallel each cycle, but dependencies between instructions in a hot loop may force it to execute them one after another. If we can identify and fix such an issue, we may see a surprising improvement in the performance of our code.
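A common illustration of breaking a dependency chain is summing an array with several independent accumulators. This is a sketch with hypothetical function names; it uses `double` because compilers will not reassociate floating-point additions on their own without flags like `-ffast-math`, so the single-accumulator loop really is one long chain of dependent adds. Splitting the sum changes the order of additions, so for general inputs the result can differ in the last bits of rounding.

```c
#include <stddef.h>

/* One accumulator: every addition depends on the previous one, so the
   loop is limited by the latency of a floating-point add, however many
   adders the core has. */
double sum_one_chain(const double *x, size_t n) {
    double s = 0.0;
    for (size_t i = 0; i < n; i++)
        s += x[i];
    return s;
}

/* Four independent accumulators: the chains do not depend on each other,
   so an out-of-order core can keep several additions in flight at once. */
double sum_four_chains(const double *x, size_t n) {
    double s0 = 0.0, s1 = 0.0, s2 = 0.0, s3 = 0.0;
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        s0 += x[i];
        s1 += x[i + 1];
        s2 += x[i + 2];
        s3 += x[i + 3];
    }
    for (; i < n; i++)  /* leftover elements when n is not a multiple of 4 */
        s0 += x[i];
    return (s0 + s1) + (s2 + s3);
}
```

For integer-valued inputs small enough to be represented exactly, both functions return identical results, which makes them easy to compare in a benchmark.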

Profiling and Performance Analysis Tools

But we can’t go in blind when it comes to optimization. Usually there are very few hot paths in an application which actually require any optimization efforts. The first step of any kind of performance engineering work is profiling, which helps us identify these hot paths.

Almost everyone uses observability tools to monitor their production infrastructure. These provide a starting point for analyzing a performance issue in our application. However, to drill down further we need to get familiar with more specialized tools, such as perf, eBPF, ftrace, DTrace, and language-specific profilers for our application code.

We need to know how to generate a profile and read a flame graph to find hot spots in our code. Tools like eBPF and ftrace help us trace what is happening at the OS layer, e.g. which system calls are being invoked and whether anything specific is slow in the OS.

Finally, perf lets us profile the CPU and collect hardware performance-counter events, such as the number of branch mispredictions and cache hits and misses, as well as software events such as context switches. We can go even further down and do more specific analysis with it.
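As a target for perf, here is a classic branch-prediction experiment, sketched with hypothetical function names: the same data-dependent `if` runs over random and over sorted input. A small driver that calls `sum_above` repeatedly on each array can be compared under `perf stat -e branches,branch-misses ./prog`. Depending on the compiler and optimization level, the branch may be turned into a conditional move or the loop vectorized, in which case the difference disappears, which is itself something perf will show.

```c
#include <stdlib.h>

/* Sum the elements >= threshold. The `if` is a data-dependent branch:
   with random data and a threshold near the middle of the range, the
   predictor guesses wrong roughly half the time; on sorted data it is
   almost always right. */
long sum_above(const int *v, size_t n, int threshold) {
    long sum = 0;
    for (size_t i = 0; i < n; i++)
        if (v[i] >= threshold)
            sum += v[i];
    return sum;
}

/* qsort comparator for ints, written to avoid overflow. */
static int cmp_int(const void *a, const void *b) {
    int x = *(const int *)a, y = *(const int *)b;
    return (x > y) - (x < y);
}

/* Fill v with pseudo-random values in [0, 256) and sort it if asked.
   The same seed gives the same values, so sorted and unsorted arrays
   hold identical data. */
void fill_values(int *v, size_t n, int sorted, unsigned seed) {
    srand(seed);
    for (size_t i = 0; i < n; i++)
        v[i] = rand() % 256;
    if (sorted)
        qsort(v, n, sizeof *v, cmp_int);
}
```

Because both arrays contain the same values, any difference perf reports in branch misses or run time comes from the order of the data alone.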

Combined, these tools let us analyze and optimize our systems top-down, starting from the high-level language code and going down to kernel and microarchitecture-level details.

Conclusion

Performance engineering is a deep and exciting area, and a modern-day software developer needs some of these skills to deliver performant systems at minimal computational cost. These skills not only help us build high-performance solutions but also give us a new lens for looking at any piece of code. I believe they make us more competent engineers.

Building performance engineering skills requires breaking down abstraction layers and understanding what is happening at each of them. There is a wide range of topics that we need to get familiar with, but we don’t need to become an absolute expert at any of them.

Keep an eye out for the upcoming posts as we dive deeper into specific performance engineering topics. And don't forget about the live session on optimizing 1BRC – it's an opportunity to apply these concepts in real-time and see firsthand the impact of performance tweaks.

Register for the hands-on live session on performance optimization:

Live Session on Performance Optimization Using 1BRC as a Case Study
