Decoding the ACL Paper: Gzip and KNN Rival BERT in Text Classification

Breaking Down the Hype: Unpacking the Findings of the Recent ACL Conference Paper

Introduction

A new paper published at this year’s edition of the prestigious ACL conference for natural language processing (NLP) has gone viral among the researchers. The paper shows that using a combination of gzip and K-nearest neighbour (KNN) to classify text achieves performance on par with state-of-the-art models, including BERT.

At a time when much of the research work being published is centred around large language models, this innovative approach provides a refreshing perspective. The paper, although only ten pages long (in a two-column format), is not a straightforward read. I have decided to compose a series of two articles to dissect this paper. The first article of the series — this piece — aims to demystify the paper's crucial findings using layman's terms. The subsequent article will dig deep into why this approach works, what implications it holds for future research in NLP, and more detailed aspects of the study.

For more in-depth analysis to understand why compression works for text classification, check out my follow-up article on this topic: LZ77 Is All You Need?

Existing Approaches for Text Classification and Their Limitations

Text classification is one of the classical problems in the area of NLP. The goal is to classify a given piece of text into one of several predefined categories. For instance, let’s consider a dataset of newspaper articles, where each article is assigned one of the four topics as its target label: [sports, politics, entertainment, science]. The task for a model is to be able to accurately classify a piece of text into one of these four classes.

Solving any classification problem in machine learning typically involves training a supervised model — such as linear, logistic regression, support vector machines or neural networks. Supervised learning refers to a category of machine learning models that learn by training on labeled datasets. For the newspaper article dataset mentioned earlier, each article has one of the four topics as its target label.

All supervised machine learning strategies are grounded on various forms of mathematical models. These models consist of some unknown parameters which need to be learned via training. For example, linear regression is based on the following model:

Where x and y are the input sample and its output, from the training data. While a and b are the unknown parameters or weights of the model which need to be learned through the process of training.

Parametric models: Because of the presence of learnable parameters, these models are also called parametric models.

While the above is a simple example featuring only two parameters, real-world state-of-the-art models often consist of millions of parameters. Training these large models entails substantial computational time, and their operation in production environments can be costly. For example, the state-of-the-art model BERT — a deep learning-based model — has 109 million parameters. Running such deep learning models requires specialized hardware, including GPUs, leading to a steep increase in costs.

Gzip and K-Nearest Neighbours for Classification

The recent trend in NLP research has been to build larger and larger models. Although that trend has lead us to the LLM revolution, but running such large models for simple problems like text classification is an overkill. This paper proposes a simpler approach which works just as well as the state of the art models, while not requiring expensive training process, or dedicated hardware for running it.

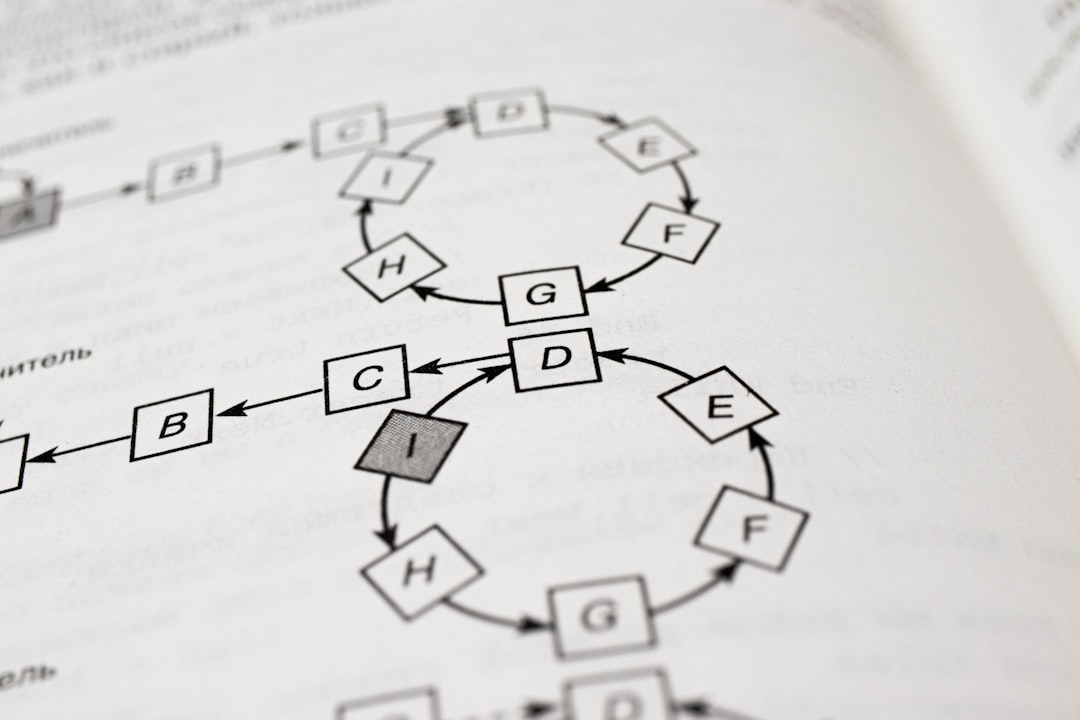

The approach proposed by the paper is very simple and can be described in following steps:

To classify a sample t:

1 compress t using gzip

2 for each sample s in the training dataset:

2.1 compress s using gzip

2.2 compute distance between gzip(s) and gzip(t)

3 find k-nearest neighbours for t based on distances computed in 2.2

4 pick the majority class as the target label from the k neighboursThe question comes down to how do you compute distance between two compressed documents? The paper introduces a distance called the Normalized Compression Distance (NCD). The following equation shows the formula for computing NCD between two texts x and y.

Crucial elements of this equation include:

C(x), the length, in bytes, ofxafter compression.C(xy), the length, in bytes, of the result whenxandyare concatenated and then compressed.

In essence, NCD gives an estimate of the amount of information shared between x and y. If x and y have a lot of common content, their concatenation will achieve higher compression, leading to a smaller value of NCD. It makes sense, therefore, to assume that texts belonging to the same class share more common characteristics than those from different classes.

Compression Algorithms, Information Theory and NCD

The concept of NCD should be easy for those familiar with how compression algorithms and information work. However, for the benefit of those new to the subject, let's break it down.

The purpose of compression algorithms is to encode a piece of data into as few bits as possible while ensuring that a decoder can rebuild the original data.

These algorithms come under two categories: lossless and lossy. As the names suggest, lossless algorithms reconstruct the data without any loss of original information, while lossy algorithms may lose some information during the decoding process. Text compression typically employs lossless compression, while media such as images, audio, and video use lossy compression techniques.

Information

I mentioned the term “information” when talking about lossless and lossy compression, what do we mean by information? Let’s understand with the help of an example.

Imagine a biased coin which gives heads 98% of the times when tossed. If I toss it 100 times and transmit the result over the network, by encoding each head as 0 and each tail as 1, I would need 100 bits. Since in this message, 0’s have a probability of 98%, they don’t convey much information, where as the 1’s are a rarity and thus provide much more information about the outcome of the experiment. We could simply omit 0’s from transmission and just send the 1’s, thus achieving 98% compression. And, this is the essence of compression, to efficiently encode information present in the data.

The field of data compression is built on the back of information theory which gives solid mathematical underpinning for describing information and entropy in the data. There’s not enough space and time to go deeper than this in this article.

Going back to NCD

Now that we have a better understanding of compression, let’s try to understand how NCD helps us do text classification.

Compressing a piece of text is fundamentally a process of efficiently encoding the information it contains.

If two pieces of text,

xandy, are of the same class, they presumably share many common words, terminologies, phrases, and patterns. Hence, their joint document,xy, should possess a high amount of information.Since

xyis information-rich, compressing it should yield a shorter output, which in turn corresponds to a smaller NCD betweenxandy.

In simple terms, NCD serves as a measure of the information distance between two texts,

xandy. Ifxandyshare numerous common elements, their shared information is significant, leading to a smaller NCD.

Significance of Gzip + KNN Classifier

There are a few reasons why this paper is significant. Let’s discuss them.

Firstly, the gzip + KNN approach is lightweight and economical, especially when compared to cumbersome deep learning models.

Unlike state-of-the-art models that necessitate training, this model is non-parametric — meaning it does not contain parameters that need to be learned, which significantly reduces costs.

Parametric models usually need training on specific datasets and fare well on data similar to those datasets. However, they may struggle with out-of-distribution data — data significantly different from the datasets on which the model was trained. Compression algorithms do not suffer from this shortcoming as they are agnostic to the dataset, performing consistently on out-of-distribution data. Notably, the paper demonstrates that the gzip + KNN-based approach outperforms all other models on out-of-distribution datasets (as illustrated in the table below).

Limitations of Gzip + KNN Classiier

It’s not all fun and glory for this approach either. There are a few limitations to this approach as well.

KNN can be slow when handling large datasets. This approach requires KNN computations for each new sample for prediction — and although KNN doesn't need specialized hardware, it can be slow when the datasets are hefty.

For executing KNN, we must keep the entire data in memory, which can consume considerable resources for voluminous datasets. Conversely, if we load data from the disc as needed, it might exacerbate the algorithm's overall slowness.

Some Potential Issues with the Paper

As the paper went viral, it has also gone through massive amounts of scrutiny from the community. In particular, Ken Schutte, found a bug in the paper’s implementation of KNN, which he described in his blog. He found that the paper reported the accuracy for top-2 results rather than implementing KNN with k=2 (as stated in the paper). Upon conducting experiments for k=2, he discovered that the classifier's accuracy slightly declined – which he only indicated for four datasets. Strikingly, it went from the best performing model on KirundiNews to the least effective one.

In essence, it's advisable to take the paper’s reported figures with a grain of salt, particularly as they cannot be precisely reproduced as described. Nonetheless, this approach continues to deliver unexpectedly well.

Wrapping it Up

The approach of this paper to go towards simpler techniques is a breath of fresh air, when looking at the flood of papers on LLMs and large scale models. Even though few questions have been raised on the numbers reported by them, let’s hope more research focus goes towards such simple and out of the box approaches.

One question that remains unanswered is why this technique performs so impressively well. The paper does not spend any time in trying to explain that. My goal with this article was to explain the findings of this paper in simpler language and set the stage. In my next post I will be collaborating with Alejandro Piad Morffis and dig deeper — we will cover why and how the gzip based classifier works, and the scientific implications of this paper. Stay tuned!

Hey,

> Firstly, the gzip + KNN approach is lightweight and economical, especially when compared to cumbersome deep learning models.

as you mention later, it is not really economical given it requires a full KNN computation at each inference. In effect, this requires a lot of cpu and a lot of memory. I'm trying to reproduce the results but it seems even reproducing AG_NEWS takes around 24 hours.

> Unlike state-of-the-art models that necessitate training, this model is non-parametric — meaning it does not contain parameters that need to be learned, which significantly reduces costs.

yes, but this misses the idea that some compression algorithms do build a kind of ~parametric model~ when they build a compression dictionnary. The dictionnary is a form of learning and could be re-used at inference.

I tried to build a demo of such approach here:

https://github.com/cyrilou242/ftcc

it uses zstd to build compression dictionnaries.

Overall training + inference is multiple order of magnitudes faster than the gzip approach, and performance seems to be similar.

I'd be curious to have your feedback on this.

I wrote a follow-up article on this which dissects various compression algorithms to analyze what exactly is happening during compression leading to unexpected performance in text classification: https://codeconfessions.substack.com/p/lz77-is-all-you-need