A Tutorial Guide to Using The Function Call Feature of OpenAI's ChatGPT API

Learn How to Use OpenAI's ChatGPT API's Function Call Feature to Build Powerful ChatGPT Plugins

A few days ago, OpenAI announced some major enhancements to the GPT-4 and GPT-3.5 APIs. These included significant cost reductions and the release of a 16k context window version of the GPT-3.5 model. But the biggest news of all was the introduction of the function call feature to the chat completions endpoint.

The function call feature of the ChatGPT API allows users to pass a list of functions that the model can call if it needs help to perform its task. These are not actual functions, but rather specifications of the function call signature. By passing a list of functions to the LLM, we give it the option to use these functions to answer the question it has been asked. It can do this by generating a function call, which is simply a JSON containing the name of the function to be called and the value of the function's arguments. Essentially, this feature allows us to get a JSON formatted output from ChatGPT. This has been a major pain point for many users, as the LLMs do not always follow the prompt to give a JSON response, resulting in all kinds of messy situations in applications.

The whole Internet is abuzz about this new feature. In this article, I will take you through a tutorial showing how the function call feature can be used to implement a ChatGPT-like plugin system. We will build a chat application in Flask and incrementally design and implement a plugin system in it to resemble the ChatGPT plugin system. By the end of the article we will have a fully working ChatGPT-like application with support for a web browsing plugin and a Python code interpreter plugin. So let’s get started!

Update: There is a follow-up article to this one, in which I discuss some of the gotchas you may come across when using function calls in your apps and how to deal with them.

Introduction to Function Calling in GPT-4 and GPT-3.5 APIs

Let’s first take a quick look at the basics of calling the chat completions API before we look at function calling. The chat completions API is used to call the ChatGPT models for completing a user prompt. The API takes two required parameters: model and messages.

model: This specifies the model that should be used. Currently, we have the option of using either the GPT-4 or the GPT-3.5-Turbo models. For the full and current list of models, check out OpenAI’s models documentation.

messages: This is a list of messages that represent the chat conversation history between the user and the ChatGPT model. Each message in the list is a JSON object consisting of a role and the message content. The role can take one of three possible values: system, user, and assistant. The system role is used to set the model behavior and its personality. The user role usually represents the message entered by the user, and the assistant role is used to represent the messages generated by the LLM itself.

Here is an example, taken from the OpenAI documentation:

import openai

openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Who won the world series in 2020?"},
        {"role": "assistant", "content": "The Los Angeles Dodgers won the World Series in 2020."},
        {"role": "user", "content": "Where was it played?"}
    ]
)

The functions parameter

The new function calling feature introduces another parameter to this API, called functions. This parameter takes a list of functions. Each function has 3 fields: name, description, and parameters. The name and description fields describe the name of the function and its functionality respectively. The parameters field describes the parameters that the function takes. In order for the ChatGPT API call to be able to generate an accurate function call, these parameters need to be described using the JSON schema specification. Following is an example function specification for a function called square.

{
    "name": "square",
    "description": "square the given number",
    "parameters": {
        "type": "object",
        "properties": {
            "x": {
                "type": "integer",
                "description": "The number to be squared"
            }
        }
    }
}

If we send a prompt to the ChatGPT API, such as “Compute the square of 214321223”, it might return a response which could look like this:

{
    'index': 0,
    'message': {
        'role': 'assistant',
        'content': None,
        'function_call': {
            'name': 'square',
            'arguments': '{\n "x": 214321223}'
        }
    },
    'finish_reason': 'function_call'
}

When the ChatGPT API generates a function call in its response, it adds a new field function_call which contains the name of the function that the model wants to call and the values of the arguments for that function. We can easily read the value of the function_call field in the response and parse it as JSON.

If the ChatGPT model decides not to make a function call, then the function_call field in the response is not set. Instead, you will find the model’s reply in the content field. By checking whether the function_call field is set in the response, we can tell when the ChatGPT model is making a function call.
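As a rough illustration of this check, the sketch below inspects a response message and decodes the arguments. The helper name parse_function_call is made up for this example, and the sample message simply mirrors the response shown above.

```python
import json
from typing import Optional, Tuple


def parse_function_call(message: dict) -> Optional[Tuple[str, dict]]:
    """Return (function name, decoded arguments), or None for a plain reply.

    parse_function_call is a hypothetical helper, not part of the OpenAI SDK.
    """
    function_call = message.get("function_call")
    if not function_call:
        return None
    # The arguments field is a JSON-encoded string, so it has to be decoded.
    arguments = json.loads(function_call["arguments"])
    return function_call["name"], arguments


sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "square", "arguments": '{\n "x": 214321223}'},
}
print(parse_function_call(sample_message))
print(parse_function_call({"role": "assistant", "content": "Hello!"}))
```

Since the model writes the arguments string itself, json.loads can fail on malformed output, so a real application would probably wrap the call in a try/except.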

The function_call API parameter

There is another optional parameter which has been introduced as part of function calling. This parameter is called function_call and it can take 3 possible values:

none: If we don’t want the ChatGPT API to generate a function call, we can pass function_call with the value none. This is the default if we don’t pass any functions to the API.

auto: This allows the ChatGPT API to decide whether to generate a function call or not. If multiple functions have been passed to it, it can pick the most suitable one. This is the default when functions are passed to the API.

function name: We can also pass the name of a specific function, e.g. {"name": "square"}, if we want the model to generate a call to that function.

Essentially it is a hint to the ChatGPT API, whether to generate a function call or not.
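To make the three options concrete, here is a minimal sketch that assembles the request body for each case. The helper build_chat_payload and its argument names are my own invention for illustration; only the functions and function_call keys come from the API described above.

```python
from typing import Dict, List, Optional, Union

FunctionCallOption = Union[str, Dict[str, str]]


def build_chat_payload(
    messages: List[Dict],
    functions: Optional[List[Dict]] = None,
    function_call: Optional[FunctionCallOption] = None,
    model: str = "gpt-3.5-turbo-0613",
) -> Dict:
    """Assemble the JSON body for the chat completions endpoint.

    build_chat_payload is a hypothetical helper written for this example.
    """
    payload: Dict = {"model": model, "messages": messages}
    if functions:
        payload["functions"] = functions
        # Omitting function_call leaves the API default ("auto") in place.
        if function_call is not None:
            payload["function_call"] = function_call
    return payload


square_spec = {
    "name": "square",
    "description": "square the given number",
    "parameters": {
        "type": "object",
        "properties": {
            "x": {"type": "integer", "description": "The number to be squared"}
        },
    },
}
messages = [{"role": "user", "content": "Compute the square of 214321223"}]

auto_payload = build_chat_payload(messages, [square_spec])
forced_payload = build_chat_payload(messages, [square_spec], {"name": "square"})
disabled_payload = build_chat_payload(messages, [square_spec], "none")
```

The function_call key is only added when functions are present, which matches the defaults described in the list above.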

If you are looking for a more detailed example of using function calls, I suggest checking out the OpenAI documentation for function calls. My focus in this article is to show how to leverage the function calling feature for more sophisticated use cases, such as building a ChatGPT-like plugin framework.

Building a ChatGPT Plugin Framework Using the Function Calls

Utilizing the function call parameter to generate JSON formatted output from ChatGPT API calls is just the most basic application of this new feature. However, it opens up possibilities for much more sophisticated applications. By passing multiple functions to the ChatGPT API call, the model can selectively invoke any of those functions as needed to accomplish its goal. This allows for a non-linear flow involving the user, LLM, and the functions, where LLM can navigate through a series of function calls, interact with the user through questions, and return to function calls to arrive at a final answer.

For instance, the following illustration demonstrates how this process would function. Assuming web search and HTML scraping are functions passed to ChatGPT API, it may choose to utilize them to answer user queries that fall outside its training data.

In a way, this mirrors the functioning of ChatGPT plugins. Depending on the assigned task and enabled plugins, ChatGPT selects the most suitable tool and executes the necessary functions behind the scenes to obtain the answer. Let's develop a simple proof of concept to demonstrate this process. We will accomplish this by building a chat application using Flask in Python and implementing a plugins framework within it.

All the code for the following part of the article is available on GitHub: https://github.com/abhinav-upadhyay/chatgpt_plugins

Setting up Requirements and Virtual Environment

Let's quickly set up a virtual environment and install the dependencies needed for this project. Open a terminal and execute the following commands:

mkdir chatgpt_plugins # create a directory for the project
cd chatgpt_plugins
python -m venv venv # create a virtual environment
source venv/bin/activate # activate the virtual environment
pip install openai --upgrade
pip install flask requests python-dotenv

Here, we have created a directory for our project, set up a virtual environment, and installed the necessary dependencies.

Setting Up OpenAI API Key

If you haven't already, you need to obtain an API key from OpenAI in order to make API calls. To do this, go to the OpenAI accounts page and click on the “Create new secret key” button to create a new key. Once the key is created, copy and save it somewhere safe.

After creating the key, create an environment variable called OPEN_AI_KEY and assign the key as its value. It is recommended to create a .env file in your project and declare the environment variable there.

OPEN_AI_KEY="<your api key here>"

Be extremely cautious in managing the key to avoid any leaks, as misuse can result in significant charges.
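The code later in this article reads the key from openai.api_key, so the value of OPEN_AI_KEY presumably has to be assigned to it somewhere at startup; that step is not shown here. A minimal sketch of how it might look, assuming a helper named read_api_key (hypothetical) that fails early when the variable is missing:

```python
import os
from typing import Mapping


def read_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the OpenAI key from the environment, or fail with a clear error.

    read_api_key is a hypothetical helper; OPEN_AI_KEY is the variable name
    chosen in this article.
    """
    key = env.get("OPEN_AI_KEY", "").strip()
    if not key:
        raise RuntimeError("OPEN_AI_KEY is not set; add it to your .env file")
    return key


# Assumption: something like this runs once at startup, after load_dotenv():
#     openai.api_key = read_api_key()
print(read_api_key({"OPEN_AI_KEY": "sk-example"}))
```

Failing at startup is a design choice: a missing key then shows up as one clear error instead of a failed request on the first chat message.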

Creating the Flask Application Structure

Next, let's create the structure for our Flask application. Assuming we are inside the project's directory (chatgpt_plugins), execute the following commands:

mkdir -p app/templates
touch app/__init__.py

The app directory will contain our application's code, and the templates directory will store the HTML templates for the application's user interface.

Creating the Chat UI

I am not great when it comes to creating UIs, and as I wanted to get this article out as quickly as possible, I took ChatGPT’s help to create something simple to get things going. Following is the UI of the chat interface, which we will put in app/templates/chat.html.

<!DOCTYPE html>

<html>

<head>

<title>Chat App</title>

<link

rel="stylesheet"

href="https://cdnjs.cloudflare.com/ajax/libs/bootstrap/5.3.0/css/bootstrap.min.css"

/>

<style>

.chat-container {

max-width: 500px;

margin: 0 auto;

padding: 20px;

}

.message-list {

list-style-type: none;

padding: 0;

}

.message-list li {

margin-bottom: 10px;

}

.message-list .sender-name {

font-weight: bold;

margin-bottom: 5px;

}

.message-list .message-content {

background-color: #f6f6f6;

padding: 10px;

border-radius: 8px;

}

.input-box {

display: flex;

margin-top: 20px;

}

.input-box input[type="text"] {

flex: 1;

padding: 10px;

border: 1px solid #ccc;

border-radius: 4px;

margin-right: 10px;

}

.input-box input[type="submit"] {

background-color: #007bff;

color: #fff;

border: none;

padding: 10px 15px;

border-radius: 4px;

cursor: pointer;

}

</style>

</head>

<body>

<div class="chat-container">

<h2>Chat App</h2>

<ul class="message-list" id="messageList"></ul>

<div class="input-box">

<input type="text" id="messageInput" placeholder="Type your message" />

<input type="submit" value="Send" id="sendButton" />

</div>

</div>

<script>

const conversationData = {{ conversation|tojson}};

console.log(conversationData)

const messageList = document.getElementById("messageList");

const messageInput = document.getElementById("messageInput");

const sendButton = document.getElementById("sendButton");

function translateRole(role) {

if (role == "user") {

return "You";

}

return "ChatGPT";

}

function renderMessage(sender, message) {

const listItem = document.createElement('li');

const senderName = document.createElement('div');

senderName.className = 'sender-name';

senderName.innerText = sender;

const messageContent = document.createElement('div');

messageContent.className = 'message-content';

messageContent.innerText = message;

listItem.appendChild(senderName);

listItem.appendChild(messageContent);

messageList.appendChild(listItem);

}

// Render initial conversation data

function renderConversation() {

conversationData.forEach((item) => {

const listItem = document.createElement("li");

const senderName = document.createElement("div");

senderName.className = "sender-name";

senderName.innerText = translateRole(item.role);

const messageContent = document.createElement("div");

messageContent.className = "message-content";

messageContent.innerText = item.content;

listItem.appendChild(senderName);

listItem.appendChild(messageContent);

messageList.appendChild(listItem);

});

}

// Handle message submission

function submitMessage() {

const message = messageInput.value.trim();

if (message !== '') {

renderMessage('You', message);

messageInput.value = '';

// Simulate backend API call

fetch('/chat', {

method: 'POST',

headers: {

'Content-Type': 'application/json'

},

body: JSON.stringify({ message })

})

.then(response => response.json())

.then(data => {

const responseMessage = data.message;

renderMessage('ChatGPT', responseMessage);

})

.catch(error => {

console.error('Error:', error);

});

}

}

// Bind event listeners

sendButton.addEventListener("click", submitMessage);

messageInput.addEventListener("keydown", (event) => {

if (event.keyCode === 13) {

event.preventDefault();

submitMessage();

}

});

// Render initial conversation

renderConversation();

</script>

</body>

</html>

Creating the Chat Module

Before we can build the plugin framework around it, let's start by building the basic chat functionality. We will place all the code related to implementing the chat functionality in the chat module, which will be located inside the chat package. Let's proceed with creating these components:

mkdir app/chat
touch app/chat/__init__.py
touch app/chat/chat.py

Maintaining the Conversation

In the chat module, we will create a class to maintain the conversation between the user and ChatGPT.

import openai
import requests
import json
from typing import List, Dict
import uuid


class Conversation:
    """
    This class represents a conversation with the ChatGPT model.
    It stores the conversation history in the form of a list of
    messages.
    """

    def __init__(self):
        self.conversation_history: List[Dict] = []

    def add_message(self, role, content):
        message = {"role": role, "content": content}
        self.conversation_history.append(message)

Each message in a conversation consists of the role and the content of the message, as expected by the ChatGPT API.

Creating a Chat Session

Next, we will create an abstraction to represent a chat session. This is nothing very complicated.

GPT_MODEL = "gpt-3.5-turbo-0613"

SYSTEM_PROMPT = """
You are a helpful AI assistant. You answer the user's queries.
NEVER make up an answer.
If you don't know the answer,
just respond with "I don't know".
"""


class ChatSession:
    """
    Represents a chat session.
    Each session has a unique id to associate it with the user.
    It holds the conversation history
    and provides functionality to get new response from ChatGPT
    for user query.
    """

    def __init__(self):
        self.session_id = str(uuid.uuid4())
        self.conversation = Conversation()
        self.conversation.add_message("system", SYSTEM_PROMPT)

    def get_messages(self) -> List[Dict]:
        """
        Return the list of messages from the current conversation
        """
        # Exclude the SYSTEM_PROMPT when returning the history
        if len(self.conversation.conversation_history) == 1:
            return []
        return self.conversation.conversation_history[1:]

    def get_chatgpt_response(self, user_message: str) -> str:
        """
        For the given user_message,
        get the response from ChatGPT
        """
        self.conversation.add_message("user", user_message)
        try:
            chatgpt_response = self._chat_completion_request(
                self.conversation.conversation_history
            )
            chatgpt_message = chatgpt_response.get("content")
            self.conversation.add_message("assistant", chatgpt_message)
            return chatgpt_message
        except Exception as e:
            print(e)
            return "something went wrong"

    def _chat_completion_request(self, messages: List[Dict]):
        headers = {
            "Content-Type": "application/json",
            "Authorization": "Bearer " + openai.api_key,
        }
        json_data = {"model": GPT_MODEL,
                     "messages": messages,
                     "temperature": 0.7}
        try:
            response = requests.post(
                "https://api.openai.com/v1/chat/completions",
                headers=headers,
                json=json_data,
            )
            return response.json()["choices"][0]["message"]
        except Exception as e:
            print("Unable to generate ChatCompletion response")
            print(f"Exception: {e}")
            # Re-raise instead of returning the exception object, so the
            # caller's except block handles the failure explicitly.
            raise

The ChatSession class consists of two fields:

session_id: This is a unique ID used to identify a session between a user and ChatGPT. It allows us to associate a unique ChatSession instance with each user, ensuring that their messages are not mixed up.

conversation: This is the conversation history for this user.

The get_chatgpt_response method is an important part of this class. It serves as the primary interface for taking a user message and obtaining a response from ChatGPT through an API call. It also adds both the user's message and ChatGPT's response to the conversation history, ensuring a smooth continuation of the conversation.

It is important to note that the handling of context window limits and exclusion of older messages as the limit is reached is not implemented in this method.
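For readers who want a starting point for that missing piece, here is a minimal sketch of one possible approach: keep the system prompt and only the most recent messages. The helper trim_history is hypothetical, it assumes the first message is always the system prompt, and counting messages is only a crude stand-in for counting tokens.

```python
from typing import Dict, List


def trim_history(messages: List[Dict], max_messages: int) -> List[Dict]:
    """Keep the system prompt plus the most recent max_messages messages.

    trim_history is a hypothetical helper; it assumes messages[0] is the
    system prompt, as in the ChatSession class above.
    """
    if not messages:
        return []
    system, rest = messages[:1], messages[1:]
    if max_messages <= 0:
        return system
    return system + rest[-max_messages:]


history = [
    {"role": "system", "content": "You are a helpful AI assistant."},
    {"role": "user", "content": "first question"},
    {"role": "assistant", "content": "first answer"},
    {"role": "user", "content": "second question"},
    {"role": "assistant", "content": "second answer"},
]
trimmed = trim_history(history, max_messages=2)
print([m["content"] for m in trimmed])
```

A token-based limit would be more accurate, since a single long message can exceed the context window on its own.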

Wiring Up The UI and Chat Backend

Now we are ready to connect the UI with the backend. To do this, we will create `routes.py` in the app directory, where we will define all the endpoints for our chat application.

Firstly, we will define the endpoint for the root of the application.

import os
from typing import Dict

from dotenv import load_dotenv
from flask import Flask, render_template, request, session, jsonify

from .chat.chat import ChatSession

load_dotenv()

app = Flask(__name__)
# Flask requires a secret key to use sessions.
app.secret_key = os.getenv("CHAT_APP_SECRET_KEY")

chat_sessions: Dict[str, ChatSession] = {}


@app.route("/")
def index():
    chat_session = _get_user_session()
    return render_template("chat.html", conversation=chat_session.get_messages())


def _get_user_session() -> ChatSession:
    """
    If a ChatSession exists for the current user, return it.
    Otherwise create a new one and store its ID in the Flask session.
    """
    chat_session_id = session.get("chat_session_id")
    chat_session = chat_sessions.get(chat_session_id) if chat_session_id else None
    if not chat_session:
        chat_session = ChatSession()
        chat_sessions[chat_session.session_id] = chat_session
        session["chat_session_id"] = chat_session.session_id
    return chat_session

Here’s what’s happening in the above code:

We are using the dotenv module to read the environment variables from the .env file.

We also need to set up another environment variable in the .env file with the name CHAT_APP_SECRET_KEY. The value for this variable can be a random string. We are reading it and using it as the secret key in the Flask app. Flask requires this for setting up the user session.

The _get_user_session() function is a utility function used to retrieve the ChatSession associated with the current user. We are using the Flask session to associate one ChatSession object with each user. In the session, we store the chat session ID of the user. If we find an ID in the session, we look up the corresponding ChatSession object from the chat_sessions dictionary. If the current user does not have an existing session, we create a new one and store its ID in the session.

The index function is mapped to the root of the application. It retrieves the ChatSession for the current user and returns the chat UI template. If the current session had an ongoing conversation, we send all those messages to the template so that it can show the historical conversation to the user.

We are now ready to test the application. However, we need to create a startup file to launch the Flask app. In the root of the project (chatgpt_plugins), we will create a file called run.py and put the following boilerplate code in it, which enables us to run the application.

from app.routes import app

if __name__ == '__main__':
    # host and port are separate arguments
    app.run(host='0.0.0.0', port=5000, debug=True)

At this point, we can do a test run of the chat. Let’s do that. In the terminal, we need to run the following command while in the root directory of the project (chatgpt_plugins).

flask --app run.py run

If everything goes as planned, this should start the Flask server on port 5000. You should be able to access the application UI at http://localhost:5000, and it should look like the screenshot below:

I entered the message “Hello, world”, but nothing happened. This is expected because we don’t have code for returning the response from ChatGPT yet. Let’s do that next.

Generating ChatGPT Response

We just need to change a few lines in routes.py to add the /chat endpoint that the UI calls when a message is submitted. The following additions go in routes.py:

@app.route('/chat', methods=['POST'])
def chat():
    message: str = request.json['message']
    chat_session = _get_user_session()
    chatgpt_message = chat_session.get_chatgpt_response(message)
    return jsonify({"message": chatgpt_message})

This is it! The UI sends the user’s message in the JSON body of the request, which we read on the first line of the chat() function. We pass that message to the get_chatgpt_response() function, and return the response from ChatGPT back to the UI.

Let’s try running the app again and see if we get a response back from ChatGPT.

It works! Now that the basic chat functionality is working, we can start implementing plugins.

Adding Support for ChatGPT Like Plugins

In order to incorporate multiple plugins, such as a web search plugin or a python code interpreter plugin, we need to establish an interface for the plugins. Once a plugin meets the requirements of this interface, we can call it without concerns about the specific plugin implementation. To accomplish this, we will create a plugins package within the chat package, as outlined below:

# assuming we are in the root of our project
mkdir app/chat/plugins
touch app/chat/plugins/__init__.py

Next, we will define the plugin interface in plugin.py, as shown below:

# plugin.py
from abc import ABC, abstractmethod
from typing import Dict


class PluginInterface(ABC):

    @abstractmethod
    def get_name(self) -> str:
        """
        return the name of the plugin (should be snake case)
        """
        pass

    @abstractmethod
    def get_description(self) -> str:
        """
        return a detailed description of what the plugin does
        """
        pass

    @abstractmethod
    def get_parameters(self) -> Dict:
        """
        Return the list of parameters to execute this plugin in the form of
        JSON schema as specified in the OpenAI documentation:
        https://platform.openai.com/docs/api-reference/chat/create#chat/create-parameters
        """
        pass

    @abstractmethod
    def execute(self, **kwargs) -> Dict:
        """
        Execute the plugin and return a JSON serializable dict.
        The parameters are passed in the form of kwargs
        """
        pass

PluginInterface is an abstract class which defines the APIs that any plugin implementation should follow. Let’s discuss these APIs in detail.

The get_name, get_description, and get_parameters methods return the name, description, and required parameters of the plugin, respectively. This information is necessary to pass as function parameters to the chat completions API, which we discussed earlier in this article. The plugin's description is particularly important because the LLM determines which function to call based on the information provided in the description.

The execute method is called when we want to execute the plugin. This method should return a JSON serializable dictionary because the result will be returned to the LLM, which will extract any relevant information from it. Keeping the return value as JSON makes the interface flexible because different plugins may have varying complexities in their return values.
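To see the interface in action before moving on to a real plugin, here is a small sketch that wraps the square function from the introduction. SquarePlugin is a hypothetical example and not part of the project; the interface is repeated in trimmed form only so that the snippet runs on its own.

```python
from abc import ABC, abstractmethod
from typing import Dict


class PluginInterface(ABC):
    """Trimmed copy of the interface above so the example runs on its own."""

    @abstractmethod
    def get_name(self) -> str: ...

    @abstractmethod
    def get_description(self) -> str: ...

    @abstractmethod
    def get_parameters(self) -> Dict: ...

    @abstractmethod
    def execute(self, **kwargs) -> Dict: ...


class SquarePlugin(PluginInterface):
    """Hypothetical plugin wrapping the square function from earlier."""

    def get_name(self) -> str:
        return "square"

    def get_description(self) -> str:
        return "square the given number"

    def get_parameters(self) -> Dict:
        return {
            "type": "object",
            "properties": {
                "x": {"type": "integer", "description": "The number to be squared"}
            },
        }

    def execute(self, **kwargs) -> Dict:
        # The arguments arrive as keyword arguments decoded from the model's JSON.
        return {"result": kwargs["x"] ** 2}


plugin = SquarePlugin()
print(plugin.get_name(), plugin.execute(x=12))
```

Because PluginInterface is an abstract class, a plugin that forgets to implement one of the four methods fails when it is instantiated rather than later, in the middle of a chat.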

A Web Search Plugin

Now, let's implement our first plugin. We'll start by creating a simple web search plugin using the Brave search API. If you're following along, you'll need to create a free account with Brave and obtain an API key for the free plan. Although they ask for credit card information, the free plan doesn't charge anything. It limits us to 1 request per second and 2000 requests per month, which is sufficient for this toy project. Once you have obtained the key, add a new environment variable to the .env file as follows:

BRAVE_API_KEY="<your Brave API key>"

Next, in the plugins package, create a new file called websearch.py to contain the implementation of the web search plugin. Here’s the code for it:

from .plugin import PluginInterface
from typing import Dict
import requests
import os

BRAVE_API_KEY = os.getenv("BRAVE_API_KEY")
BRAVE_API_URL = "https://api.search.brave.com/res/v1/web/search"


class WebSearchPlugin(PluginInterface):
    def get_name(self) -> str:
        """
        return the name of the plugin (should be snake case)
        """
        return "websearch"

    def get_description(self) -> str:
        return """
        Executes a web search for the given query
        and returns a list of snippets of matching
        text from top 10 pages
        """

    def get_parameters(self) -> Dict:
        """
        Return the list of parameters to execute this plugin in the form of
        JSON schema as specified in the OpenAI documentation:
        https://platform.openai.com/docs/api-reference/chat/create#chat/create-parameters
        """
        parameters = {
            "type": "object",
            "properties": {
                "q": {
                    "type": "string",
                    "description": "the user query"
                }
            }
        }
        return parameters

    def execute(self, **kwargs) -> Dict:
        """
        Execute the plugin and return a JSON response.
        The parameters are passed in the form of kwargs
        """
        headers = {
            "Accept": "application/json",
            "X-Subscription-Token": BRAVE_API_KEY
        }
        params = {
            "q": kwargs["q"]
        }
        response = requests.get(BRAVE_API_URL,
                                headers=headers,
                                params=params)
        if response.status_code == 200:
            results = response.json()['web']['results']
            # Keep only the text snippet of each result to save context space
            snippets = [r['description'] for r in results]
            return {"web_search_results": snippets}
        else:
            return {"error":
                    f"Request failed with status code: {response.status_code}"}

All of this is very straightforward. The WebSearchPlugin simply implements the interface defined in the PluginInterface class. The execute function makes an API call to the Brave search API and returns the response.

I should note that the full response from Brave is extensive. However, since we are using the 4k context version of GPT-3.5, we have limited space. Therefore, instead of sending all the details to ChatGPT, we are taking a shortcut by only including snippets of the top 10 matching results. It would have been better to also send the URLs to ChatGPT so that it could scrape the text of those results if needed to provide an answer.
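As a sketch of that improvement, the helper below keeps the title and URL next to a shortened snippet for each result. The function summarize_results is hypothetical, and it assumes that each Brave result carries "title", "url", and "description" keys; only "description" is confirmed by the plugin code above, so check the actual response before relying on the other two.

```python
from typing import Dict, List


def summarize_results(results: List[Dict], limit: int = 10,
                      max_chars: int = 200) -> List[Dict]:
    """Keep title, URL, and a shortened description for the top results.

    Assumption: each result dict has "title", "url", and "description" keys.
    Missing keys fall back to an empty string.
    """
    summary = []
    for result in results[:limit]:
        summary.append({
            "title": result.get("title", ""),
            "url": result.get("url", ""),
            # Truncate the snippet so many results still fit in the context.
            "snippet": result.get("description", "")[:max_chars],
        })
    return summary


fake_results = [
    {"title": "Example page", "url": "https://example.com",
     "description": "x" * 500},
    {"description": "a result without title or url"},
]
print(summarize_results(fake_results, limit=1, max_chars=20))
```

Truncating each snippet trades some detail for room in the 4k context window, and the limits here are arbitrary values chosen for the example.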

Integrating the Web Search Plugin into the Chat Application

Let's test whether this integration works by adding the web search plugin to the chat application. We need to make a few small changes in the chat module. Open chat.py and make the following modifications:

from .plugins.plugin import PluginInterface
from .plugins.websearch import WebSearchPlugin

SYSTEM_PROMPT = """
You are a helpful AI assistant. You answer the user's queries.
When you are not sure of an answer, you take the help of
functions provided to you.
NEVER make up an answer if you don't know, just respond
with "I don't know" when you don't know.
"""


class ChatSession:
    def __init__(self):
        self.session_id = str(uuid.uuid4())
        self.conversation = Conversation()
        self.plugins: Dict[str, PluginInterface] = {}
        self.register_plugin(WebSearchPlugin())
        self.conversation.add_message("system", SYSTEM_PROMPT)

    def register_plugin(self, plugin: PluginInterface):
        """
        Register a plugin for use in this session
        """
        self.plugins[plugin.get_name()] = plugin

    def _get_functions(self) -> List[Dict]:
        """
        Generate the list of functions that can be passed to the chatgpt
        API call.
        """
        return [self._plugin_to_function(p) for
                p in self.plugins.values()]

    def _plugin_to_function(self, plugin: PluginInterface) -> Dict:
        """
        Convert a plugin to the function call specification as
        required by the ChatGPT API:
        https://platform.openai.com/docs/api-reference/chat/create#chat/create-functions
        """
        function = {}
        function["name"] = plugin.get_name()
        function["description"] = plugin.get_description()
        function["parameters"] = plugin.get_parameters()
        return function

    def _execute_plugin(self, func_call) -> str:
        """
        If a plugin exists for the given function call, execute it.
        """
        func_name = func_call.get("name")
        print(f"Executing plugin {func_name}")
        if func_name in self.plugins:
            arguments = json.loads(func_call.get("arguments"))
            plugin = self.plugins[func_name]
            plugin_response = plugin.execute(**arguments)
        else:
            plugin_response = {"error": f"No plugin found with name {func_name}"}

        # We need to pass the plugin response back to ChatGPT
        # so that it can process it. In order to do this we
        # need to append the plugin response into the conversation
        # history. However, this is just temporary so we make a
        # copy of the messages and then append to that copy.
        print(f"Response from plugin {func_name}: {plugin_response}")
        messages = list(self.conversation.conversation_history)
        messages.append({"role": "function",
                         "content": json.dumps(plugin_response),
                         "name": func_name})
        next_chatgpt_response = self._chat_completion_request(messages)

        # If ChatGPT is asking for another function call, then
        # we need to call _execute_plugin again. We will keep
        # doing this as long as ChatGPT keeps returning function_call
        # in its response. Although it might be a good idea to
        # cut it off at some point to avoid an infinite loop where
        # it gets stuck in a plugin loop.
        if next_chatgpt_response.get("function_call"):
            return self._execute_plugin(next_chatgpt_response.get("function_call"))
        return next_chatgpt_response.get("content")

    def get_chatgpt_response(self, user_message: str) -> str:
        """
        For the given user_message,
        get the response from ChatGPT
        """
        self.conversation.add_message("user", user_message)
        try:
            chatgpt_response = self._chat_completion_request(
                self.conversation.conversation_history)
            if chatgpt_response.get("function_call"):
                chatgpt_message = self._execute_plugin(
                    chatgpt_response.get("function_call"))
            else:
                chatgpt_message = chatgpt_response.get("content")
            self.conversation.add_message("assistant", chatgpt_message)
            return chatgpt_message
        except Exception as e:
            print(e)
            return "something went wrong"

The code above shows the additions and modifications we have made in order to integrate plugins. Let's briefly discuss them.

We have made a small change in the SYSTEM_PROMPT in order to indicate to the ChatGPT API that it needs to use functions as needed. I am not sure this is really necessary, though, because OpenAI’s docs say that the latest versions of the models are fine-tuned to automatically generate function calls as needed.

In the ChatSession class, we have added a dictionary to maintain a mapping of the plugins and their names. There is a register_plugin method that can be called to register a new plugin. Currently, we are enabling the web search plugin ourselves in the __init__ method.

The _get_functions() method is a utility function used to generate the functions parameter to be passed to the ChatGPT API if any plugins are registered.

Finally, the _execute_plugin() method is the core of this implementation. If the ChatGPT API call returns a function_call in its response, we call _execute_plugin with the value of the function_call field as returned in the response. The requested plugin is executed and its response is sent back to the ChatGPT API for processing. If ChatGPT needs to call another function to perform its task, it may request another function_call in its response. As long as it continues to ask for function calls, we will continue to call _execute_plugin(). This can lead to an infinite loop if the model gets stuck, but that is not handled here to keep things simple.

In the get_chatgpt_response() method, we have added a check to see if the ChatGPT API has returned a function_call in its response. If it has requested a function call, we call _execute_plugin(). Otherwise, we return the message from ChatGPT back to the UI.
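The infinite loop mentioned above could be avoided by capping the number of rounds. Here is a minimal sketch of that idea, not the code used in this project: the names run_with_plugins, MAX_FUNCTION_CALLS, and fake_complete are all hypothetical, and the complete argument stands in for the real chat completions request so that the example runs without an API key. Unlike _execute_plugin, this version also keeps the model's own function_call message and every earlier plugin result in the temporary copy.

```python
import json
from typing import Callable, Dict, List

MAX_FUNCTION_CALLS = 5  # arbitrary cap chosen for this sketch


def run_with_plugins(
    messages: List[Dict],
    plugins: Dict[str, Callable[..., Dict]],
    complete: Callable[[List[Dict]], Dict],
) -> str:
    """Call the model repeatedly, executing plugins until it answers in text.

    `complete` stands in for the chat completions request and returns the
    assistant message as a dict. All names here are hypothetical.
    """
    working = list(messages)  # temporary copy, as in _execute_plugin
    for _ in range(MAX_FUNCTION_CALLS):
        reply = complete(working)
        call = reply.get("function_call")
        if not call:
            return reply.get("content") or ""
        name = call["name"]
        if name in plugins:
            result = plugins[name](**json.loads(call["arguments"]))
        else:
            result = {"error": f"No plugin found with name {name}"}
        # Keep both the model's request and the plugin output in the copy so
        # the next round can see every earlier result.
        working.append(reply)
        working.append({"role": "function", "name": name,
                        "content": json.dumps(result)})
    return "Stopped: too many function calls"


def fake_complete(history: List[Dict]) -> Dict:
    """Scripted stand-in for the API: ask for square once, then answer."""
    if history[-1]["role"] == "function":
        value = json.loads(history[-1]["content"])["result"]
        return {"role": "assistant", "content": f"The answer is {value}"}
    return {"role": "assistant", "content": None,
            "function_call": {"name": "square", "arguments": '{"x": 4}'}}


answer = run_with_plugins(
    [{"role": "user", "content": "Square 4"}],
    {"square": lambda x: {"result": x * x}},
    fake_complete,
)
print(answer)
```

Using a loop instead of recursion makes the cap easy to enforce, and passing the completion function in as an argument keeps the logic testable without network access.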

With these changes, we are ready to try out the web search feature. Let's see it in action.

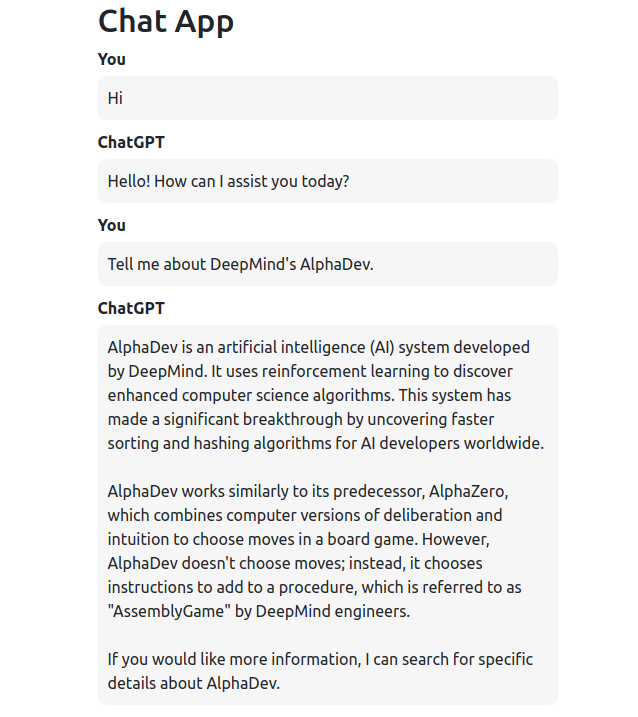

As you can see, when asked for something recent like DeepMind's AlphaDev, it is able to provide accurate information because behind the scenes it is calling our websearch plugin to retrieve this information.

Implementing a Python Code Interpreter Plugin

Our plugin ecosystem wouldn't be complete without a Python code interpreter plugin. Let's quickly implement that. In the plugins module, we will create a pythoninterpreter.py file and add the following code.

from .plugin import PluginInterface
from typing import Dict
from io import StringIO
import sys
import traceback


class PythonInterpreterPlugin(PluginInterface):
    def get_name(self) -> str:
        """
        return the name of the plugin (should be snake case)
        """
        return "python_interpreter"

    def get_description(self) -> str:
        return """
        Execute the given Python code and return the result from stdout.
        """

    def get_parameters(self) -> Dict:
        """
        Return the list of parameters to execute this plugin in
        the form of JSON schema as specified in the
        OpenAI documentation:
        https://platform.openai.com/docs/api-reference/chat/create#chat/create-parameters
        """
        return {
            "type": "object",
            "properties": {
                "code": {
                    "type": "string",
                    "description": "Python code which is to be executed"
                }
            }
        }

    def execute(self, **kwargs) -> Dict:
        """
        Execute the plugin and return a JSON response.
        The parameters are passed in the form of kwargs
        """
        output = StringIO()
        try:
            # Use one namespace for both globals and locals so that functions
            # defined in the generated code can see its other top-level names.
            namespace = {}
            # Redirect stdout so that anything the code prints can be captured.
            sys.stdout = output
            exec(kwargs['code'], namespace)
            result = output.getvalue()
            if not result:
                return {'error': 'No result written to stdout. Please print result on stdout'}
            return {"result": result}
        except Exception:
            error = traceback.format_exc()
            return {"error": error}
        finally:
            # Always restore the real stdout, even if the code raised.
            sys.stdout = sys.__stdout__

The plugin accepts one parameter, which is the code generated by ChatGPT itself. This code is executed in order to provide an answer to the user's query. The core functionality of the plugin lies in the execute method. Executing arbitrary code within our application's process is highly dangerous and should be avoided. However, since this is only a toy code interpreter plugin and not meant for production, let's keep things simple.

To execute the code, we pass it directly to the exec function. Since we know nothing about the code being executed, we have no general way to obtain its result. To get around this, I am making ChatGPT print the result on stdout so that I can read it from there. By redirecting sys.stdout to a StringIO object, we can read whatever is written to stdout. Although this approach is not entirely safe or reliable, it suffices for this limited demo. A slightly better alternative would be to spawn a new Python process, execute the code within that process, and read its stdout.
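For reference, the separate-process alternative could look roughly like the sketch below. It is not part of the repository: run_code_in_subprocess and timeout_seconds are hypothetical names, and the error messages simply mirror the ones used in the plugin above. It relies only on the standard library's subprocess.run.

```python
import subprocess
import sys
from typing import Dict


def run_code_in_subprocess(code: str, timeout_seconds: float = 10.0) -> Dict:
    """Run `code` in a fresh Python process and capture what it prints.

    Hypothetical helper: the name and the timeout default are assumptions.
    """
    try:
        completed = subprocess.run(
            [sys.executable, "-c", code],  # same interpreter, new process
            capture_output=True,           # collect stdout and stderr
            text=True,                     # decode output to str
            timeout=timeout_seconds,       # do not wait forever on stuck code
        )
    except subprocess.TimeoutExpired:
        return {"error": f"Code did not finish within {timeout_seconds} seconds"}
    if completed.returncode != 0:
        # The traceback of an uncaught exception ends up on stderr.
        return {"error": completed.stderr}
    if not completed.stdout:
        return {"error": "No result written to stdout. Please print result on stdout"}
    return {"result": completed.stdout}
```

This keeps the generated code from touching our application's own state and lets us enforce a timeout, but it is still not a sandbox: the child process has the same file system and network access as the application.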

Despite the aforementioned drawbacks of this plugin's implementation, we can still integrate it into the application and test it. To do so, we just need to register the plugin in chat.py as shown below:

from .plugins.pythoninterpreter import PythonInterpreterPlugin
...
class ChatSession:
    def __init__(self):
        …
        self.register_plugin(PythonInterpreterPlugin())

Let’s give it a try.

As you can see, with the addition of the code interpreter plugin, the ChatGPT model is now capable of performing mathematical operations. Behind the scenes, it simply generates a call to our plugin.
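To make "generates a call to our plugin" a bit more concrete: the function_call returned by the API carries the plugin name and a JSON-encoded string of arguments, which the application has to parse before invoking the plugin. The sketch below illustrates that step only; dispatch_function_call and fake_interpreter are hypothetical stand-ins (plain callables are used instead of the PluginInterface classes to keep the example self-contained), and the example call is made up for illustration.

```python
import json
from typing import Callable, Dict


def dispatch_function_call(
    function_call: Dict, plugins: Dict[str, Callable[..., Dict]]
) -> Dict:
    """Look up the requested plugin and call it with the decoded arguments."""
    name = function_call.get("name")
    plugin = plugins.get(name)
    if plugin is None:
        return {"error": f"Unknown plugin: {name}"}
    try:
        # "arguments" arrives as a JSON string, not as a dict.
        arguments = json.loads(function_call.get("arguments") or "{}")
    except json.JSONDecodeError as e:
        return {"error": f"Could not parse arguments as JSON: {e}"}
    if not isinstance(arguments, dict):
        return {"error": "Arguments must be a JSON object"}
    return plugin(**arguments)


def fake_interpreter(code: str) -> Dict:
    # Stand-in for the real plugin: it only echoes the code it was given.
    return {"result": f"would run: {code}"}


# A made-up function_call of the shape the model returns for a math question.
example_call = {
    "name": "python_interpreter",
    "arguments": json.dumps({"code": "print(17 * 23)"}),
}
print(dispatch_function_call(example_call, {"python_interpreter": fake_interpreter}))
```

Returning an error dictionary instead of raising lets the application send the problem back to the model, which can then retry with corrected arguments.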

Wrapping Up

It is time to conclude this rather lengthy post. In this post, we have learned about the new function call feature introduced for GPT-4 and GPT-3.5 chat models, and we have explored how this feature can be utilized for sophisticated use-cases, such as implementing ChatGPT-like plugins. The possibilities with this feature are endless. For example, you could also create agents similar to AutoGPT or more specialized agents with a specific set of functions. The only limit is your imagination.

Resources

All the code shown in this article is available on GitHub under MIT license. Feel free to use it: https://github.com/abhinav-upadhyay/chatgpt_plugins

If you do decide to build apps using function calls, there are a few rough edges you need to be aware of. I talk about them in my next article.


The plugin integration part is missing from the blog. I copied this part from the GitHub source code (chat.py) to get the plugin features to work.

if self.plugins:
    json_data.update({"functions": self._get_functions()})
